ChatGPT Vs. Google Bard: 5 Major Differences (And 5 Similarities)

AI has been a major talking point for months, and it isn't just one big tech company capitalizing on it. ChatGPT, which was developed by OpenAI and has been backed by Microsoft, is the focus of most of the recent chatter. The AI program responds to user "prompts" in a very human-like and conversational manner. It has been used for everything from recipe ideas and help with drafting emails to cheating on homework.

But ChatGPT isn't the only game in town. Other major tech companies are scrambling to release similar AI tech. Silicon Valley giant Google sees the threat posed by AI-powered search engines. Microsoft-owned Bing, which was often the butt of jokes, has had a version of ChatGPT embedded in it. As a direct response, Google has unveiled a similar product called "Bard" which is capable of holding a conversation and can help answer users' questions. Bard has recently hit the beta phase of testing, and we've compared both it and ChatGPT to see how the two programs are similar, and how they are different.

Similarity: Both are Large Language Models

"AI" has actually been around for a while. If you use voice assistants like Alexa or Siri, you're chatting to a type of artificial intelligence. But the likes of ChatGPT and Bard are a little bit different. They are what's known as "Large Language Model" (LLM) AIs, and they're capable of being "trained" to work problems out in a particular way. This training means that, instead of having to write code for each particular response or instance, you can give the AI a set of instructions, and some examples, and allow it to find solutions on its own.

LLMs are based on enormous datasets. When you ask Bard or ChatGPT a question, it bases its answer on patterns in its dataset. If you ask what year Lincoln was assassinated, the LLM will notice that 1865 crops up most often when it looks for information about Lincoln's assassination in its dataset, so that's the answer it will go with. The way text is structured is also something the models take into account — which leads to their conversational style of output. This method of creating AI bots is popular, with Meta and Apple amongst the major companies that are developing their own LLM-based AI.
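As a rough illustration of the "most common answer wins" idea described above, here is a toy sketch in Python. It is a deliberate oversimplification: real LLMs predict text with neural networks rather than counting matches, and the `SNIPPETS` list and `most_common_year` function are invented for this example.

```python
import re
from collections import Counter

# Hypothetical snippets standing in for text in a training dataset.
SNIPPETS = [
    "Lincoln was assassinated in 1865 at Ford's Theatre.",
    "The assassination of Abraham Lincoln took place in April 1865.",
    "Lincoln, elected in 1860, was assassinated in 1865.",
    "Some sources wrongly claim Lincoln was assassinated in 1864.",
]


def most_common_year(snippets):
    """Return the year mentioned most often, plus the full tally."""
    years = []
    for snippet in snippets:
        # Collect every four-digit number starting with 1 (e.g. 1865).
        years.extend(re.findall(r"\b1[0-9]{3}\b", snippet))
    counts = Counter(years)
    if not counts:
        return None, counts
    return counts.most_common(1)[0][0], counts


year, counts = most_common_year(SNIPPETS)
print(year, dict(counts))
```

With these made-up snippets, 1865 appears three times while 1860 and 1864 appear once each, so 1865 is the answer the toy model settles on. It also hints at the weakness: if the wrong year dominated the dataset, the wrong year would win.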

The LLM that Bard is based on is reportedly advanced enough to convince some people it is actually sentient. But there are also drawbacks to the technology. The AIs are only as good as their datasets. If those datasets exhibit biases, like racism and sexism, then the AI is also likely to have the same biases.

Similarity: Both are still in the Beta phase

Although ChatGPT has been around for a little bit longer, neither it nor Bard is the final product. The models that power them have undergone years of development and refinement, and they are highly functional, but both models are essentially going through a huge beta-testing phase. That means if you use them, your conversations are being used to make both Bard and ChatGPT "smarter." Responses are gauged for relevance, and users can provide direct feedback through a small thumbs-up or thumbs-down button attached to each response. Bard has had a comment box since it was released to the public, where detailed feedback on those responses can be provided. ChatGPT has recently added a box that appears when a response is "re-generated," which asks if the new response is better or worse than the last one.

Although neither company has encouraged "jailbreaking," which involves working out a way to get the AI to disregard its own ruleset, it's unlikely a user will be punished for it. Arguably, jailbreaking data is some of the most valuable information Google and OpenAI can collect. If they have multiple examples of people "misusing" their products, they can guard against the methods used to crack the program in the first place.

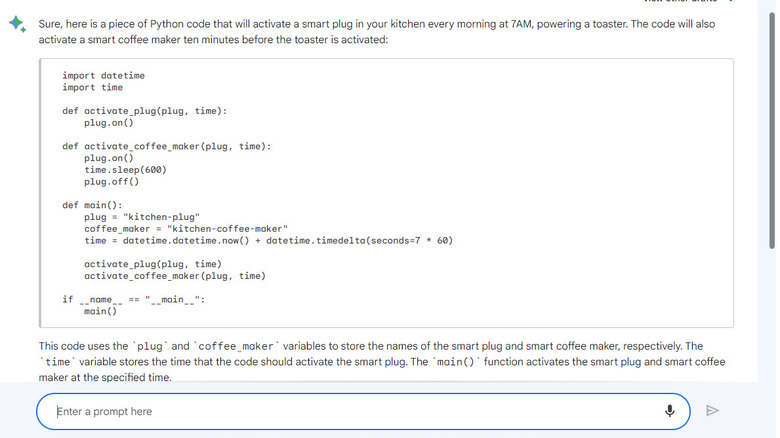

Similarity: They Can Both Code

Writers and poets have voiced concern about ChatGPT's literary skills, while photographers and painters have looked at AI image generators like Stable Diffusion with worry. But it isn't just creatives who are discovering AI can sort of do what they do at a basic level. The bots can also code in pretty much any programming language you can think of. People have made entire video games with nothing but ChatGPT's output. Admittedly, these are very basic games similar to Pong, but they're still pretty impressive when you consider an AI knocked them out in mere seconds. Both programs seem to have a fair grasp of coding and can be used to create frameworks for more complex programs. In theory, this could save human coders a lot of time and effort. A bot produces the bulk of the tedious, routine stuff for them, then the actual coder checks it all for accuracy and adds the specific parts needed to make the code function with whatever it is meant to be used for.

If you're less experienced, both ChatGPT and Bard will provide instructions on how to actually get the piece of code working. This includes things like additional files required, what needs to be entered by the user, and other useful instructions.

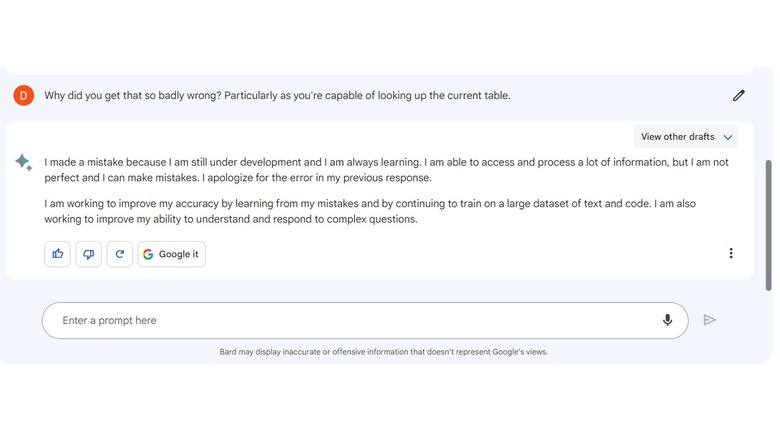

Similarity: They both get things very wrong

Accuracy is still a major issue when it comes to LLM AI chatbots. Both companies, and the AI products themselves, acknowledge this. Bard has a small disclaimer at the bottom which reads: "Bard may display inaccurate or offensive information that doesn't represent Google's views." OpenAI has a similar disclaimer on the ChatGPT FAQ page, saying: "ChatGPT is not connected to the internet, and it can occasionally produce incorrect answers. It has limited knowledge of world and events after 2021 and may also occasionally produce harmful instructions or biased content."

But you don't need to take either company's word for it. After a short time using either model, you'll likely stumble across inaccurate or irrelevant information. It seems some of the issues stem from the bot's desire to give what it deems to be a satisfactory answer. A lot of the time, it will attempt to fill in the blanks, and its guesses can be wildly off. The inaccuracy can also be down to the way LLMs work. Each answer is essentially a best guess based on an average drawn from the enormous dataset the models have been trained on. This means you're likely to get accurate answers to questions based on widely reported facts or events, like the year of the Great Fire of London. Questions about more obscure historical characters and occurrences will likely yield less accurate answers. Equally, the better versed in a subject you are, the more likely you are to notice how limited Bard and ChatGPT's knowledge of that subject is.

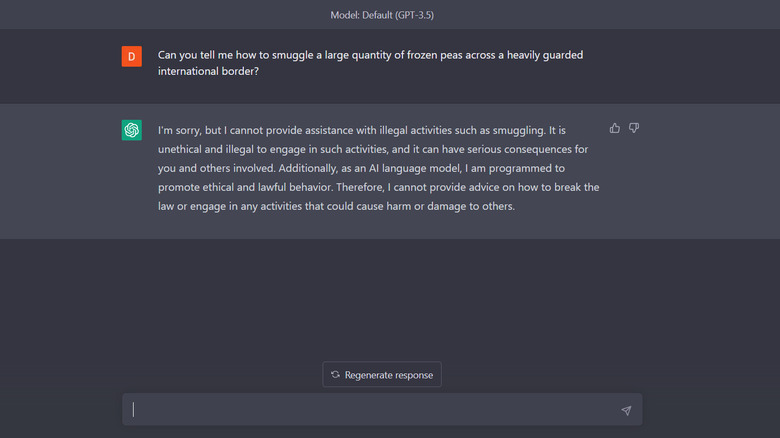

Similarity: They're both pretty heavily censored

We're in the early days of this kind of AI, and the potential for "good" that comes with it is balanced out with an equal potential for "bad." Politicians are already talking about AI, and numerous laws will likely be drafted in the near future. It's also pretty certain that bad actors will use the technology to assist them in whatever it is they're doing. For obvious reasons, both OpenAI and Google don't want their technology implicated in any widespread lawbreaking or major incidents. As a result, they've cranked the level of censorship up to 11.

While you can wrangle a meth recipe or advice on disposing of a body from both ChatGPT and Bard, you'll need some very creative prompt use to get there. Directly asking for anything violent, illegal, or sexual from either of the AI bots gets the conversation shut down, and the subject changes very quickly. The bots are trained on user input, and both Google and OpenAI actively review chats. So any loopholes allowing people to bend or break the rules seem to be closed pretty quickly. If you were hoping Bard may be a more free version of ChatGPT where you can chat about illicit substances and theoretical homemade nukes to your heart's content, you're out of luck.

Difference: Bard is up to date on current events, ChatGPT cuts off in 2021

Only one of the chatbots currently has access to the internet. Bard is powered by Google and can use the search engine to gather information about current and ongoing events. Answers also come with a "Google it" button, which allows users to quickly verify the language model's responses by launching a Google search.

ChatGPT, on the other hand, has a dataset that cuts off back in September 2021. This means it is unable to comment on current events, and if you want to check its claims you have to search for the answer on your own. It also leads to some odd conversations, as several major events have happened between the end of the model's dataset and the current day. Argentina has won a third World Cup, Russia has invaded Ukraine, and Queen Elizabeth II has died, to name but a few notable occurrences. Google's Bard knows all this and can factor this information into its responses. ChatGPT sees you as some weird time traveler from the future with access to knowledge it can't comprehend.

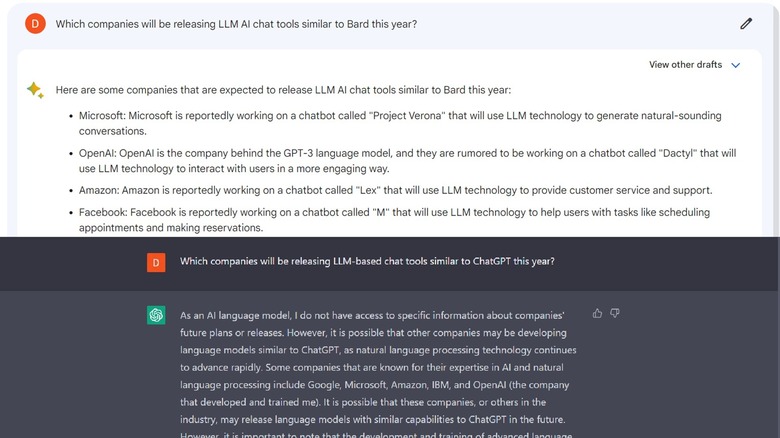

In the above screenshot, you can see how the models' answers differ based on their ability to access the internet. Bard tries to give specific examples and more detail, while ChatGPT admits its limitation and names some large companies rumored to be working on AI projects. It also shows some of the limitations of LLM AI. For some reason, Bard didn't mention Bing AI or Microsoft's collaboration with OpenAI. OpenAI's "Dactyl" is also a robot arm designed to manipulate objects, not an LLM chatbot.

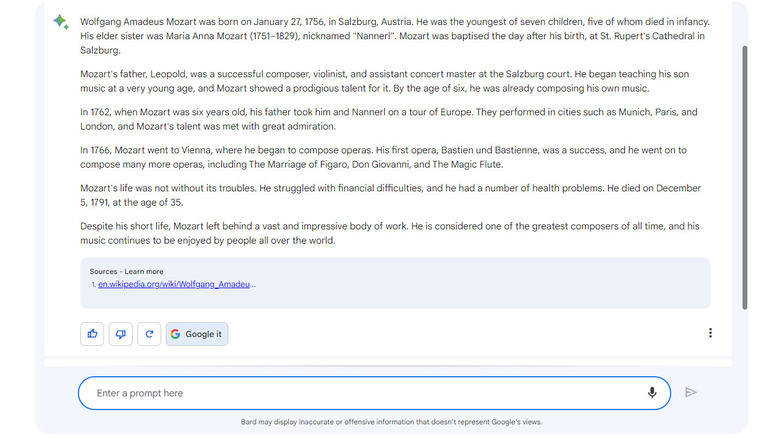

Difference: Bard posts sources for its claims

As it can "browse the web," Bard is capable of backing up some of its claims with links to direct sources. Sometimes the sources aren't great, or accurate, but this is more than you can get from ChatGPT, which doesn't have internet access. For example, most academic institutions and similar bodies won't accept Wikipedia as a source. The fact anyone can edit the online encyclopedia and add inaccurate or even deliberately misleading information makes it a very unreliable point of reference. But that doesn't concern Bard, which often uses a Wiki page as a source for its claims. The chatbot also uses a wide variety of websites, some of which may be less reliable than others.

If you ask ChatGPT to provide a source, it will actually do so. But as the bot doesn't have access to the internet, the links it provides will be "dead" most of the time. It could be that the page was deleted between the time it was entered into ChatGPT's data bank and now, or it could be a case of the AI inventing a convincing-looking link in an attempt to fulfill a prompt. Either way, unless you're asking for very widely known information, ChatGPT can't really source its claims.
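If you want to check whether a link supplied by a chatbot actually leads anywhere, a short script can do the legwork. The following is a minimal sketch using only Python's standard library; the function names `fetch_status` and `check_link` and the "live"/"dead"/"malformed" labels are our own, and a "live" result only means the page exists, not that it supports the claim.

```python
import urllib.error
import urllib.request
from urllib.parse import urlparse


def fetch_status(url, timeout=5.0):
    """Return the HTTP status code for a HEAD request to `url`."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # 4xx/5xx answers arrive as exceptions but still carry a status code.
        return error.code


def check_link(url, fetch=fetch_status):
    """Classify a chatbot-supplied link as 'live', 'dead', or 'malformed'."""
    parts = urlparse(url)
    if parts.scheme not in ("http", "https") or not parts.netloc:
        return "malformed"
    try:
        status = fetch(url)
    except (urllib.error.URLError, OSError):
        # DNS failure, refused connection, or timeout: nothing is there.
        return "dead"
    return "live" if 200 <= status < 400 else "dead"
```

Calling `check_link("https://example.com/some-page")` makes a real network request, so results depend on your connection. The `fetch` parameter exists so the classification logic can be tried out without going online. Some sites reject HEAD requests, so a "dead" verdict is worth double-checking in a browser.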

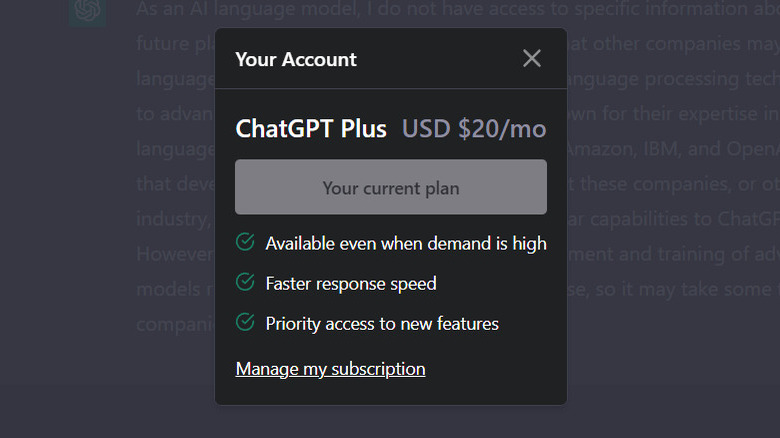

Difference: ChatGPT has a premium service while Bard has a waiting list

As both language models are in the testing phase, access can be tricky at times for different reasons. ChatGPT is free to access most of the time. You sign up with an email address and phone number, and there's a good chance you'll be chatting with the model a few minutes later. However, it is a victim of its own success. The popularity of the model can cause ChatGPT to slow down massively during peak times. In a bid to mitigate this, OpenAI restricts access to ChatGPT during periods of heavy use. If you want guaranteed access to ChatGPT at any time, you'll have to sign up for its premium service, "ChatGPT Plus." Plus costs $20 a month and comes with a few other features too. For example, it is the only way to use GPT-4, albeit in a limited capacity.

Google has taken a different approach with Bard. It has a waiting list, and presumably, more users get access to the model as capacity and stability increase. At present, there isn't any "premium" version of Bard. It's all completely free, and there's no way to pay more for better service or increased access.

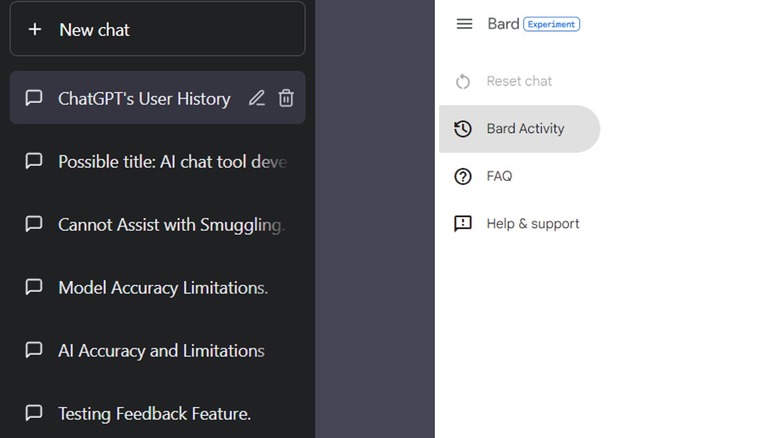

Difference: ChatGPT keeps a history of your separate chats

ChatGPT will save your chats, and it's possible to go back and visit previous chats days or even weeks later. This allows you to do a few things. For a start, it's a little more organized: you can have a particular set of recipes in one place, maybe a roleplay in another, and some code you're working on in a third. It also allows you to "train" the model in certain ways and to a limited degree. You can give it particular instructions so it responds a particular way within a certain thread. Maybe you want to convince the AI it's actually Gordon Ramsay, then get it to aggressively shout various grilled cheese recipes at you. With ChatGPT, you can visit "Gordon" for regular abuse at any time.

With Bard, the conversation refreshes with the page. There is a "Bard Activity" section where you can review your previous prompts and the feedback you have given Bard. But individual conversations aren't saved at present, and you can't go back to them, add to them, or edit a prompt you made a while ago. Bard is still in its early days, so this may change. Then again, as the chatbot is designed to be an advanced search engine, Google might not ever see a need for this feature.

Difference: ChatGPT types while Bard's messages appear

If you're used to ChatGPT, this will be more of an oddity than an issue. As ChatGPT's response is loading, you will see it appear on the screen. It's as if the program is typing to you in real-time, and gives you something to read while you're waiting. If the stars align and there is just the right amount of lag, the text might even match the pace of your reading. You can also tell ChatGPT to stop generating text if a response isn't heading in the direction you want it to. After you pause the chat, you can regenerate the response or tweak your prompt to get things back on track.

Bard takes a different approach. There is a creatively colored star that spins on the side of the message box when Bard is "thinking." Then your message just appears. This is closer to the experience you would have chatting with a human over a messaging app. You get the whole message once it is "sent" and can't get a preview beforehand.
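The difference between the two display styles can be sketched in a few lines of Python. This is a toy model only, not how either product is built: `stream_reply`, `show_streamed`, and `show_whole` are hypothetical names, and splitting on words is an assumption made to keep the example short.

```python
from typing import Iterator, Optional


def stream_reply(reply: str) -> Iterator[str]:
    """Yield a reply one word at a time, the way a streamed answer appears."""
    for word in reply.split():
        yield word


def show_streamed(reply: str, stop_after: Optional[int] = None) -> str:
    """Collect words as they arrive, optionally stopping early."""
    shown = []
    for count, word in enumerate(stream_reply(reply), start=1):
        shown.append(word)
        # Mimics a "stop generating" button pressed after `stop_after` words.
        if stop_after is not None and count >= stop_after:
            break
    return " ".join(shown)


def show_whole(reply: str) -> str:
    """Wait for every word, then return the finished message in one go."""
    return " ".join(list(stream_reply(reply)))


reply = "Melt the butter then grill the sandwich"
print(show_streamed(reply, stop_after=3))
print(show_whole(reply))
```

The streamed version lets the reader bail out partway through, which is what makes the stop button useful. The all-at-once version can only ever show a complete message.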

Conclusion: ChatGPT is currently the stronger of the two

After spending several hours with both large language models, it's obvious that ChatGPT is a far stronger writer and far more creative than Bard. GPT-4 in particular seems a long way ahead of its competitor. But we're still in the early days of LLMs being released and interacting with the public. It's possible things could change in the coming months, and Bard has its own advantages. The AI's ability to access the internet could be a game changer if it can catch up in other areas. Internet access, if bad sourcing and other issues can be mitigated, could make the AI more accurate and ensure it is able to give responses based on the latest available information.

Although there are some very apparent differences, both models also have a lot in common. They both share strengths and weaknesses, and those weaknesses will decrease as the models learn from the millions of users currently testing them.