OpenAI Trains Robot Hand Virtually For Real-World Dexterous Manipulation

OpenAI has trained a humanoid robotic hand system called Dactyl entirely in simulation and has transferred what it learned there to the real world. Dactyl can adapt to real-world physics using techniques that OpenAI has been working on for the last 12 months. Dactyl uses the same general-purpose reinforcement learning algorithm and code as OpenAI Five.

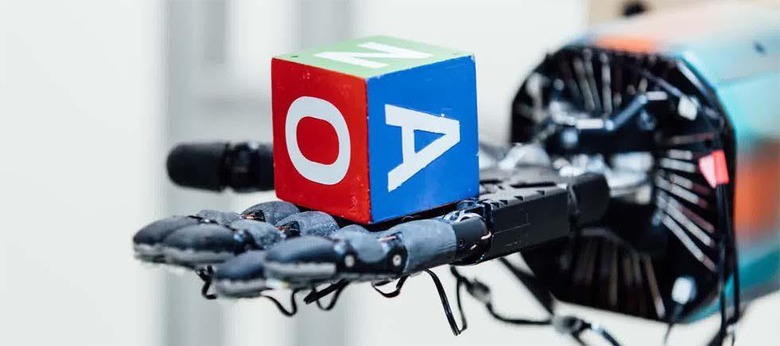

The company says it has shown that agents can be trained in simulation and then use that training to perform real-world tasks, all without a physically accurate model of the world. Dactyl is a system for manipulating objects using a Shadow Dexterous Hand.

In the tests, the hand held a block or prism in its palm and was then asked to reposition the object into a different orientation. OpenAI says that the network observes only the coordinates of the fingertips and images from three RGB cameras positioned around the hand. Reorienting an object in the palm requires several problems to be solved.

These include working in the real world rather than in simulation, and high-dimensional control, since the hand has 24 degrees of freedom. The system must also cope with noisy and partial observations. Finally, the hand needs to be able to reorient multiple kinds of objects, meaning that the approach OpenAI takes can't be limited to a specific object geometry.

OpenAI calls its approach domain randomization: learning in a simulation designed to provide a variety of exercises rather than to maximize realism. The company says that tack allows it to scale up more quickly while de-emphasizing realism. The simulation of the robotics setup was built using the MuJoCo physics engine and uses a "coarse approximation" of the real robot.
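To make the idea of domain randomization more concrete, here is a minimal Python sketch. It is not OpenAI's code and does not use MuJoCo. The parameter names (`friction`, `object_mass_kg`, `obs_noise_std`), the nominal values, and the ranges are all assumptions chosen for illustration. The point is only that each training episode gets a differently perturbed version of a coarse simulator, so a policy cannot overfit to any single set of physics.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SimParams:
    """Hypothetical physics settings for one simulated episode."""
    friction: float
    object_mass_kg: float
    obs_noise_std: float


# Illustrative nominal values only; not taken from OpenAI's setup.
NOMINAL = SimParams(friction=1.0, object_mass_kg=0.08, obs_noise_std=0.0)


def sample_params(rng: random.Random, scale: float = 0.3) -> SimParams:
    """Draw one randomized environment around the nominal values."""
    def jitter(value: float) -> float:
        # Scale the nominal value by a factor in [1 - scale, 1 + scale].
        return value * rng.uniform(1.0 - scale, 1.0 + scale)

    return SimParams(
        friction=jitter(NOMINAL.friction),
        object_mass_kg=jitter(NOMINAL.object_mass_kg),
        obs_noise_std=rng.uniform(0.0, 0.01),
    )


def noisy_observation(true_positions, params: SimParams, rng: random.Random):
    """Add Gaussian noise to positions to mimic imperfect sensing."""
    return [p + rng.gauss(0.0, params.obs_noise_std) for p in true_positions]


def randomized_episodes(n: int, seed: int = 0):
    """Return the randomized parameters for n training episodes."""
    rng = random.Random(seed)
    return [sample_params(rng) for _ in range(n)]
```

In a real training loop, each sampled `SimParams` would be applied to the simulator before an episode starts, and the policy would only ever see observations passed through something like `noisy_observation`.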

SOURCE: OpenAI