10 Of The Worst Graphics Cards Ever Made

The graphics card is the centerpiece of a PC build. It is usually the most expensive component and can be used for gaming and all sorts of productivity work. These days, your choices are mostly between Nvidia, AMD, and Intel GPUs, each of which has its own set of pros and cons based on what you're looking for. Apple also technically makes GPUs as part of its M-series chips, but you don't get a choice if you buy Apple products.

This is in stark contrast to the days when there were tons of GPU makers. We're not talking board partners like XFX or MSI that make AMD and Nvidia graphics cards. In the 1990s, there were legitimate contenders such as 3DFX, Matrox, S3, and many others. These companies eventually folded one way or another, leaving us with the big three (plus Apple) that we know today. There are many differences between all of the graphics chip makers, but one thing they all have in common is that they've all made terrible graphics cards before.

So, let's take a look at some of the bigger failures in the GPU market over the years. For this list, we'll avoid low-hanging fruit like integrated graphics along with ongoing issues like the Nvidia RTX 4090 and 5090 connectors burning out, since we don't know the full conclusion of that just yet. Here are the worst graphics cards we could find.

Nvidia GTX 480 and AMD Radeon R9 390X

Let's start things off with the Nvidia GTX 480 and the AMD Radeon R9 390X. Each of these graphics cards has a different history as they were released in different generations and by different companies. The two of them share one flaw, however, and it's one for which both became exceptionally well known. To put it simply, both of these graphics cards run hot. We're not talking normal hot, as many PC components heat up quite a bit when pushed. These chips ran hot all the time, with temperatures getting up into the 194°F range during gaming and other regular tasks.

For the GTX 480, its penchant for throwing heat was not only well-known but also well-documented. It would routinely climb the temperature charts when pushed, maxing out higher than any other card tested in its era. Rival AMD even made a commercial about it, and fans would poke fun at the GTX 480's grill-style radiator as a legitimate method to cook food (spoiler: you can't, but people did try).

People didn't have nearly as much fun at AMD's expense over the R9 390X, but it ran similarly hot. The reason was largely the same for both cards: an inefficient design drew a ton of power, and wasted power turns into heat. On the plus side, you could heat whole rooms with these things in the winter just by playing your favorite video games.

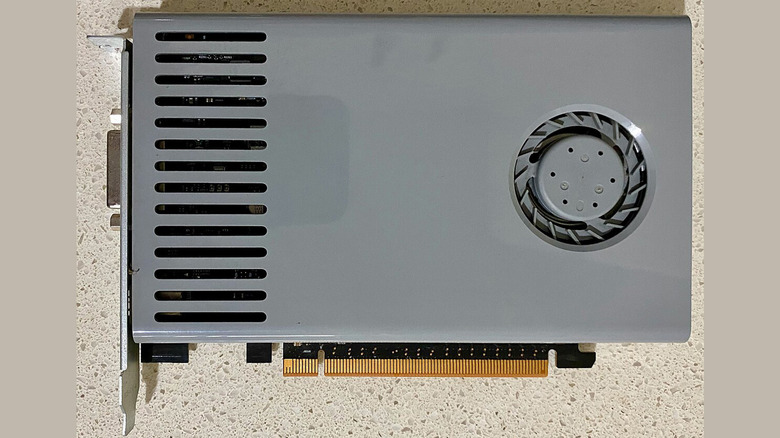

Nvidia Titan Z

There was an era when GPU makers tried to make dual GPUs happen, and the Titan Z was arguably the most excessive and ridiculous attempt of them all. The GPU launched in 2014 as part of Nvidia's 700-series. It featured monster specs for its era and far exceeded the other 700-series cards that launched at around the same time. In fact, it wasn't until the Nvidia GTX 1080 in 2016 that the Titan Z was finally beaten. In terms of raw performance, it was like the RTX 5090 of its era.

The dual-GPU architecture was this card's central flaw. Dual GPUs had their issues, including compatibility with games, performance hiccups, and more. Compounding that was the Titan Z's $2,999 launch price. That's ludicrous even by today's standards, but this was back in 2014 when the top-of-the-line Nvidia GeForce GTX 780 Ti started at $699. It was an exercise in excess through and through, and would unfortunately pave the way for more super-expensive graphics cards down the line.

Perhaps even more egregious is that the money didn't get you much. AMD's dual-GPU competitor, the R9 295X2, was only a couple of percentage points behind the Titan Z in terms of raw performance and cost half as much at $1,499. So, while it was a spec giant, it was a horrible value proposition all around.

AMD Radeon RX 6500 XT

If the Nvidia Titan Z was an exercise in excess, AMD's RX 6500 XT was the polar opposite. AMD released the 6500 XT in 2022 for the low, low price of $199, and it could be argued that even at that bargain basement price, the card wasn't worth it. The biggest knock against the RX 6500 XT is that it was designed to be a laptop GPU. As most enthusiasts know, laptop GPUs are much weaker than their desktop counterparts, so without seeing a single benchmark, many already knew that the 6500 XT was going to be a rough release.

The 6500 XT was only a few percentage points better than the outgoing RX 5500 XT, and the older card actually outperformed its successor in some games. Spending another $130 on the RX 6600 increased performance by a hilariously wide margin. The weak showing came down to a number of factors, including a PCI Express x4 interface that other graphics cards weren't limited to, along with just 4GB of VRAM. In short, this card was barely better than its predecessor and not worth the upgrade.

There are other, more minor issues, too. For instance, since it was designed for laptops and not desktops, it only came with two display outputs. This was an all-around awful graphics card, even for its price.

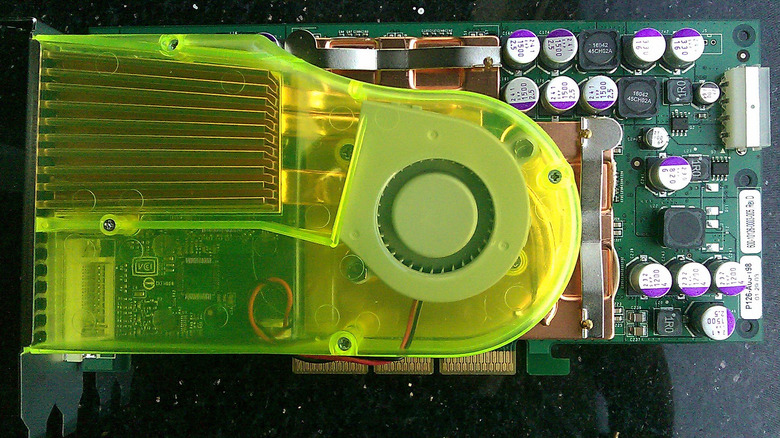

Nvidia GeForce FX 5800

Ah yes, the old hairdryer. Nvidia released this thing in 2003 for an MSRP of $299. To give you an idea of how far technology has come, this card came with 128 MB of GDDR2 memory over a 128-bit memory bus. For its time, it was pretty decent and could run modern games. The problem was that the card ran hot. It's a fairly common issue, especially among older GPUs. However, this one also came equipped with a lime-green cooler that was louder than some home appliances.

AnandTech tested the card in 2003 and found that the blower on it could get as loud as 77 dBA. For some context, that's about as loud as freeway traffic, garbage disposals, hair dryers, and some lawn mowers and power tools. In short, you could hear this thing from across your house half the time. Still, the design isn't too bad. It's reminiscent of a laptop cooler in some ways, with the highly directed flow of air shooting straight out of the back. It could keep the graphics card cool enough to function, but overclocking was out of the question entirely.

The card was so loud and obnoxious that Nvidia made a spoof video as a sort of apology to enthusiasts who had to deal with the thing. Tech giants don't poke fun at themselves like that anymore. In any case, the GeForce FX 5800 and its arcade-plastic green cooler earned their spot on this list.

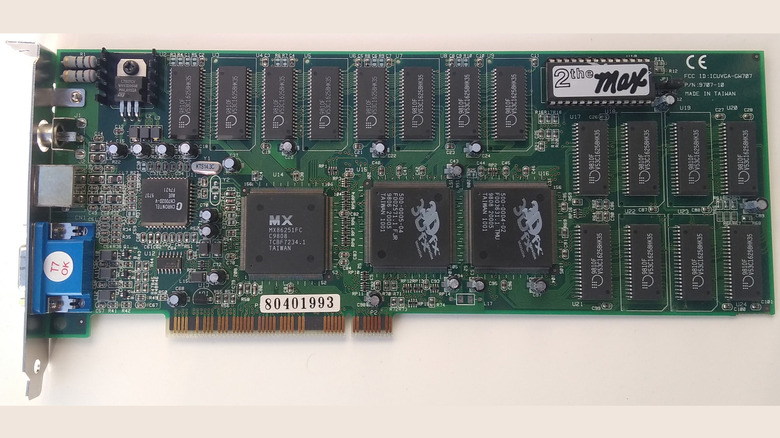

3DFX Voodoo Rush

3DFX was one of the larger players in the 1990s with its Voodoo series of graphics cards. During that time, it was known for having some of the best graphics cards available on the market. They were among the fastest cards of their era, and the brand's Glide API was used in a lot of then-modern titles, giving the cards excellent game support. At the time, the brand seemed unstoppable. Then it released the Voodoo Rush, which was the first stumbling block in an otherwise illustrious portfolio.

The Voodoo Rush had a variety of optimization issues that ultimately led to the card underperforming when directly compared to its predecessor. The prior Voodoo card was better in a lot of games, particularly "Quake 2" and "Quake 3" by fairly hefty margins. That would be like Nvidia releasing a new graphics card that performed double-digit percentages worse in Fortnite than the prior generation.

The company rebounded with the Voodoo2 and the Voodoo3, but by that time, ATI and Nvidia had caught up and become market leaders. After a few years of missteps, 3DFX folded in 2000, and Nvidia agreed to purchase its patents and technology at the end of that year, bolstering its own rise to industry leadership.

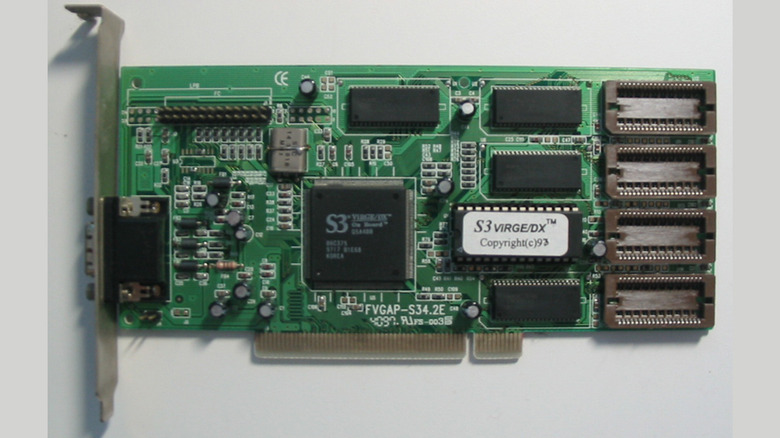

S3 ViRGE

S3 was another graphics player in the 1990s, and it excelled in graphics acceleration. For a period, S3 was best known for its value proposition. It rarely made the fastest cards on the market, but the price-to-performance ratio made them favorites for early enthusiasts building their rigs. Things were going well until the brand launched the S3 ViRGE, which is an acronym for Virtual Reality Graphics Engine.

Describing why this chip was bad takes a little explanation. When it was doing its primary function, it would perform quite well. However, as soon as the user added things like texture filtering or perspective correction, the card would tank in performance, especially at resolutions at or exceeding VGA (640 x 480). S3 also used its own API for 3D acceleration and as a result, didn't offer effective support for the more popular OpenGL. Since developers mostly didn't use S3's API, many games didn't work well with the ViRGE. This was at least partially fixed in later driver updates.

Since the card was so bad at 3D acceleration, enthusiasts of the time jokingly called it a 3D "decelerator". Fortunately for S3, competition was lax back then, and since the card did well with 2D graphics, it sold rather well. Eventually, S3 fell behind the likes of Nvidia and ATI, and that was that for the brand.

AMD Radeon VII

We return to modern times for our next entrant, the AMD Radeon VII. This graphics card is quite interesting if nothing else. It was sold as a graphics card to compete with Nvidia's RTX 20-series GPUs, and it shared the architecture of the MI50 GPU that AMD sold to data centers. This gave it some unique specs, such as its HBM2 memory. Despite its cool name and unique features, the Radeon VII eventually became a failure for AMD.

This GPU was designed to go head-to-head with the Nvidia RTX 2080 at the same price point of $699. It was unable to do that, putting up performance more on par with the prior generation's GTX 1080 Ti. Also, the graphics world was bouncing off the walls over ray tracing, which AMD famously omitted from the Radeon VII. So, while it was the same price and released around the same time, the RTX 2080 was superior in just about every way.

To make matters worse, there were known problems with the card's HBM2 memory, and many cards met an early grave via the infamous Error 43. Plus, AMD released the RX 5700 XT just months after the Radeon VII. For $399, you could get 90% of the Radeon VII's performance without the issues. AMD discontinued the Radeon VII less than half a year after its launch, putting a quick end to its mistake.

Nvidia GT 1030 DDR4

By the time Nvidia reached its GTX 10-series of GPUs, things were mostly on autopilot for the brand. You could get the GTX 1060, 1070, and 1080, each a step up in performance from the one before. However, Nvidia still apparently hadn't figured out budget-oriented cards, because the DDR4 version of the GT 1030 exists, and boy was it a bad one. For context, the rest of the 10-series, including the other variant of the GT 1030, used GDDR5 or GDDR5X memory, which offers far more bandwidth than DDR4.

Okay, so let's get the big issue out of the way immediately. Virtually every review of the DDR4 version of the GT 1030 called it a disgrace of a graphics card, and they were right. That one change in memory type reduced performance by as much as 65% when compared head-to-head with its GDDR5 counterpart. Gamers Nexus tested both and found that in Rocket League (a game that is not hard to run on modern hardware), the GDDR5 version averaged 63 FPS at 1080p with medium settings. The DDR4 version averaged 28 FPS. It was so bad, it's on our shortlist of cheap GPUs you should never buy.
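To put the Rocket League numbers above in perspective, here is a minimal sketch of the percentage math. The function name `percent_drop` is our own, made up for illustration, and the only inputs are the two averages quoted above.

```python
def percent_drop(faster_fps: float, slower_fps: float) -> float:
    """Return how far slower_fps falls below faster_fps, as a percentage."""
    if faster_fps <= 0:
        raise ValueError("faster_fps must be positive")
    return (faster_fps - slower_fps) / faster_fps * 100.0


# Averages quoted above for Rocket League at 1080p, medium settings.
faster_variant_fps = 63.0
ddr4_variant_fps = 28.0

drop = percent_drop(faster_variant_fps, ddr4_variant_fps)
print(f"The DDR4 variant is {drop:.1f}% slower in this test")  # 55.6%
```

So this particular test shows a drop of a little over half, which sits below the worst-case figure mentioned above.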

The even bigger sin was that the DDR4 variant was released a year later than the GDDR5 variant and for the same $79 price tag. Thus, it cost consumers literally nothing extra to simply opt for the superior card. We're not sure who the DDR4 1030 was for, and we'll likely never figure it out.

Nvidia GeForce G100

During our research, we wanted to find graphics cards with the lowest benchmark performance that are still comparable to modern GPUs. Well, say hello to the Nvidia GeForce G100 OEM Edition graphics card. We found this little guy while browsing TechPowerUp's benchmark comparison tool. Compared to every other card the publication has ever tested, the G100 is the slowest, with the GeForce 210 coming in second-slowest at just 10% faster.

So, how bad is the bottom of the benchmark comparisons? Well, the G100 boasts a heavy 3 FPS in DirectX 9 games, 3 FPS in DirectX 10 games, and 1 FPS in DirectX 11 games. We are relatively certain that an enterprising individual who built a PC to play Minecraft while in Minecraft gets a better framerate than the G100. It doesn't, but it's fun to make jokes. On the plus side, the single-slot card only required 35W to operate, meaning it didn't need any power cables.

Modern graphics cards really put the G100's glacial pace into perspective. The Nvidia RTX 5090 is just over 50,000% faster, while AMD's flagship, the 7900 XTX, is just over 29,000% faster. My desktop PC has a 7900 XT, which is 25,160% faster. Those are absurd numbers and they quite clearly communicate why this OEM-only card belongs on this list.
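Figures like "50,000% faster" are hard to picture, so here is a small sketch that converts them into plain multipliers. The helper name `percent_faster_to_multiplier` is hypothetical, and the inputs are the figures quoted above, with the two "just over" values rounded down to the stated number.

```python
def percent_faster_to_multiplier(percent_faster: float) -> float:
    """Convert 'N% faster' into 'X times the speed'. 100% faster means 2x."""
    return 1.0 + percent_faster / 100.0


# "Percent faster than the G100" figures quoted above (first two rounded down).
quoted_figures = {
    "RTX 5090": 50_000,
    "7900 XTX": 29_000,
    "7900 XT": 25_160,
}

for card, percent in quoted_figures.items():
    multiplier = percent_faster_to_multiplier(percent)
    print(f"{card}: roughly {multiplier:.1f}x the G100")
```

In other words, "just over 50,000% faster" means the RTX 5090 is a bit more than 500 times as fast as the G100.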

Any dual GPU

To round out the list, we'll go ahead and mention that just about every dual GPU on the market was mostly a flop. We mentioned the Titan Z by name because it is perhaps the most egregious example, but it's not the only dual GPU that ever hit the market. There are quite a few of them, ranging from the Nvidia GeForce 7950 GX2 and the 9800 GX2 to the Radeon Pro W6800X Duo and the aforementioned AMD Radeon R9 295X2. These GPUs weren't necessarily bad, but they also weren't very good.

The reasons why are numerous. Dual GPUs tended to be much more expensive than their single-GPU counterparts while not granting that much extra performance. They were never twice as powerful as single-GPU cards, and even when you used two graphics cards in tandem, be it via Nvidia's SLI or AMD's CrossFire, the performance boost was, at best, usually around 50%. We mentioned earlier how the Titan Z was $2,999 and the Radeon R9 295X2 was $1,499 at launch. These upcharges are fairly typical for dual GPUs.
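A rough sketch shows why that math rarely worked out for buyers. It is a back-of-the-envelope illustration, not a benchmark. It assumes a single-GPU card is the baseline at 1.0x, and that a dual GPU manages the best-case 1.5x suggested by the roughly 50% figure above. The function name `cost_per_performance` is made up for this example. The prices are the launch prices mentioned earlier in this article.

```python
def cost_per_performance(price_usd: float, relative_performance: float) -> float:
    """Dollars paid per unit of relative performance (lower is better)."""
    if relative_performance <= 0:
        raise ValueError("relative_performance must be positive")
    return price_usd / relative_performance


# Launch prices quoted earlier in the article.
GTX_780_TI_PRICE = 699.0
TITAN_Z_PRICE = 2999.0

# ASSUMPTION: single-GPU baseline is 1.0x and the dual GPU reaches a
# best-case 1.5x. These are illustrative values, not measured results.
single_gpu = cost_per_performance(GTX_780_TI_PRICE, 1.0)
dual_gpu = cost_per_performance(TITAN_Z_PRICE, 1.5)

print(f"Single GPU: ${single_gpu:.2f} per unit of performance")
print(f"Dual GPU:   ${dual_gpu:.2f} per unit of performance")
print(f"The dual GPU costs {dual_gpu / single_gpu:.1f}x as much per unit")
```

Even under that generous scaling assumption, the dual card costs far more for each unit of performance it delivers.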

Dual GPUs have also been plagued with issues, such as lower compatibility with modern games and various bugs and performance problems that are not common to single GPUs. In short, it was an idea that companies ran with for a while, but ultimately didn't work. Multi-GPU setups may yet get a renaissance, as Nvidia's VR SLI lets two GPUs split the work in VR, with each GPU rendering the view for one eye.