6 Coolest Features Of Apple's Vision Pro Headset

The Vision Pro is Apple's latest groundbreaking product, ushering in a new age of "spatial computing." It combines a fully immersive virtual reality canvas with an interactive augmented reality layer that enhances your surroundings. The result is a seamless transition between the physical and virtual realms, and Apple claims the experience is far superior to that of any other AR, VR, or mixed-reality headset on the market.

The Cupertino-headquartered giant calls the Vision Pro "the most advanced personal electronics device ever" and claims that more than 5,000 patents have gone into creating its unique and unmatched experience. At WWDC 2023, five top Apple executives, including CEO Tim Cook, and several engineers detailed the craft behind the Vision Pro in what was the longest segment of the event.

The Apple Vision Pro will cost $3,499 and will be available in early 2024. The months leading up to the market launch are meant to draw developers to the new visionOS platform, built from the ground up for this and future headsets from Apple. The Vision Pro is unequivocally cool, and besides the usual benefits of immersive video and gaming, it offers several other features that make for a unique experience. Based on the demos Apple showed, here are six of the coolest features of the Apple Vision Pro.

Rotating crown to increase or decrease immersiveness

The Apple Vision Pro features an immersive three-dimensional interface displayed on a tiny micro-OLED panel for each eye. The incredibly sharp displays offer realistic visual quality, and virtual objects rendered by the software are complemented by effects such as shadows cast over real objects around you. Together, these details give virtual elements a realistic presence, making them feel natural in your surroundings.

At the same time, the Vision Pro lets you choose the level of immersion with a rotating crown similar to the one on the Apple Watch. The crown helps you tune in or out of a virtual setting based on how attentive or focused you want to be.

For instance, if you are working and want to remain present for your colleagues, you can dial down the level of immersion. When watching a movie or playing a game, however, you can use the rotating dial to immerse yourself fully in virtual spaces called "Environments."

Environments are dynamic scenes of actual locations, captured volumetrically with high-resolution 3D cameras to make it appear as though you are physically present in the setting. The idea is to replace your natural surroundings with something more soothing so you can work in peace, watch a video, or play games without distractions.

Seamless eye tracking

The Apple Vision Pro lets you interact with the interface using only your eyes, voice, and hands. Your eyes play a crucial role in navigation and act as a mouse pointer, allowing you to point at any part of the immersive landscape simply by looking at it.

This is possible with infrared cameras and a ring of LEDs that project invisible light patterns onto your eyes. By detecting minute eye movements, the Apple Vision Pro can precisely determine where you are looking, without requiring you to move your head in the slightest. This is enabled by an initial calibration performed when you use the headset for the first time.
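To make the "eyes as a mouse pointer" idea concrete, here is a minimal sketch of how a gaze direction could be mapped to an on-screen target. It is not Apple's implementation: the `Window` type, the `gaze_target` function, the coordinate system (metres, with windows on flat planes facing the viewer), and all numbers are assumptions made for illustration only.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Window:
    """Hypothetical flat window facing the viewer, on the plane z = center[2]."""
    name: str
    center: Vec3   # (x, y, z) in metres; the viewer looks toward negative z
    width: float
    height: float


def gaze_target(origin: Vec3, direction: Vec3,
                windows: Sequence[Window]) -> Optional[Window]:
    """Return the nearest window hit by the gaze ray, or None if nothing is hit."""
    ox, oy, oz = origin
    dx, dy, dz = direction
    best, best_t = None, float("inf")
    for w in windows:
        cx, cy, cz = w.center
        if dz == 0:
            continue  # ray is parallel to every window plane
        t = (cz - oz) / dz  # distance along the ray to the window's plane
        if t <= 0 or t >= best_t:
            continue  # plane is behind the viewer, or a closer hit already exists
        hit_x, hit_y = ox + t * dx, oy + t * dy
        if abs(hit_x - cx) <= w.width / 2 and abs(hit_y - cy) <= w.height / 2:
            best, best_t = w, t
    return best


# Two assumed windows floating two metres in front of the viewer.
windows = [
    Window("browser", (0.0, 0.0, -2.0), 1.0, 0.6),
    Window("notes", (1.5, 0.0, -2.0), 1.0, 0.6),
]
straight_ahead = gaze_target((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), windows)
glance_right = gaze_target((0.0, 0.0, 0.0), (0.75, 0.0, -1.0), windows)
```

In this sketch, looking straight ahead selects the "browser" window and glancing to the right selects "notes", with no head movement involved. A real system would also have to smooth out jitter in the eye-tracking signal, which is omitted here.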

But Apple's fine-tuning goes far beyond sophisticated hardware. Sterling Crispin, a former neurotechnology prototyping researcher at Apple, described in a tweet some of the nuances that make this eye tracking possible.

The tiny cameras not only track slight movements in your pupils but also use them to predict when you are about to click. With the help of AI, Apple has closely studied physiological and emotional responses while developing its eye-tracking features to make the experience as immersive as possible.

Apple claims that eye-tracking data is confined to a separate background process and is not visible to third-party apps or websites. It does not say whether Apple itself can see and analyze that data to train its models as it gathers more of it from real-world usage.

Intuitive hand gestures to control the interface

Apple also takes an uncommon approach to controlling the interface on the Vision Pro. It uses physical cues from your hands to perform actions like clicking, scrolling, or zooming in and out, eliminating the need for a bulky external controller that engages your wrist. For instance, you can tap your index finger against your thumb to click an object or flick a finger to scroll. The headset also supports gestures to resize or move open windows and to zoom in or out on media. Apple says this allows for a natural-feeling experience when interacting with virtual objects.

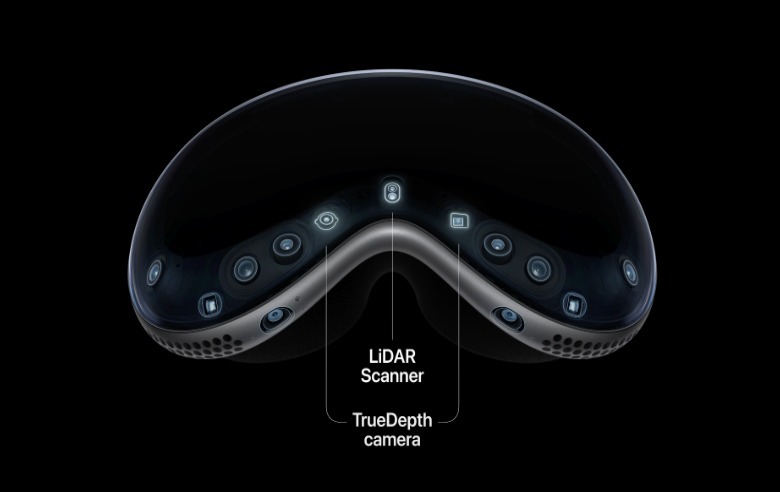
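The pinch-to-click gesture described above can be illustrated with a short sketch. It assumes a hand tracker that already reports 3D positions for the thumb tip and index fingertip in metres; the `PinchDetector` class and both distance thresholds are hypothetical choices for this example and do not reflect how Apple's software works.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class PinchDetector:
    """Turns fingertip positions into click/release events (illustrative only)."""
    press_below_m: float = 0.015    # assumed: fingertips closer than 1.5 cm count as a pinch
    release_above_m: float = 0.030  # assumed: wider gap required to release, to avoid flicker
    pinching: bool = False

    def update(self, thumb_tip: Vec3, index_tip: Vec3) -> Optional[str]:
        """Feed one tracking frame; returns "click", "release", or None."""
        gap = math.dist(thumb_tip, index_tip)
        if not self.pinching and gap < self.press_below_m:
            self.pinching = True
            return "click"
        if self.pinching and gap > self.release_above_m:
            self.pinching = False
            return "release"
        return None


# Four assumed frames: fingers apart, touching, slightly apart, fully apart.
detector = PinchDetector()
thumb = (0.0, 0.0, 0.0)
events = [
    detector.update(thumb, (0.05, 0.0, 0.0)),
    detector.update(thumb, (0.01, 0.0, 0.0)),
    detector.update(thumb, (0.02, 0.0, 0.0)),
    detector.update(thumb, (0.04, 0.0, 0.0)),
]
```

Using two thresholds rather than one means a fingertip hovering near the boundary does not fire a burst of clicks; here `events` ends up as `[None, "click", None, "release"]`.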

This is possible thanks to an extensive array of sensors that capture visual data. These include the primary front-facing cameras alongside downward- and side-facing cameras, one each for the left and right sides of the headset. As a result, your hand can rest in your lap and need not be held up in the air while interacting.

While the cameras alone give a sense of where your hand is located, the Vision Pro has additional sensors that improve its real-time perception of 3D depth, even in dim lighting. These include infrared illuminators that act as floodlights, along with a LiDAR scanner and a TrueDepth camera.

Apple did not say how hand gestures work inside games, but it will be interesting to see how quickly and reliably the Vision Pro can read hand movements in real-life scenarios, especially against complex backgrounds.

Seemingly transparent display

Apple wants to provide an engrossing experience with the Vision Pro without alienating or isolating you from the people around you. It emphasizes that your eyes are essential to keeping you present in a conversation, which is why the Vision Pro shows your eyes through the headset when you are not immersed in an alternate reality. The headset appears to have a translucent screen that shows your eyes, but this is only a digital representation displayed on an external OLED display. Called "EyeSight," the feature turns on automatically as a person approaches you.

The cameras inside the Vision Pro's eyepieces capture how your eyes move while you talk to another person. Meanwhile, the person wearing the headset can see others, as well as their surroundings, through the suite of cameras relaying a live feed inside the headset.

The external display will also send a clear signal to others when you are using the Vision Pro. The view of your eyes will turn hazier when you are engaged in an augmented reality experience and will be replaced by animations when you are wrapped in a more captivating virtual reality setting. That holds only while others are at a distance: when someone comes close or starts talking to you, the headset brings them into view within your virtual space.

Realistic avatars in a 3D FaceTime

Apple also introduced a new way to use FaceTime on the Vision Pro, where the headset places other participants in virtual tiles floating in your surroundings. You will be able to resize each tile or relocate it based on your preferences. FaceTime will also use the Vision Pro's spatial audio capabilities to deliver each participant's audio from the same position as their tile, and it will let you share screens from web browsers and apps.

However, the most exciting aspect of spatial FaceTime is not how it shows other participants but how it shows you. Apple wants to spare you from holding a phone in front of your face, or relying on any other device, when you FaceTime while wearing the Vision Pro on your head. To do this, the Vision Pro creates an "authentic representation" of you with the help of the TrueDepth cameras, much like iPhones and iPads do with Memoji.

Apple says it will use neural networks to create a lifelike persona that can be displayed on the FaceTime call instead of your actual face. This persona will match your facial expressions, eye movements, and hand gestures, all recorded with the onboard sensors. Additionally, while regular iPhone, iPad, and Mac users will see a 2D depiction of your face, other Vision Pro users will see a 3D version of your persona. This 3D image will have volume and depth, making it look more realistic than on other devices.

Apple Vision Pro is an independent computer

As Apple puts it, the Vision Pro is designed to free your creativity from the confines of a single display. The headset runs a new operating system, visionOS, and Apple claims it will be easy for developers to port apps built for iOS or macOS. You will be able to run multiple apps on the Vision Pro at once, placing them either stacked or next to each other in a virtual space. The headset even pairs with your Mac and automatically projects its display for a private session.

Apple is confident in the headset's performance because the Vision Pro runs on an M2 chip, which also powers multiple MacBook models and the latest iPad Pro. In addition, the headset is equipped with a second chip, the Apple R1, designed specifically to process real-time data from its 12 cameras, five sensors, and six microphones, thereby virtually eliminating the lag that may otherwise lead to motion discomfort.

The Vision Pro can also serve as a 3D camera with spatial audio capture, allowing you to record memories and revisit them later on the headset or any other Apple device. For security, the headset features an iris recognition system called "Optic ID" that allows only its owner to unlock and use the headset.

In all, Apple's new headset opens countless new possibilities for a spellbinding experience, making it an extremely desirable futuristic device, provided you are not among the majority dissuaded by its steep price tag.