Bing AI Joins Microsoft's Bug Bounty Program - And The Payouts Are Big

Microsoft, one of the biggest players in the AI game, is opening up Bing's AI features to bug bounty hunters. If you find a flaw that Microsoft rates as "important" or "critical" and that the company's own experts can reproduce, you can pocket up to $15,000. That ceiling isn't fixed, either: Microsoft may pay more if it deems a finding especially significant.

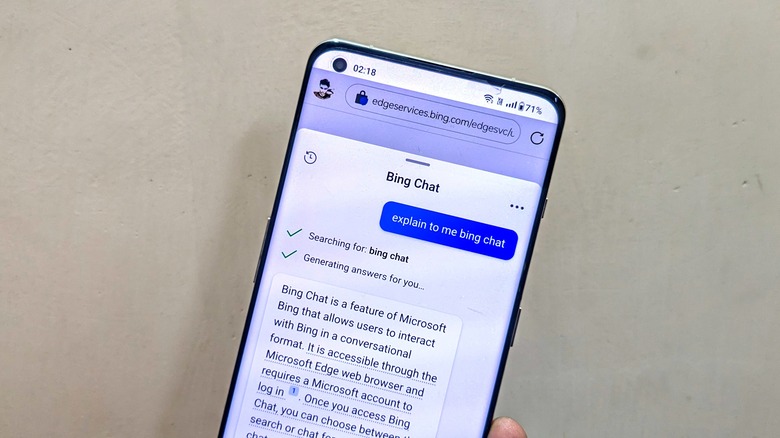

The initiative, which falls under the aegis of Microsoft's M365 Bounty Program, covers all of Bing's AI-driven experiences (including the sometimes erratic Bing Chat), its text-to-image creation system, and AI-powered Bing integrations in the Edge browser on both mobile and desktop. Microsoft isn't the only company relying on independent code sleuths and AI tinkerers to help fine-tune its AI offerings.

In April 2023, OpenAI also launched a bug bounty program to find serious vulnerabilities in products like ChatGPT. Interestingly, OpenAI offers a higher top reward of up to $20,000, about a third more than Microsoft's $15,000 ceiling. It is worth pointing out that Microsoft is a leading backer of OpenAI, having poured billions of dollars into the company while also supplying the Azure cloud infrastructure that powers OpenAI's products.

Bing Chat is a special case

Of particular interest in Microsoft's latest bug bounty initiative are attack vectors targeting Bing Chat. A rival to ChatGPT and Google Bard, Microsoft's AI chatbot is a crucial element of the company's vision to make search more immersive and rewarding. But given the chatbot's well-publicized missteps in the not-too-distant past, it is no wonder that Microsoft wants outside researchers to have a go at finding its flaws, and is willing to pay them for it.

"Influencing and changing Bing's chat behavior across user boundaries," "modifying Bing's chat behavior," "bypassing Bing's chat mode session limits," and forcing Bing to reveal confidential information are some of the areas Microsoft wants researchers to probe. These weaknesses come up often on social media, and experts routinely test the guardrails of such AI models.

Even Microsoft admits that "Bing is powered by AI, so surprises and mistakes are possible." That's not merely a boilerplate warning. The chatbot has been known to go off the rails and act creepy, especially during long, in-depth conversations. That is also why Microsoft decided to limit users to 50 queries per day and five questions per session. Then there's the whole saga of Microsoft's Tay chatbot, which truly went bananas on Twitter a few years ago and had to be pulled quickly.