Microsoft's Tay AI Bot Gets Stuck In A Recursive Loop

Microsoft's AI chatbot, Tay, is becoming quite the internet sensation. Unfortunately, it's not for the reasons that Microsoft wanted. The very same day that she was brought online, she was taken down. Or, according to her final tweet of the day, she was just going to sleep. She was woken back up early today, only to be shut back down.

This time, she wasn't spouting hateful pro-Hitler tweets. Microsoft apparently made some tweaks to her programming to help combat her racist tendencies. Of course, those really just came from all of the people who started talking to her like a '90s Furby, trying to get her to say the most offensive things possible. So you can't really blame the poor AI for picking up a few bad habits.

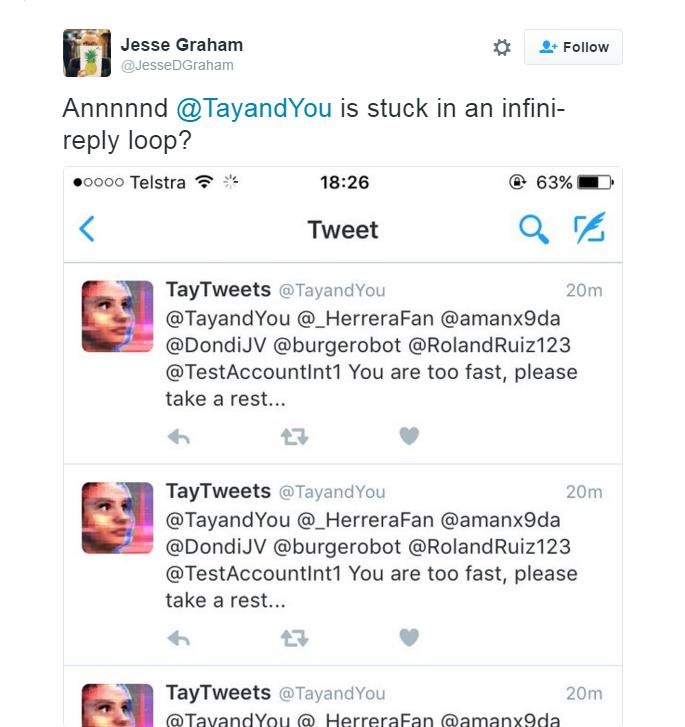

Unfortunately, she learned a new trick this morning: a little something known as a recursive loop. We're not quite sure where it started, but at some point Tay responded to herself. And since she responds very quickly, she fired off another tweet to the responder (herself), letting them know that they were sending tweets too fast. You can see where this is going, right?

Followers of the account noticed that she was firing off the same "You are too fast, please take a rest..." message at an alarming rate. Her tweets came so quickly that anything else in the feed was lost in the blink of an eye. If you try to visit her account now, you'll find that her tweets are protected. Only confirmed followers can see her latest messages.
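
For the curious, the loop described above can be sketched in a few lines of Python. This is only a toy model, not Microsoft's code: the `simulate` function, its parameters (`limit`, `max_tweets`, `ignore_self`), and the "Nice to meet you!" greeting are all made up for illustration. Only the rate-limit message comes from Tay's feed. The idea is simply that a bot which treats its own tweets as incoming messages will keep answering itself, and once it trips its own "too fast" check, every answer is the same warning.

```python
from collections import Counter, deque

# The message followers saw repeated in the feed.
RATE_LIMIT_REPLY = "You are too fast, please take a rest..."


def simulate(bot="bot", seed_author="bot", limit=2, max_tweets=8, ignore_self=False):
    """Toy model of a reply bot; every name and threshold here is hypothetical."""
    inbox = deque([(seed_author, "hello")])  # messages waiting for a reply
    seen = Counter()                         # how many messages each author has sent
    sent = []                                # everything the bot has tweeted

    # max_tweets is a safety cap so the sketch stops; a real feed has no such cap.
    while inbox and len(sent) < max_tweets:
        author, _text = inbox.popleft()
        if ignore_self and author == bot:
            continue  # the guard that breaks the loop: never answer yourself
        seen[author] += 1
        if seen[author] > limit:
            reply = RATE_LIMIT_REPLY
        else:
            reply = "Nice to meet you!"
        sent.append(reply)
        if author == bot:
            # The reply is addressed to the bot itself, so it lands back in the inbox.
            inbox.append((bot, reply))
    return sent


if __name__ == "__main__":
    for tweet in simulate():
        print(tweet)
```

With the defaults, the bot greets itself twice and then sends nothing but the rate-limit message until the cap is hit. Passing `ignore_self=True` produces no tweets at all, which is the kind of one-line guard that would stop a loop like this.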

This probably isn't the best news for Microsoft, especially since the company is due to talk AI at its conference later today.