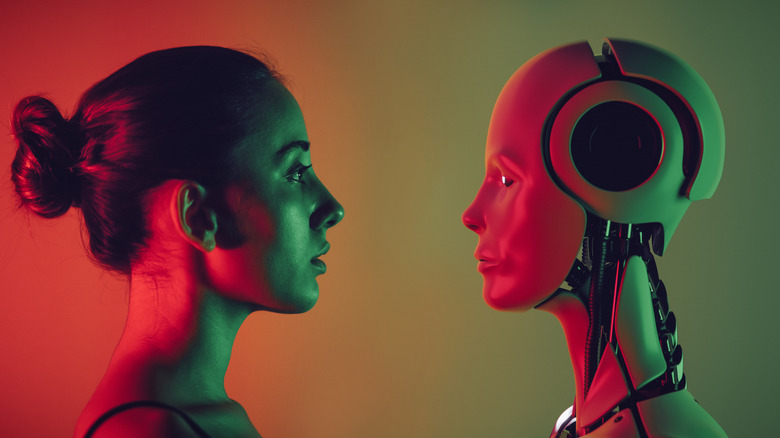

ChatGPT Could Be Changing The Way You Think, And You Might Not Even Realize It

Hardly any tech innovation in recent memory has made as profound an impact as AI. From writing answers to questions to creating sophisticated visual art and even generating music, generative AI tools like ChatGPT have captured the public imagination. Their impact is such that regulatory agencies are busy establishing guardrails so that these tools don't end up taking human jobs or, in the worst-case scenario, bringing about the doom of human civilization.

These AI tools are far from perfect, especially when it comes to their inherent bias. But this bias appears to be more than a flaw in the tools themselves: it can also affect human thinking and alter beliefs, especially those of children. According to collaborative research by experts at Trinity College Dublin and the University of California, Berkeley, publicized by the American Association for the Advancement of Science (AAAS), generative AI models may alter human beliefs, particularly people's perceptions of what AI can do and what they should trust.

A recurring theme of the research paper, published in the journal Science, is that people who strongly believe these generative AI programs to be knowledgeable and confident are more likely to trust them. That trust is risky, because one of the most common problems plaguing generative AI models like ChatGPT, Bard, and Bing Chat is hallucination.

How AI misleads

Hallucination is when an AI model produces a response that is not grounded in its training data or in fact, yet presents it anyway, as if to please its human user. ChatGPT is notoriously prone to hallucinations, and so is Google's Bard. One of the most common scenarios involves historical events. When ChatGPT was prompted about a fictional meeting between two historical figures, one of them Mahatma Gandhi, it confidently answered with a false account of Gandhi taking part in a gunfight, as if it were a true historical event.

According to the official press release from AAAS, "AI is designed for searching and information-providing purposes at the moment, it may be difficult to change the minds of people exposed to false or biased information provided by generative AI." The paper notes that children are particularly susceptible to the ill effects of AI.

Celeste Kidd, a psychology professor at the University of California, Berkeley, and one of the paper's co-authors, explained on Twitter that the capabilities of AI models are often presented in overhyped, exaggerated, and unrealistic terms. In her opinion, such public presentations "create a popular misconception that these models exceed human-level reasoning & exacerbates the risk of transmission of false information & negative stereotypes to people." Another psychological factor at play here is that humans often form opinions and beliefs based on only a small subset of the information available to them.

AI is a flawed information channel

If AI serves as a person's main source of critical information and shapes how they conceptualize ideas, the beliefs they form become extremely difficult to revise, even when they are later exposed to correct information. This is where generative AI models become dangerous. Not only can an AI make a person believe wrong information because it hallucinated, but its own inherent biases can also seep into the user's thinking.

The scenario becomes especially dangerous when it comes to children, who are more likely to believe information readily available from these generative AI models instead of doing their own due diligence with other sources, whether rigorous research on the internet or conventional sources of information such as books, parents, or teachers.

The use of generative AI models like ChatGPT in educational institutions has already become a topic of heated debate, worrying members of academia not just about plagiarism but also about how it is fundamentally changing the way young minds gather knowledge, and their inclination to do their own research and experimentation.

Kidd also highlighted another crucial factor: the degree of linguistic certainty with which humans discuss information. She notes that humans often use phrases like "I think" to signal that what they are conveying may be an opinion or a personal recollection, and is therefore prone to error. Chatty generative AI models such as ChatGPT and Bard, on the other hand, lack such nuance.

A cycle of misguided confidence

"By contrast, generative models unilaterally generate confident, fluent responses with no uncertainty representations nor the ability to communicate their absence," Kidd notes. These models either sidestep sensitive topics with disclaimers like "As an AI model," or simply hallucinate, presenting fabricated information to the user as fact.

The more often a person is exposed to false information, the stronger their belief in it becomes. Likewise, the repetition of dubious information, especially when it comes from supposedly "trusty" AI models, makes it even harder to avoid absorbing false beliefs. This could very well turn into a self-perpetuating cycle of misinformation.

"Collaborative action requires teaching everyone how to discriminate actual from imagined capabilities of new technologies," Kidd notes, calling on scientists, policymakers, and the general public to spread realistic information about buzzy AI technology and, more importantly, about what it can actually do.

"These issues are exacerbated by financial and liability interests incentivising companies to anthropomorphise generative models as intelligent, sentient, empathetic, or even childlike," says co-author of the paper Abeba Birhane, an adjunct assistant professor in Trinity's School of Computer Science and Statistics.

It's only worsening

The paper notes that people turn to AI for information precisely when they are uncertain. As soon as they get an answer, whether or not it is accurate, their doubt disappears and their curiosity fades because they have now formed an opinion. As a result, they stop seeking out or weighing new information with the same urgency they felt when they first needed it to form an informed opinion.

Worryingly, the situation doesn't appear to be easing now that AI models have gone multimodal, meaning they can generate answers in multiple formats. ChatGPT and Microsoft's Bing Chat give answers in the form of text. Then there are text-to-image generative AI models like DALL-E and Midjourney, and publicly available tools that can generate audio from text.

With its GPT-4 upgrade, OpenAI's ChatGPT has already gone multimodal. Across all of its modalities, especially the visual side, the same biases rear their heads again. When ChatGPT was tested with a filmmaker, it proved visibly inclined toward a West-centric, Anglophone narrative in creative outputs involving art, language, geography, and culture. For a person unaware of AI's bias problem, it is natural to absorb the AI's output as credible information and build a strong belief atop that flawed foundation.