Can AI Chatbots Like Bing Chat Really Experience Hallucinations?

If you've been on the internet at all in 2022 and 2023, you'll have seen a lot of hubbub about AI chatbots like ChatGPT and, more recently, Microsoft's Bing AI. These AI systems combine natural language processing with what they learned from training on huge data sets to interact with users. They're a far cry from the primitive chatbots you might have encountered in the early days of the internet. Microsoft brought these AI smarts to its infamous search engine with Bing Chat, which launched on February 7, 2023. It connected Bing to ChatGPT-style technology, creating a live, internet-connected chatbot that could answer questions using information it gathers in real time.

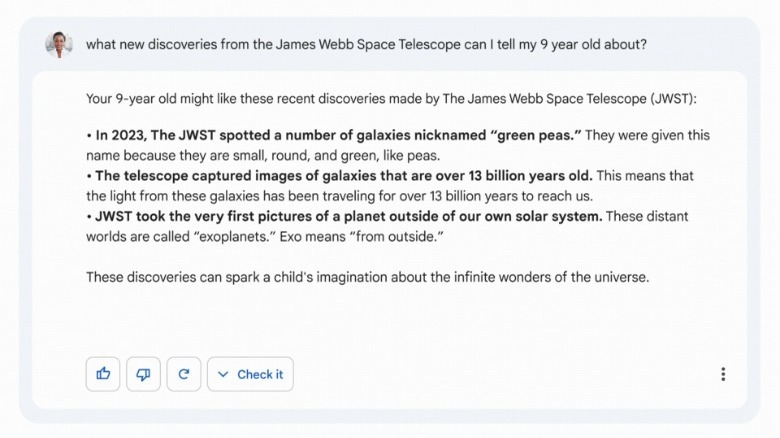

The AI systems we're seeing pop up are incredibly convincing in their delivery, and the information they serve seems accurate, but the longer we spend tinkering with them, the more the flaws pile up. People have been using AI to write code, do homework, and even write articles and video scripts, with varying degrees of success. One of the biggest problems with text-generating AI models is known as "hallucination," and it's quickly taking the sheen off the otherwise revolutionary tech.

AI conjures up fake facts, sources, and bizarre hands

One glaring issue many users noticed when using tools like Bing Chat and ChatGPT is the tendency of these AI systems to make things up. As Greg Kostello explained to Cybernews, an AI hallucination occurs when an AI system confidently presents information that has no basis in reality. A common mistake spotted early on was the invention of sources and citations. In a demonstration by The Oxford Review on YouTube, ChatGPT cites a research paper that doesn't exist. The referenced journal is real, but the paper and its authors are nowhere to be found.

In other examples, Microsoft's Bing Chat made up information about vacuum cleaners during its launch demonstration, while a Reddit post appears to show Bing Chat having a minor existential crisis.

AI hallucinations aren't limited to text-generating chatbots, though. AI art generators also hallucinate in a sense, but in their case, the errors tend to show up as misrepresented anatomy. This often manifests as human characters with an ungodly number of fingers, as seen in this post by u/zengccfun on r/AIGeneratedArt on Reddit, or as designs and architecture that simply don't make sense.

Why do AI systems hallucinate?

The issue with AI generators, whether text-to-image or language models, is inherent to how they are trained and how they generate responses. According to The Guardian, AI is trained on content from the internet, which it uses to gain knowledge and context on a variety of topics. When a user enters a prompt into something like ChatGPT, it draws on what it learned during training to generate a response that fits the context it has. As cognitive scientist Douglas Hofstadter explains in The Economist, AI is not conscious or sentient, even if it has tried its best to convince some of us otherwise.

According to Science Focus, these AI hallucinations stem from a fundamental lack of understanding of the subject matter: the AI isn't drawing a hand because it knows how anatomy works, it's drawing a hand because it's been trained on what a hand looks like. These mistakes are concerning because they could cause serious problems in critical AI applications like self-driving vehicles, journalism, and medical diagnostics, but they may also offer some solace to those worried about their livelihoods. The inaccuracies and hallucinations of AI mean that it probably isn't coming for your job just yet.