4 New Features You Should Check Out ASAP After Updating To iOS 26

With the arrival of the iPhone 17 series, Apple is also rethinking the software experience it serves on its phones. This time around, the overhaul is massive, starting with a redesigned Liquid Glass interface and a bunch of new intelligent features that focus more on practical benefits than snazzy additions. Think of them as quality-of-life upgrades.

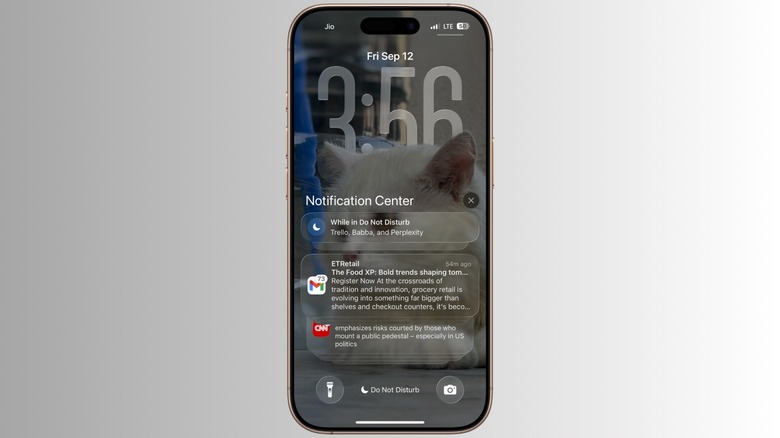

I have been running iOS 26 since the first developer build dropped after the WWDC event, and shifted to the public beta version a few weeks later. The most striking element of the update has been the aesthetic overhaul. It started off as a pretty controversial decision, but following a healthy number of refinements, it has grown on me. The whole interface, now draped in a translucent cover, looks stunning. The animations feel smoother, and every aspect of the UI, from the lock screen and notification bundles to the Settings and Messages apps, feels unique.

I highly recommend that, once you install the update on your phone, you jump to the theme customization dashboard and pick the "clear" preset. Next, move to the lock screen setup page, pick a picture, and enable the spatial effect to see the image in a dynamic 3D view as you move the phone in your hands. Additionally, the new clock preset looks stunning, and it can automatically adjust its height as you interact with the notification cards. But there's more to iOS 26 than shiny new design elements, and what follows is a list of the four most rewarding changes that you should make the most of.

Don't sleep on the humble Messages app

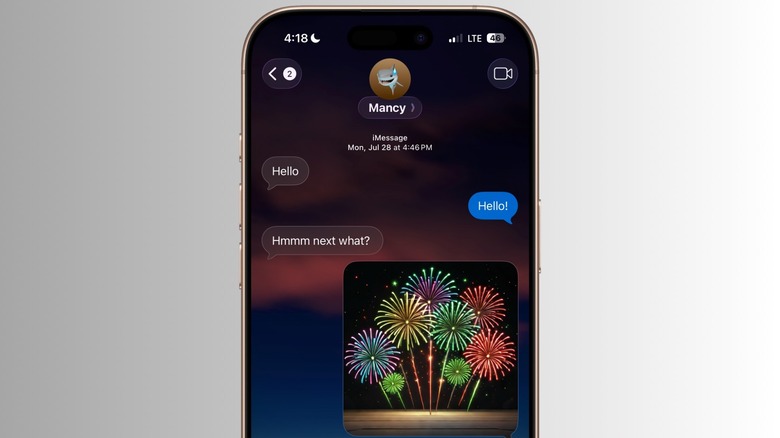

The Messages app is one of the biggest reasons loyalists stick with an iPhone in Apple's home market. Such is the schism that it has stirred a whole green-blue bubble war. Apple is not losing any quarters anytime soon, but in iOS 26, it gives users more than one reason to stick with the built-in Messages app. On the more playful side of things, you can now set a custom background for each chat. Additionally, you can now launch a poll in a chat, a feature that has been available in other chat apps for a while now.

The screening feature, which is landing in the dialer app, will also be available in the Messages app. The app gets a cleaner view, where all the messages from your friends and family are neatly catalogued in a unified place, so that you have easy access to them. All the messages from people who are not in your contacts list are parked in a separate section called Unknown Senders, while Transactions and Promotions get their own space.

On the functional end, the app is getting support for live language translation. When you are engaged in a conversation where both parties speak different languages, the chat bubble will automatically translate the messages for the other side. The only caveat is that live translation only works on phones that are ready for Apple Intelligence. Moreover, when your phone is connected to the car's infotainment system via CarPlay, you can respond to texts with Tapback.

A smarter phone app

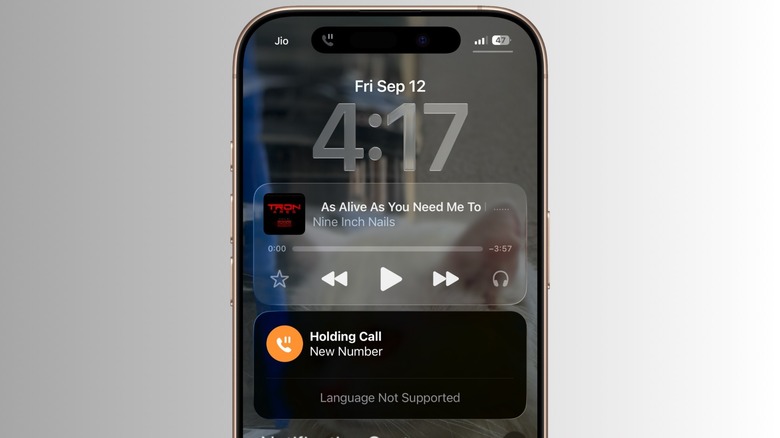

Apple didn't go too heavy with splashy AI features in iOS 26, but it utilized those chops in a few unexpected but practically rewarding places. The Phone app happens to be one of those destinations. Apple has finally introduced a new screening feature for voice and FaceTime calls. When you get an incoming call from an unknown number, the onboard assistant takes the call on your behalf and then shows a brief transcript of what the caller is saying on the screen. Only once the identity of the caller is verified does the phone ring (or vibrate) and alert you.

In the same vein, the Phone app has landed a new Hold Assist feature for calls where the other party has put you on hold and you have to wait on the line. The idea is pretty simple. As soon as your call is put on hold, the onboard assistant takes over in the waiting line. When a human operator is detected on the other end, it alerts you about their availability, and you can resume the conversation.

The Phone app is also getting treated to a new translation feature to assist with bilingual conversations. Once enabled, it shows a chat bubble with transcripts and a translated version of the speech. This feature works for normal voice and FaceTime calls, and supports English, French, German, Portuguese, and Spanish. Some of these features are already available on Android phones, especially those that run on-device Gemini Nano, such as the Samsung Galaxy S25 Ultra.

Visual Intelligence with screen awareness

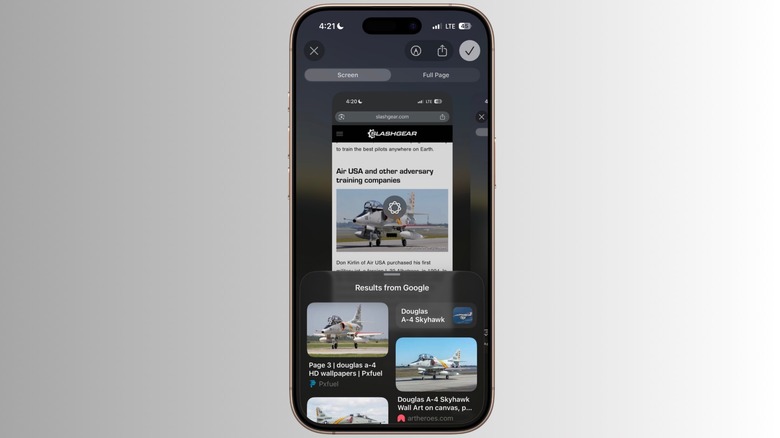

Think of it as an AI assistant with eyes and ears. So far, the predominant way of interacting with AI chatbots has been text back-and-forth. Tricks like ChatGPT Voice mode and Gemini added a new dimension to human-machine interactions. Soon, the multimodal nature of these AI assistants extended to pictures, the camera feed, and finally, to whatever it is that appears on your phone's screen. Visual Intelligence on iPhones is a culmination of those upgrades, and in iOS 26, it's better than ever.

So far, Visual Intelligence has allowed users to point their iPhone's camera at the world around them, get insights, and even take intelligent action. For example, when you tap on a business name appearing in the camera view, the AI-powered feature suggests actions such as viewing operational hours, placing an order, or making a reservation. It can also help identify plant and animal species, add a calendar entry directly from a poster, translate text, and start a context-aware action such as sending an email or calling a phone number.

With the arrival of iOS 26, Visual Intelligence gains on-screen awareness by adding a Highlight to Search feature. All you need to do is take a screenshot and tap on the new Highlight to Search option. Next, highlight an item by drawing around it with your finger, and then pull up the drawer at the bottom of the screen for more details. The whole system is context-aware, which means you will get matching results from sources such as Google Search and Etsy, along with actions such as one-tap calling or opening a URL.

Push Siri with its upgraded AI brain

Apple is currently in serious turmoil with its AI ambitions, which has left Siri (and iOS) at a serious disadvantage compared to products such as Gemini, which is deeply integrated within the Android experience. The "LLM Siri" overhaul is only due for arrival in 2026. Meanwhile, Apple is even talking with Google and Anthropic to create a version of Siri running atop Gemini and Claude, respectively, that will run on Apple's private compute servers. But you won't exactly be left out when it comes to experiencing the best of generative AI as long as your phone runs iOS 26.

Apple has confirmed that iOS 26 shifts the inherent architecture of Apple Intelligence and upgrades to GPT-5, the latest AI model from OpenAI that was released a few weeks ago. To recall, the GPT architecture powers experiences such as the ability to offload complex Siri queries to ChatGPT seamlessly, using a built-in extension system. Additionally, it brings Writing Tools to life, which allows users to refine, fix, summarize, and adjust written text. ChatGPT also lends a hand with creating images. Finally, the Visual Intelligence feature described above is also powered by ChatGPT.

And let's not forget the inherent benefits of GPT-5. Broadly speaking, the latest AI model is faster, smarter, and less prone to making up facts, a problem technically known as hallucination. OpenAI also claims that GPT-5 is better at reasoning, "coding, math, writing, health, visual perception, and more."