Adobe Generative AI: What Is It, And How Does It Work?

Adobe's generative AI technology holds enormous potential for enhancing creative work, but for some that promise might be concealed by the newness of the technology and some confusing branding issues. At its heart, Adobe's version of generative AI is designed to take simple, plain English text descriptions and translate them into usable images and documents. It also includes tools to make images more usable by eliminating unnecessary distractions within the image or expanding images for particular uses. However, there's a lot going on here and a lot more planned, so any quick generalization about it ends up being too narrow.

The company is pitching its AI products, in part, as workflow and productivity enhancers, with an end goal of refinement. But what, exactly, does that mean? Refinement is one of those odd words with mutually exclusive meanings. It can mean a process of improvement or signal a high-quality final state. Adobe's generative AI aims to do both. Users can explore creative options quickly, then iteratively refine and polish those ideas into a usable image. Designers, art directors, and other creatives can use these tools to radically speed up both brainstorming and development processes before using the fully editable output to create a final product.

Adobe's undifferentiated mass of branding swirls around its AI efforts. Did you wake up this morning planning to use Generative Fill in Photoshop via Firefly using Sensei? Probably not, but maybe you should! So let's figure this out.

Adobe Sensei makes (almost) everything possible

Sensei is Adobe's umbrella term for the overall AI technology used across its products. This probably isn't a name you need to think much about, since you will likely use it directly only through specific instances of the Adobe generative AI tech, as featured in Adobe's Firefly.

However, Sensei, also referred to as Sensei GenAI, will also be focused on Adobe's Experience Cloud efforts, tools designed to enhance user experiences and marketing workflows like data analysis, content management, and even commerce solutions. For enterprise organizations, this will include tools like a more flexible customer data platform, natural language tools to enhance internal and customer communication, more focused user experience journeys, and more detailed insights than ever before.

What this strategy seems to be pointing to is Adobe's intent to use AI on all sides of its business processes and practices. Need a EULA? Let Sensei draft it. Want to sell bananas to people worried about contaminated baby goods? Sensei can point you to the most likely targets. Looking for customization based on region or specific audience demographics? Sensei again. It can do anything because Adobe clearly plans to shoehorn it into everything, and there are good reasons to believe it will work.

Firefly is a standalone generative AI product you can use today

If you have an Adobe ID, you can sign into the Adobe Firefly Beta right now and start benefiting from the transformative impact of AI on creative industries — or at least experiencing it, as the true benefits might only come later. The beta software can't be used for commercial purposes, which rather limits its utility, but you can certainly begin to get a handle on how it fits into your creative workflow.

It could fit in a number of ways, and today Firefly is a collection of tools aimed squarely (and unsurprisingly) at image creation and manipulation. We'll touch on most of those individually below. The larger context of Firefly, though, is that it's this standalone website, it's the same technologies folded into Adobe desktop software and third-party products, and it's part of some as-yet-undefined future plan to have multiple varieties of Firefly for different uses.

Adobe puts Firefly into a more business-focused context when discussing enterprise uses for generative AI. There, the company focuses not only on the creative aspects of the software but on efficiency and productivity, return on investment, workflow enhancement, automation, and ethical use of intellectual property. One concrete difference between enterprise and other uses is that Adobe plans to allow enterprise users to train on their own content libraries to better customize the results.

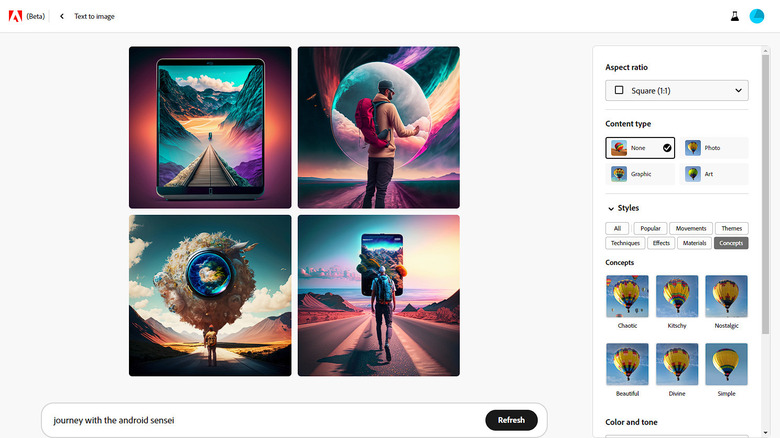

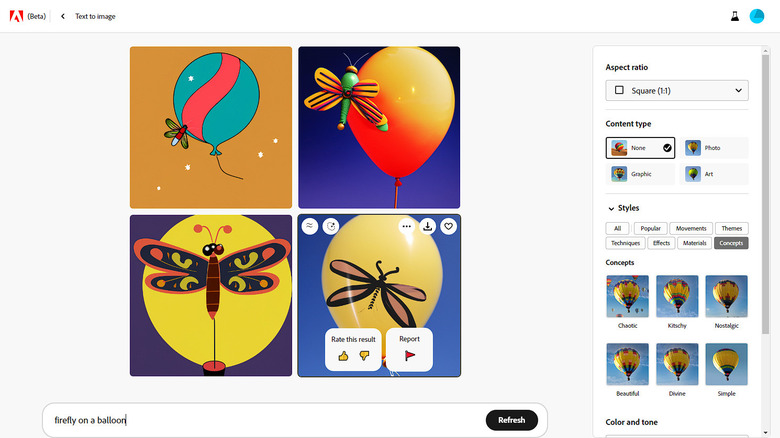

Create unpredictable art with text to image

There's no shortage of good AI image-generation tools, but Firefly's text to image feature has (or will have) a clear advantage. Feed it a text prompt and it regurgitates an image, like most AI image creators, but Firefly promises complete copyright clearances for the created image because it is trained only on material Adobe can clearly license. That training is somewhat limited, though, which can be good from a legal point of view and perhaps a bit stifling from a creative point of view.

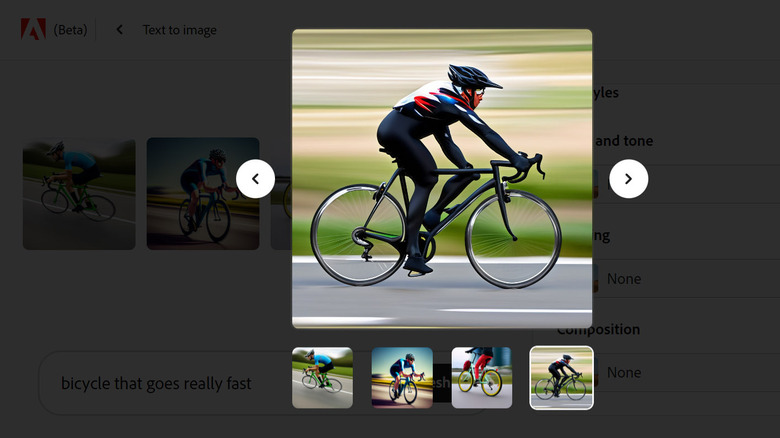

At a glance, it's not immediately clear that much of the Firefly output is usable, which probably explains the beta classification. The prompt "bicycle with turbocharger" generates a few bizarre and geometrically impossible literal interpretations. "Turbocharged bicycle" gives you some stylized bike wheels but nothing else, and "bicycle that goes really fast" renders a few drawings of standard racing bicycles that fall apart where the riders' limbs meet the bike's pedals and handlebars. In every case, the hands and feet are distorted nightmarishly, in the manner of new AI in general.

The AI has clearly created an image where many such images already exist but has done it pretty badly. Somewhere along the way, the Art tag added itself to the search, and removing it from the "bicycle that goes really fast" prompt results in slightly less terrifying images but with the same problems — especially the one with a cyclist's left arm terminating in a calf and foot. The right arm is normal, if in an unlikely position.

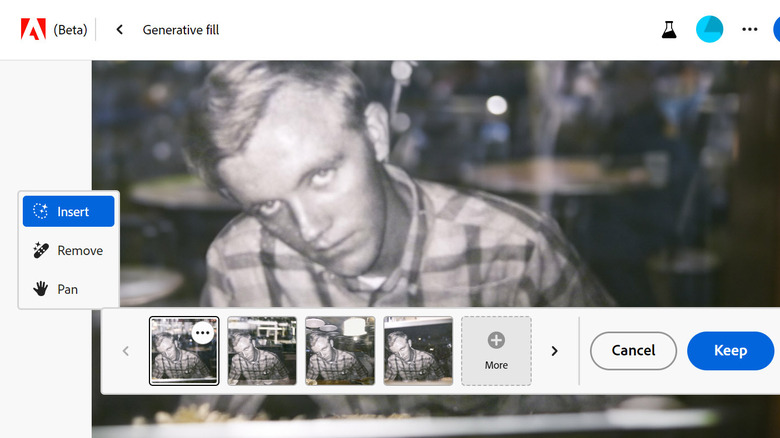

Create details and context from whole cloth with Generative Fill

Generative Fill is a tool that changes, extends, and potentially deep-fakes an existing image based on your description of the desired change. Add to or swap out a background, change the dress or appearance of your subjects, swap out objects — the possibilities are almost limitless. Unfortunately, today the compositing suffers a bit from some limitations that will probably work themselves out over time.

Hands-on use of the web-based version of Firefly was at times frustrating, but overall very impressive. Selection brush and image positioning tools are rudimentary compared to Photoshop, but the AI-generated fills were quite good and stylistically in line with the original photos. A late '60s to early '70s photo of a man kept its character when the background was replaced with what we described as a "busy cafe." When we added a background to a 1980s photo of a stock car racing team with their Chevelle, Generative Fill determined the foreground without any selection and suggested backgrounds that kept the period-appropriate film quality and saturation of the original photo.

The effects weren't perfect, but they were quite good. A layered version of either of these could be edited in Photoshop to create a perfectly convincing composite. Switching contexts and adding a "carnival" background to the racing photo resulted in a period-appropriate shot from (apparently) the Carnival in Rio with the original American good ol' boys and their Chevelle in the foreground, which was somehow pretty convincing.

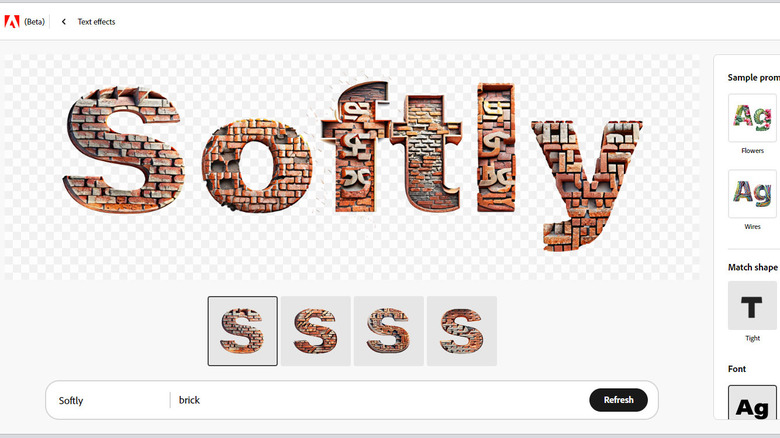

Text Effects lets you brilliantly ruin your slideshow with, well, text effects

After a few decades of trying to dissuade your staff from using 17 fonts and unnecessary visual baubles, you can give up now. Not because you've finally won but because Text Effects overruns your strongholds with an endless array of decorative typography that will turn the most drab earnings report into a psychedelic experience.

In practice, it's possible to get some good results with a little patience. It's also possible to get some kooky results with no patience at all. Stylizing the letter S with vines, surely the softest of softballs, generated rather dull results. Changing the prompt to snakes rather than vines wasn't much better. Making it "vines made of snakes" was a bit more exciting. However, when we switched to the word "softly" made of bricks, things started to go a little off the rails. The bricks were there, as were inexplicable gaps in some letters and weird brick-textured shapes that resembled type or iconography.

Somewhat disappointingly, the word "suss," when translated to brick, revealed three identical examples of the letter S rather than rendering a unique pattern for each. Given the letter T and various fills to generate, Text Effects struggled again with bicycles but returned a decent T made of donuts. Perhaps the best was the T made of the letter T, which wasn't what we got, but the result looked pretty good. There's a lot of room to experiment here, and odds are you'll get something cool looking — eventually.

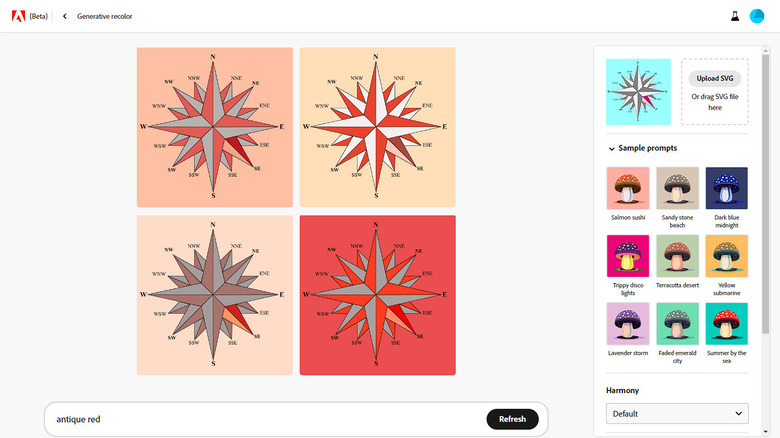

Generative Recolor helps you stylize vector art

Instantly make any bizarre thing you cook up with generative AI compliant with brand standards by using Generative Recolor to put everything in the officially sanctioned color palette, or close to it. Vector graphic editing has long been a specialized skill, requiring software and knowledge not often available outside of marketing departments. Whatever use cases Adobe intends, Recolor will certainly be a boon to those who typically have to queue up for overtaxed junior designers.

Feeding Recolor a random compass rose from Wikimedia Commons and the prompt "antique working toward PMS199" resulted in four identical, unchanged versions. "Antique red" had the same result, yielding four identical black-and-white graphics. The sample prompts ("salmon sushi," "trippy disco lights," and "lavender storm") also failed to change the image, which must have been incompatible with Generative Recolor, so we swapped it for a different compass rose with more success. Any given prompt, including the samples, would typically return three matching images and one out of left field. Still, it did a good job of applying colors in a way that made sense.

An Adobe sample image, a randomly colored abstract landscape with a color scheme described as "Sakura in the snow," seemed to come out in different random colors. Changing the prompt to "Sakura in the flames" yielded a slightly warmer color scheme. Workable, all in all, but no improvement over calling that guy you know in marketing.

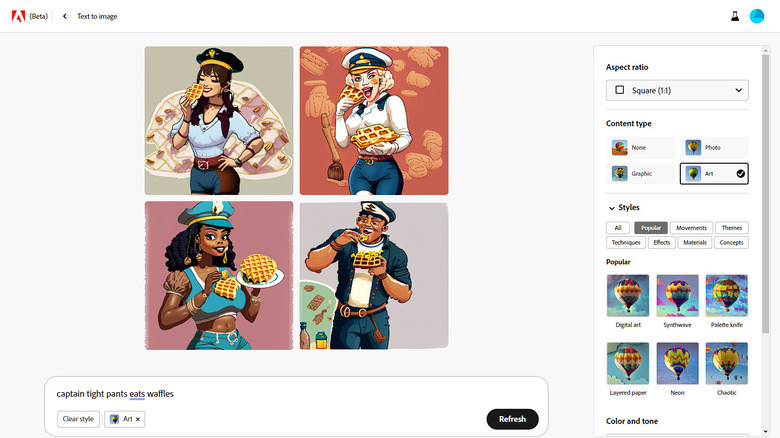

What you can't do with Adobe generative AI

Try this in Firefly's text to image tool: create an image based on the prompt "Captain tight pants eats waffles." Now, if you need to, Google "captain tight pants." You won't see a lot of overlap because Sensei's tools are restricted in a number of ways that make them safe for corporate creative environments.

Firefly isn't trained on images that Adobe can't get clear licensing for, and it will not use intellectual property that is inappropriate to its enterprise users. This means that you'll get a lot of images that originate in the Adobe Stock library or from public domain sources. You also can't reliably ask Firefly for something in the style of a given artist or photographer because Adobe considers that derivative in a way that's not commercially acceptable, which is an approach the company should be applauded for, even if it's a legal self-defense maneuver. Adobe also promises to develop a compensation model to ensure creators are paid for their contributions to AI training.

Another thing you can't rely upon Sensei for is miracles. Searching for something like "hummingbird smoking a pipe" returns images that look like the output of 1990s morphing software, and the pipe is as likely to be sticking out of the back of the bird's head as anything else. Other equally bizarre searches turn up serviceable mashups, and it's difficult to figure out where the failings originate. That Adobe is focusing its AI training on likely business use cases rather than 14-year-olds goofing off might explain such a limitation.

Where you can use these generative AI tools

You can play with Firefly online now, as we have, and many of the tools are already folded into the current Beta versions of Photoshop, including the free trial version. Generative Recolor has been added to Illustrator, where we'd expect to see text to vector once it's released. Parts of Firefly are available in Adobe's free Adobe Express.

Meanwhile, Adobe is aggressively integrating them with Premiere and other products, as well as licensing them for use in third-party applications like Google's Bard. Adobe says the Firefly web platform works on Chrome, Safari, and Edge browsers, although mobile and tablet support is limited. This limitation seemed to come into play when we attempted to resize the interface in the browser and got a mobile-related error message.

As we said, you can't use the images generated by Firefly for commercial purposes, and it's clear from our experimentation and some other issues that Firefly isn't yet ready for prime time. Some users report getting an "unable to load" error, which Adobe unhelpfully says is explained by the fact that "Any images generated that violate the will not be viewable." So, take care not to use prompts that might cause Firefly to violate the.

More seriously, though, this seems to suggest that there's a layer of filtering at work post-generation. Presumably, either Adobe is putting a lot of faith in that filtering or plans to train Firefly to filter itself in the future.

What you will be able to do with Adobe Generative AI soon

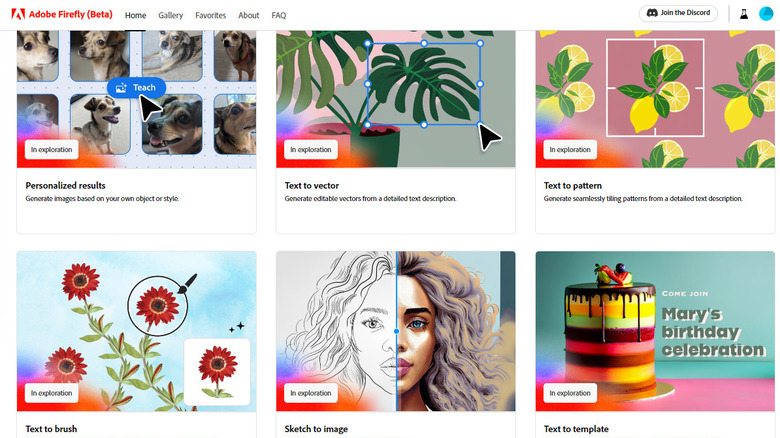

Adobe's generative AI tools are a work in progress, as all AI tools are definitionally, so naturally there are some tools in development — they call it "in exploration" — that will expand what Sensei means to you. Or maybe what Firefly means to you. One of those.

Upcoming products and capabilities include extending images like landscapes to fit your desired format, fleshing out full scenes from simple 3D models, per-user or per-corporation customization, the generation of vector art and pattern tiles from text descriptions, converting simple sketches to fully realized illustrations, and more. In addition, you'll be able to do some or all of this via an API — or have your AI assistant do it for you.

Once the features are out of beta and you can use them freely, Adobe platforms will generate metadata that marks Firefly's creations as AI-generated. This is part of its Content Authenticity Initiative and the Coalition for Content Provenance and Authenticity (C2PA), which Adobe co-founded; both are designed to address concerns about the ethical use of AI-generated images. In the future, Adobe products will also honor a "do not train" flag on content that creators don't want used to generate these images.

While there is still much to be done, Adobe seems to be taking important steps toward turning AI image generation into something that enterprise organizations and content creators can incorporate into their current systems. It should, at the very least, be interesting.