NVIDIA GPU Neural Network Makes Google's Cat-Spotter Look Dumb

Google's artificial neural network, which taught itself to recognize cats in 2012, has been left looking like a dunce, with a new network by NVIDIA and Stanford University packing more than six times the brainpower. The new large-scale neural network uses NVIDIA's GPUs to pack in 6.5x more processing power than Google's system before it, but in a far smaller footprint. In fact, where Google's system relied on the combined power of 1,000 servers, NVIDIA and Stanford pieced together their alternative with just sixteen.

It took NVIDIA only three servers to replicate the processing power of Google's neural network from last year. The search giant relied on CPU power for its data crunching, with a total of 16,000 CPU cores spread across its thousand machines.

However, NVIDIA's use of GPU acceleration means its 11.2-billion-parameter network could be condensed into a far smaller setup. It's not the first time we've seen GPUs harnessed together for this sort of huge parallel processing; earlier this year, climate researchers used GPU-powered supercomputers to model CO2 emissions, while numerous other supercomputers, such as Titan, have paired GPUs with CPUs to improve overall performance.

What makes this latest neural network particularly interesting is the fact that it relies on off-the-shelf hardware. Each of the sixteen servers has two quad-core processors and four NVIDIA GeForce GTX 680 GPUs, with each GPU getting 4GB of memory. An FDR InfiniBand adapter in each server links the machines together for low-latency communication. Admittedly, a GTX 680 card will still set you back in excess of $400 apiece, but this is still a far cheaper "supercomputer" than many we've seen.
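
To put those per-server figures in cluster-wide terms, here is a rough back-of-the-envelope sketch in Python. The hardware counts, the $400 price floor and the 11.2 billion parameter figure come from the article; the 4 bytes per parameter (32-bit floats) is our own assumption rather than something NVIDIA or Stanford has stated, and the function name `cluster_totals` is purely illustrative.

```python
# Figures quoted in the article.
SERVERS = 16
CPUS_PER_SERVER = 2
CORES_PER_CPU = 4          # "quad-core"
GPUS_PER_SERVER = 4
GPU_MEMORY_GB = 4
GPU_PRICE_USD = 400        # "in excess of $400", so treat this as a lower bound
PARAMETERS = 11.2e9

# Assumption (not from the article): each parameter is stored as a 32-bit float.
BYTES_PER_PARAMETER = 4


def cluster_totals():
    """Return cluster-wide totals derived from the per-server figures."""
    gpus = SERVERS * GPUS_PER_SERVER
    return {
        "gpus": gpus,
        "cpu_cores": SERVERS * CPUS_PER_SERVER * CORES_PER_CPU,
        "gpu_memory_gb": gpus * GPU_MEMORY_GB,
        "min_gpu_cost_usd": gpus * GPU_PRICE_USD,
        # Storage for the parameters alone, in decimal gigabytes.
        "parameter_storage_gb": PARAMETERS * BYTES_PER_PARAMETER / 1e9,
    }


if __name__ == "__main__":
    for name, value in cluster_totals().items():
        print(f"{name}: {value:,.1f}")
```

On those numbers the cluster comes to 64 GPUs, 128 CPU cores and 256GB of graphics memory, with at least $25,600 spent on graphics cards alone. Under the 32-bit assumption, the parameters themselves would occupy only a fraction of that combined GPU memory.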

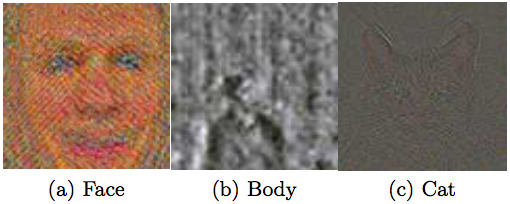

The new network is the handiwork of artificial intelligence researcher Andrew Ng at Stanford, who also worked with Google last year to develop the 1,000-machine cat-spotting cluster. That system, modeled on the activities and processes of the human brain, was able to learn what a cat looks like, and then translate that knowledge into spotting different cats across multiple YouTube videos.

Although that sounds easy to us, the sort of fuzzy logic needed to recognize a whole type of animal or object, rather than just one specific example, is very difficult for computers. It means, say, understanding that cats can be different colors or patterns, and extrapolating recognition from a limited set of example pictures. Ng and the Google team showed the neural network millions of images, building up its ability to spot the intrinsic characteristics of cats in future.

While it worked, the sheer scale of the CPU-driven network made it impractical. The new, NVIDIA-powered version offers not only an increase in processing grunt but also a smaller form factor, and is likely to be of keen interest to those doing things like speech and object recognition, natural language translation, and other tasks that demand a more flexible approach.