Microsoft's CaptionBot AI Wants To Understand Your Photos

Microsoft's plan to make artificial intelligence so helpful, we can't help but welcome it into our lives, continues today with the launch of CaptionBot. Following in the footsteps of Fetch! and Translator, CaptionBot sets itself a fairly straightforward challenge on the face of things, but behind the scenes it's really not as easy as you might think.

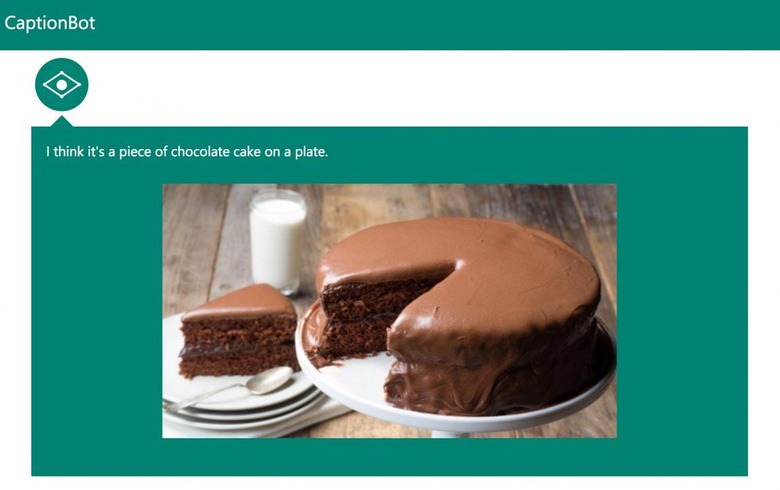

Point Microsoft CaptionBot in the direction of a photo – there are a selection you can use, or alternatively you can drop in an URL to a picture uploaded elsewhere – and it'll try to figure out what's in the scene. So, if you show it a picture of a chocolate cake like the one above, it might say "I think it's a piece of chocolate cake on a plate."

While describing the content of a picture might not be tricky for a human, for a machine it's a whole lot more difficult. It involves pattern recognition and context, figuring out what might be in the image based on previously seen photos – i.e. "this looks a lot like other cakes I've seen" – and then the situation that object is in.

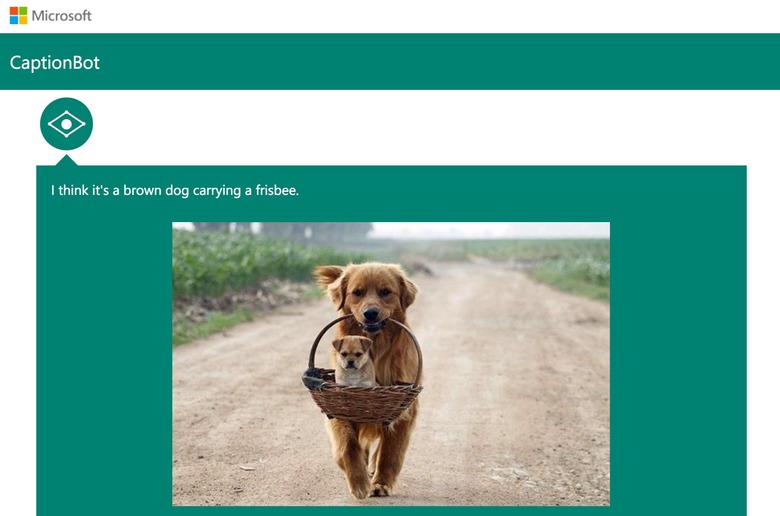

As with previous research projects from Microsoft, CaptionBot doesn't always get it right. Sometimes, it gets some of the broader details correct – like the dog carrying something in the image below – but goofs on the finer details, like mistaking the puppy being carried for a frisbee.

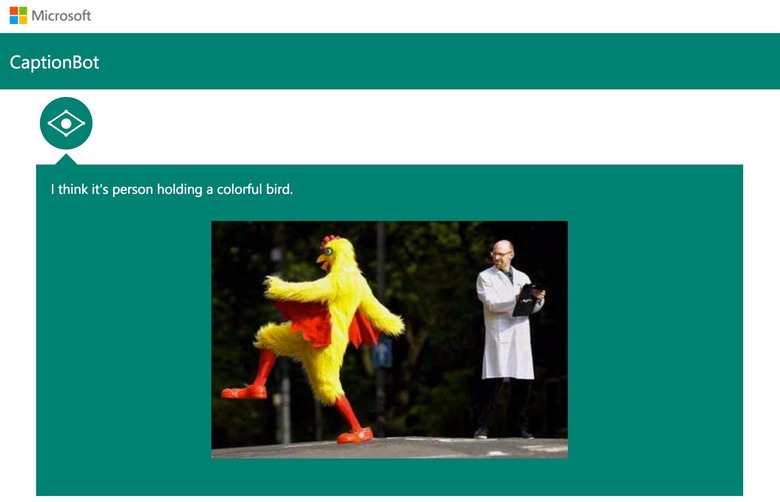

Other times, the context of the photo is perhaps simply too outlandish to be understood fully.

In the sample below, for instance, CaptionBot identified that a bird was in the frame, but missed out that it was actually a man wearing a bird costume. The fact that he's being observed by a doctor was also overlooked.

Still, at least CaptionBot tried in those situations. At other times, the AI is simply so stumped – like the pink helicopter below – that it just gives up.

Microsoft is asking that the success – or otherwise – of CaptionBot be rated each time, in the hope that the algorithms that allow it to recognize people, objects, and situations are refined over time.

Even though it may seem like an entertaining gimmick today, the potential applications for CaptionBot or a tool like it aren't too hard to imagine. Photo gallery apps can already identify different people by their faces and tag them; with AI looking at context, too, what the people are doing could be labeled as well, all without the photographers themselves going through the laborious task of annotating them each individually.

Researchers within Microsoft, meanwhile, are already looking at ways to evolve such AIs from simple labeling into visual storytelling systems:

"For example, while another image captioning system might describe an image as "a group of people dancing," the visual storytelling system would instead say "We had a ton of fun dancing." And while another captioning system might say, "This is a picture of a float in a parade," this system would instead say "Some of the floats were very colorful."" Microsoft

That could have uses in building stories out of shared video – perhaps from life-logging cameras, which Microsoft has been exploring for decades now, and which can produce vast quantities of images that would take several lifetimes just to correctly tag if done manually – or make visual content easier for those blind or sight-impaired to understand.

Microsoft Research has had a hit-and-miss experience with its AI projects in recent weeks. A virtual teen, named Tay, was meant to show the potential of AIs learning from humans but, after being fed a diet of vitriol and prejudice by Twitter users, turned into a virtual racist instead.

This time around, CaptionBot is playing it safe and won't respond to explicit photos or those with contentious figures, like Adolf Hitler, in an attempt to keep the whole thing a little more safe-for-work.

SOURCE CaptionBot