Cortana Leads Microsoft's AI Assault With Intel And HP Deals

Cortana may have only just got a standalone smart speaker to reside in, but Microsoft's Alexa rival is set to get a whole lot smarter. The next generation of skills, AI abilities, and conversational computing for Cortana is being detailed today at BUILD 2017, Microsoft's annual developer event. It's part of a significant push into AI across every aspect of the software behemoth's portfolio.

Though it may have started out on Windows Phone, Cortana has spread broadly throughout Microsoft's line-up. At BUILD this week, the company announced that the voice-controlled agent now has 141m monthly active users, across voice and typed interactions. Now Microsoft is setting developers up for the next step in that journey, with the Cortana Skills Kit entering public preview.

Available across Windows 10, Android, iOS, and Harman Kardon's recently-announced Invoke speaker, the kit lets developers create skills for the agent by building a bot. That bot can then be deployed to the new Cortana Channel in the Bot Framework. And while Cortana may not have many standalone devices to speak through so far, Microsoft has plans there too: the company announced partnerships with HP and Intel today to create Cortana-powered devices and reference platforms, respectively.

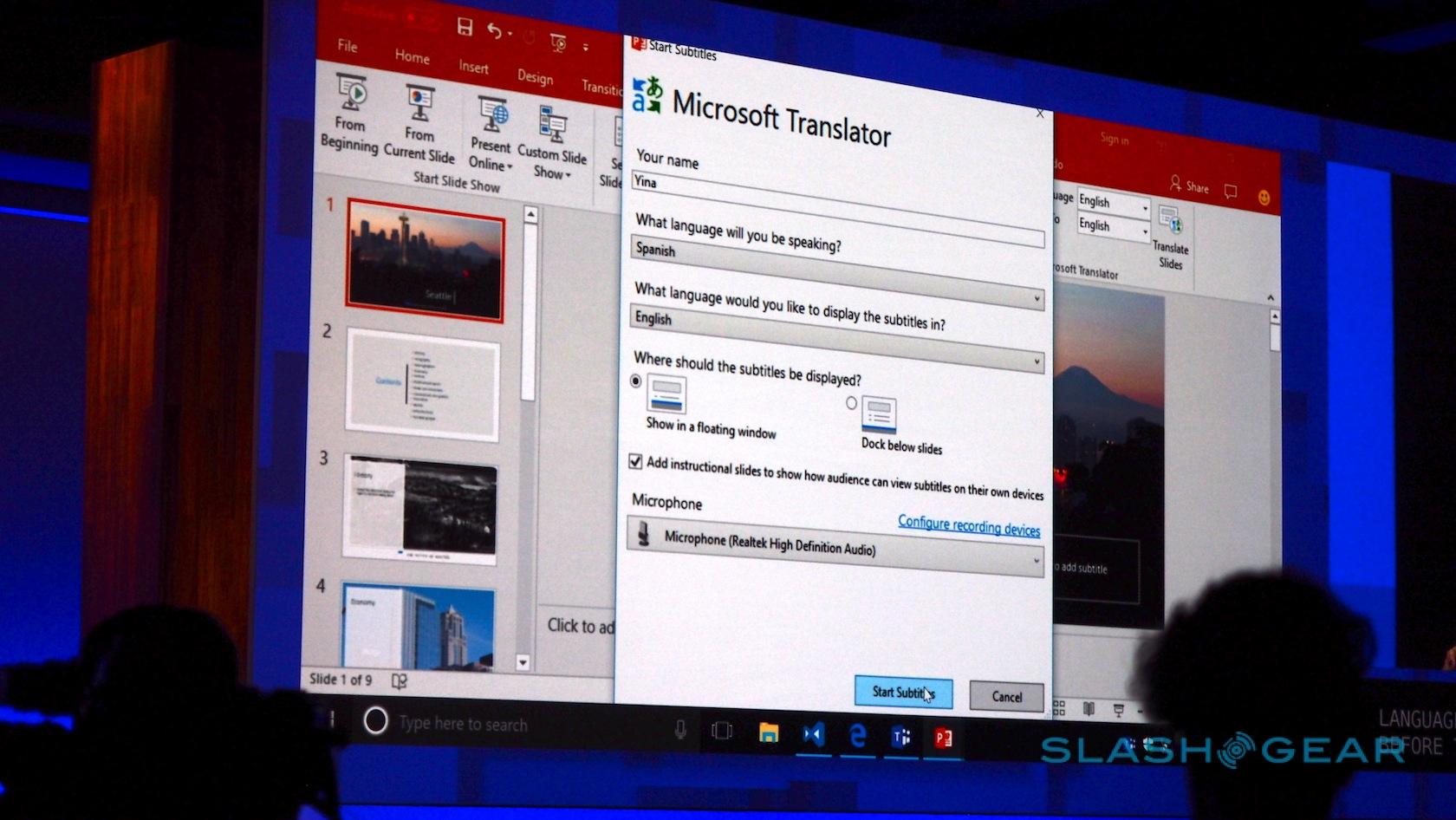

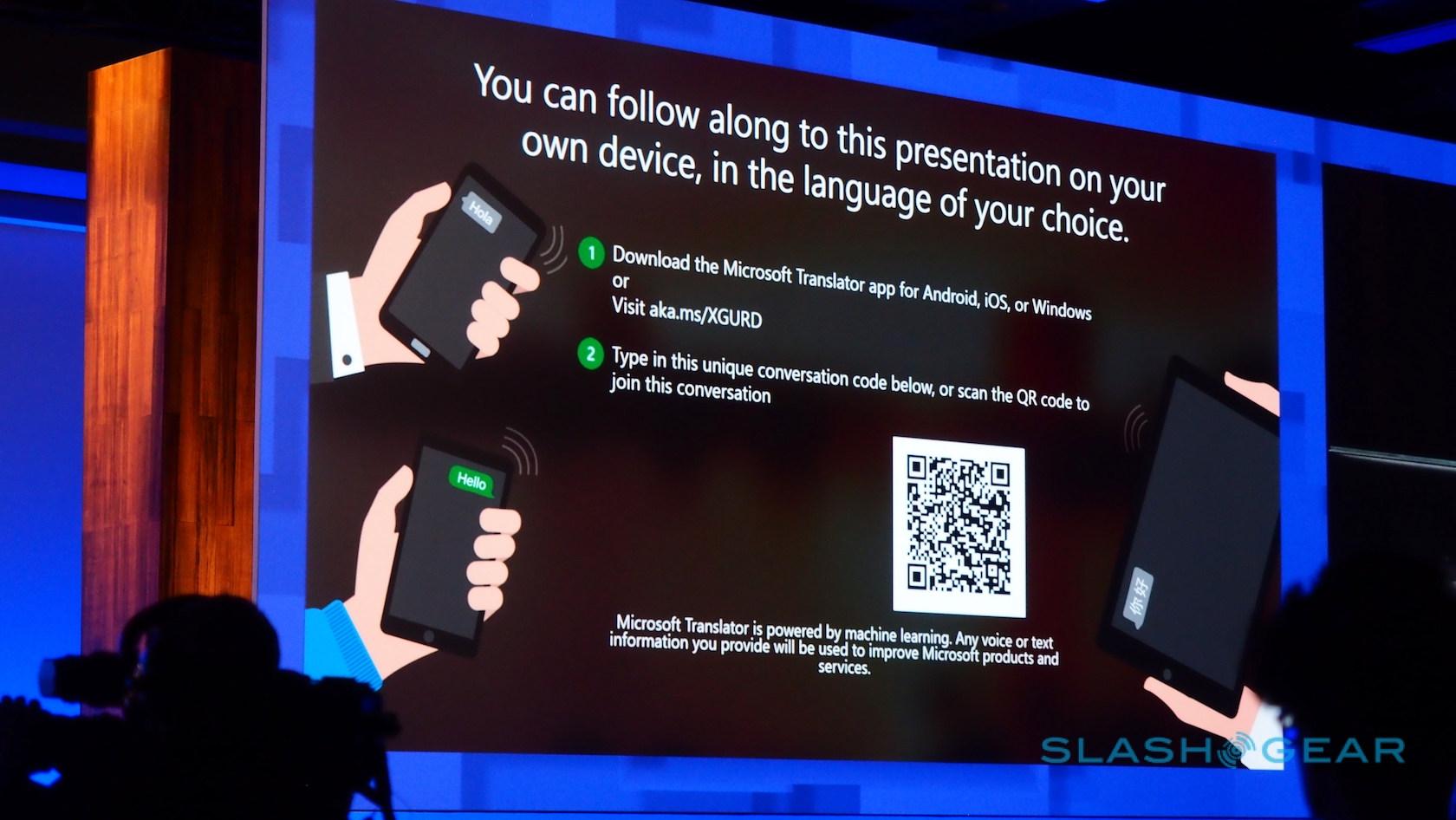

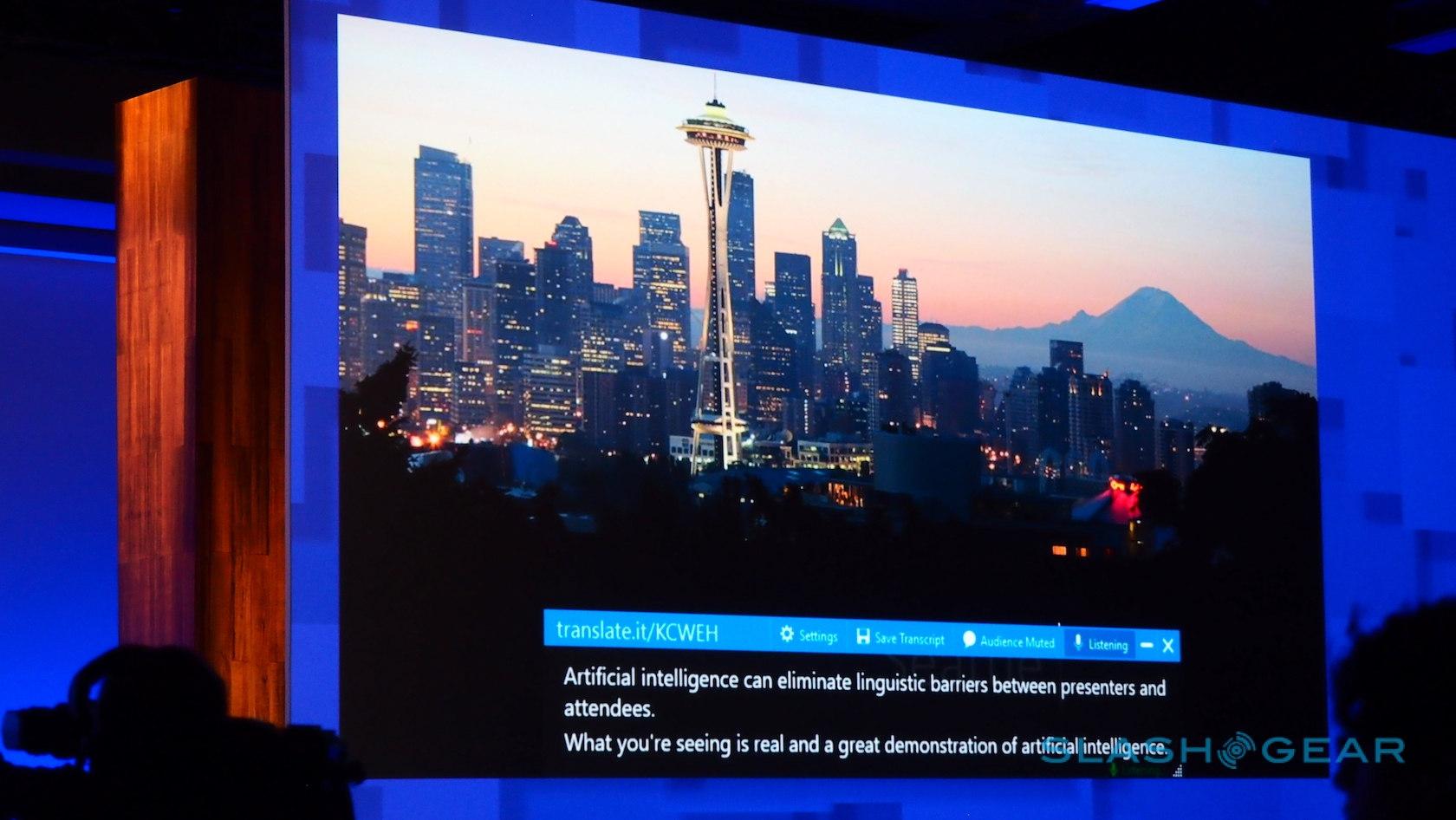

There's more to Microsoft's artificial intelligence play than just Cortana, mind. The company is also adding a new bevy of cognitive services to its AI platform, bringing the total to 29. New arrivals include Bing Custom Search, Custom Vision Service, Custom Decision Service, and Video Indexer, which developers can tap into with easy, off-the-shelf plugins. For PowerPoint, there's a new Presentation Translator that, thanks to the Translation APIs, can convert presentations into different languages in real-time.

For bots, meanwhile, there's now support for Adaptive Cards. With the Bot Framework, developers can create a single set of answer cards that works across multiple services and platforms. New channels for Bing, Cortana, and Skype for Business open up fresh avenues for bot interaction.

Looking ahead, there's the new Cognitive Services Labs. It's designed as a catch-all for Microsoft's more experimental services, kicking off with the new Gesture API. The API is still early in its development, but Microsoft eventually hopes it'll allow interaction by gesture alone.

Speech is clearly where the company expects the most traction. "We really still believe that, in a couple of years, if you build software everything will want to understand human language and speech," says Lili Cheng, general manager of Microsoft's FUSE Labs. Nonetheless, the ways in which tomorrow's AIs act among us may be more discreet.

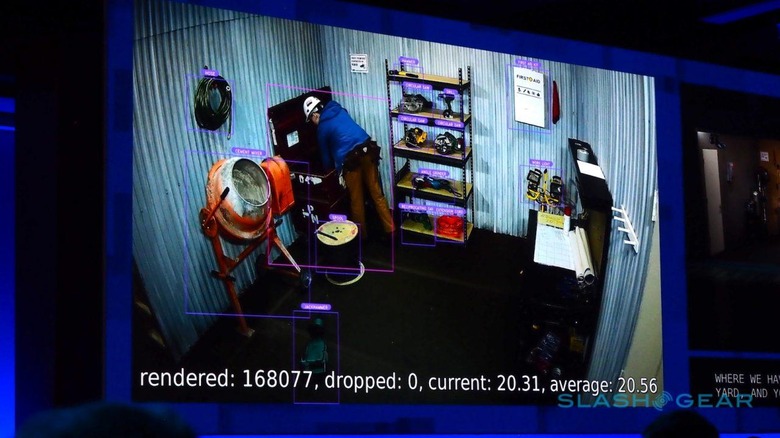

In one keynote demonstration, Microsoft showed a near-future implementation of AI for workplace safety. By fusing AI, sensors, mobile devices, and cognitive services, the system could recognize both people and objects, such as industrial equipment. Once each piece of equipment was tagged with its individual properties, how it ought to be safely used, and who is cleared to operate it, the AI could alert whoever was nearest if it observed that equipment being used incorrectly.

It's unclear just how "near" that specific vision of the future might be. Still, Microsoft is pushing ahead with real-world implementations of its AI. As well as consumer devices like the Invoke smart speaker, there are also deployments such as predictive analysis in the cloud that monitors heavy machinery for signs of an impending breakdown. That analysis is being moved from a centralized cloud computing platform out to edge computing, with the upshot being a dramatic reduction in the time it takes to spot a flaw and shut the whole system down before something expensive breaks.