Reddit's Co-Founder Says Much Of The Internet Is Dead - And Redditors Agree

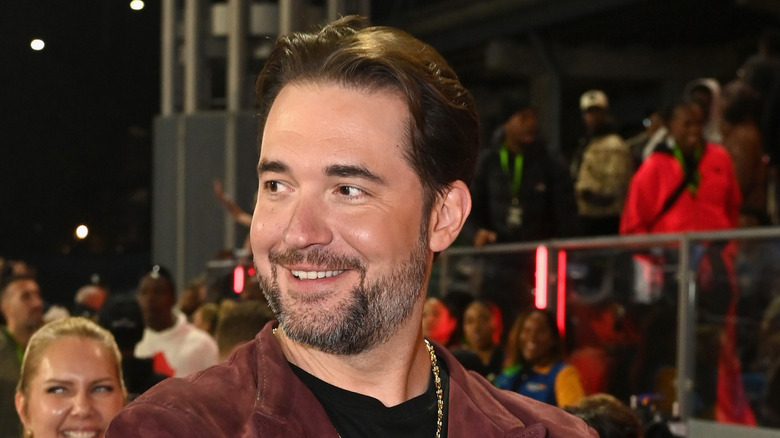

A big conversation is taking place right now about how much of the internet is actually still human. People have been circling this topic for a long time, but Reddit co-founder Alexis Ohanian recently lent his voice to the conversation, sparking renewed interest among members of the community. Ohanian appeared on an episode of TBPN and stated that "much of the internet is now just dead," citing the "dead internet theory" and asserting that so much of the internet is now driven by AI and bots that users are losing the feeling of human-to-human connection that we all value so much.

Shortly after the episode aired, dozens of Redditors came together in a Reddit thread to say that they agreed with his assessment. Many shared the view that an inordinate amount of the internet is now controlled by automated software, and that less and less of the online world feels directly shaped by human intent. Some even argued that the internet is rapidly heading toward a place where bots, more often than not, are just producing ads for other bots.

Procedurally generated content and automated spam have been issues for over a decade, but the advent of AI-generated content is taking these old inconveniences to new levels. This ouroboros of content creation and consumption may end up causing more problems than it resolves, both for advertisers and for those being advertised to.

What is the 'dead internet theory'?

Before we get too deep into the discussion, let's first go over what the "dead internet theory" is and how it came about. Discussions about the rise in bot-generated content have been circulating for over a decade, but one of the first uses of the term itself was in an anonymous post that appeared on 4chan's /x/ board in September 2020. The original version of the theory posited that the internet was being run by a cloak-and-dagger cohort of powerful individuals behind the scenes, as well as a more visible league of paid influencers. It suggested that this was by design, intended as a means of controlling attention and feeding consumers into a never-ending cycle of advertising and consumption.

The "dead internet theory," in its original form, has been widely dismissed by critics as a conspiracy theory. New Models founder Caroline Busta called it a "paranoid fantasy" in an interview with The Atlantic, while Robert Mariani, writing in The New Atlantis, stated that the theory "reads like a mix between a genuinely held conspiracy theory and a collaborative creepypasta — an internet urban legend written to both amuse and scare its readers with tales on the edge of plausibility." Mariani concluded their point by saying that "[t]he theory is fun, but it's not true, at least not yet."

However, the theory, as most people interpret it today, is no longer about a league of invisible puppet masters seeking to control and manipulate the public. Instead, it's primarily focused on the much simpler (and more believable) idea that most of the internet is composed of text, images, and other forms of content that have been produced by bots and AI rather than humans.

How much internet traffic is actually human?

This newer interpretation of the "dead internet theory" appears much closer to the mark, and there's a fair amount of data to support it. Research by cybersecurity firm Imperva estimated that bot traffic accounted for 37.2% of internet activity in 2019 and has climbed in the years since. Its latest figures (via The Independent) state that bot traffic reached 51% in 2024, with a corresponding rise in "bad" bots.

For example, just take a look at your email inbox and ask yourself how many of the emails were written by someone who actually typed in your address and hit the send button. Bots account for a lot of the activity that makes the internet what it is. There are bots that scan websites for information, bots that send private messages and comment on social media posts, bots that scrape data for targeted ads, and many other varieties. Several Redditors in the discussion about Ohanian's comments even questioned how much of Reddit itself is bot-driven, expressing doubts about the authenticity of the platform's upvote system and wondering whether a significant portion of the conversations and responses are AI-generated.

That isn't all, though. It used to be that bots largely relied on human-written, pre-coded text that was then targeted and shared on a massive scale. With the introduction of AI, the bots themselves can now be created by a computer, with a prominent example being Meta infamously using AI to create bot accounts on its own platform. Bot conversations can now be entirely computer-generated, with no human involvement.

We're getting more and more AI-generated content

Internet content is another area where humans are seemingly getting drowned out. More and more frequently, we're seeing companies turn to AI-generated advertising content, with some pretty shameful uses of AI appearing in commercials from major corporations, but that's just the tip of the proverbial iceberg.

AI-generated content isn't limited to corporate interests. Free access to generative AI tools like ChatGPT and Gemini means that just about everyone can use the technology. Anyone with a website or social media account can use it to generate complex content and then share it, further increasing the amount of non-human-made media on the internet. There are certainly hundreds of platforms dedicated to sharing AI-generated images, but AI content is also flooding into Facebook, Instagram, X, TikTok, YouTube, and virtually every other photo- and video-sharing platform there is. What's more, while there are a few ways to tell whether an image was AI-generated or is the real deal, the constant awareness it requires can be fatiguing, especially as AI content continues to become more convincing.

It's also nearly impossible to estimate how much AI is used to build websites, whether in the code itself, thanks to AI coding tools, or in the text that we read. Some of the Redditors complained that they have noticed an increase in the number of AI-written articles recommended by search engines. It can be very difficult to tell if an article, product description, or a company's service description was written by a human.

What problems arise from a less-human internet?

There are a few immediate problems with the way the internet is heading, and some that venture into the realm of speculation. The notion of bots advertising to bots or the cannibalism of AI scraping AI-generated content may pose future problems, but there are a few more immediate concerns that have already emerged.

One of the most insidious issues we're still trying to manage is the disastrous spread of disinformation and fake news. This is a problem that involves everyone, from the bad actors who deliberately create misleading, AI-generated content all the way to your kindly grandma who shares a link without realizing it's fraudulent. NewsGuard, an organization that specializes in tracking fake news sites, has identified 1,271 news sites that actively spread unreliable, AI-generated images and information. That is more than ten times the number of such sites that existed in 2022.

It's not that people weren't able to produce false information before; it's just that AI has dramatically ramped up the scale of production. AI image generation, in particular, has helped make the fake content more deceptive. Reddit commenters also mentioned how frustrating it's become trying to get reliable information from search engines. The result of all this is that you might start to suffer from a sort of vigilance fatigue. Constantly having to be on the lookout for fake accounts and misinformation is exhausting, making the internet a less enjoyable place to interact and much less useful for gathering information.