What Is Path Tracing And Is It Different Than Ray Tracing?

Ray tracing and path tracing sound similar, and technically they are closely related. Both methods simulate how light travels in a 3D environment, but the way they work and the results they produce differ significantly. Path tracing isn't just "ray tracing, but better." In fact, the difference between them is what separates a good-looking scene from one that feels almost photographic. Ray tracing itself is a step above rasterization, the old-school method most games still use, which turns a 3D scene into a flat image and then fills in the pixels with color. Rasterization is fast, but not very smart about how light actually behaves.

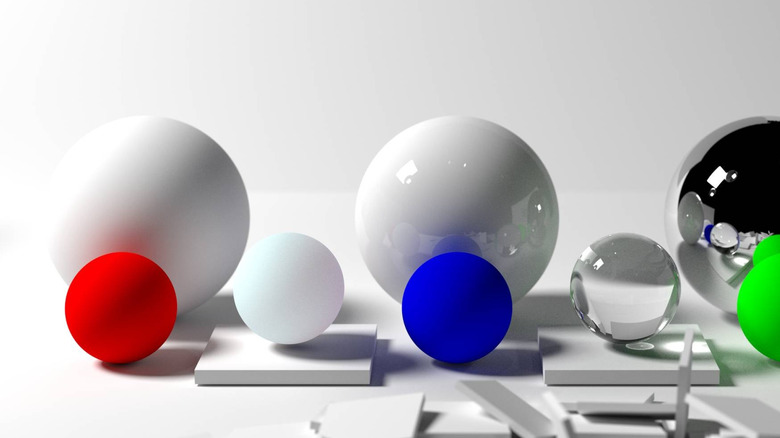

Ray tracing works by casting rays from the camera's point of view into the scene and calculating how they reflect or scatter off different surfaces. It typically stops after a few bounces, which is enough to generate realistic shadows or reflections, but only in the most important parts of the scene. With path tracing, however, each ray keeps bouncing around the scene, interacting with everything from walls and windows to floors and even dust, until it reaches a light source or has lost so much energy that it no longer contributes to the image.

It follows the entire light journey, producing lighting that behaves the way real light does, with soft shadows, natural reflections, and believable indirect glow. That's why path-traced scenes look more natural, and sometimes weirdly photoreal: the light in them is simulated the way real light behaves. Of course, it has long been too demanding to run in real time on gaming hardware, which is why, up until recently, full path tracing was mostly something you'd only see in animated films or VFX. But thanks to better ray tracing hardware and upscaling technology, that wall's starting to crack.
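To make the difference between "stop after a few bounces" and "follow the ray until it fades out" more concrete, here is a minimal Python sketch. It is a toy, not how any shipping game or renderer is written: there is no geometry at all, and the constants `LIGHT_HIT_CHANCE`, `SURFACE_ALBEDO`, and `LIGHT_EMISSION`, along with the function names, are hypothetical values chosen purely for illustration. The "fade out" step uses a standard trick called Russian roulette, where a ray randomly dies with a probability tied to how much light the surface absorbs.

```python
import random

# Hypothetical toy "scene": every time a ray lands somewhere, it either
# reaches the light or hits a gray surface and scatters again.
LIGHT_HIT_CHANCE = 0.2  # assumed chance that a ray segment reaches the light
SURFACE_ALBEDO = 0.7    # assumed fraction of light a surface reflects
LIGHT_EMISSION = 1.0    # assumed brightness of the light


def trace_limited(rng, max_bounces):
    """Follow one ray, giving up after a fixed number of bounces."""
    throughput = 1.0  # how much light this path can still carry
    for _ in range(max_bounces + 1):  # camera ray plus max_bounces bounces
        if rng.random() < LIGHT_HIT_CHANCE:
            return throughput * LIGHT_EMISSION
        throughput *= SURFACE_ALBEDO  # the surface absorbs some energy
    return 0.0  # bounce budget spent: any remaining light is simply lost


def trace_path(rng):
    """Follow one ray until it fades out (Russian roulette termination)."""
    while True:
        if rng.random() < LIGHT_HIT_CHANCE:
            return LIGHT_EMISSION
        # The ray survives the bounce with probability SURFACE_ALBEDO.
        # Survivors keep full weight (albedo / survival chance == 1),
        # so the average over many rays stays unbiased.
        if rng.random() >= SURFACE_ALBEDO:
            return 0.0


def render_pixel(trace, samples, seed=0):
    """Average many random rays to estimate one pixel's brightness."""
    rng = random.Random(seed)
    return sum(trace(rng) for _ in range(samples)) / samples


if __name__ == "__main__":
    few_bounces = render_pixel(lambda rng: trace_limited(rng, 2), 100_000)
    full_path = render_pixel(trace_path, 100_000)
    print(f"two-bounce limit: {few_bounces:.3f}")
    print(f"full path trace:  {full_path:.3f}")
```

With these assumed numbers, the two-bounce version settles near 0.37 while the full version settles near 0.45. The missing light is the indirect glow that arrives only after many bounces, which is the part a bounce limit cuts off. The averaging in `render_pixel` also hints at the cost: each pixel needs many random rays before the noise settles down.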

What path tracing changes in modern games

In the first wave of ray-traced games, game engines were designed to use only a few ray-traced effects. Titles like "Battlefield V" and "Call of Duty: Modern Warfare (2019)" used Microsoft's DirectX Raytracing (DXR) API to add just one or two ray tracing effects — usually shadows or reflections — on top of a rasterized frame. Even "Control," one of the most ambitious early examples of ray tracing, let players toggle ray-traced reflections, shadows, and lighting separately depending on their system's limits. When those features were disabled, the game fell back to traditional rasterized effects instead. This hybrid rendering approach made sense at the time since each ray-traced feature was demanding on GPU performance.

This is exactly why path tracing feels like a big step forward. Instead of just layering a particular ray-traced visual on top of rasterization, it simulates all lighting effects, including reflections, shadows, bounce lighting, and ambient occlusion, through a single, physically accurate process. "Cyberpunk 2077" is the most complete example of this so far. Its "Ray Tracing: Overdrive" mode lists one unified option simply labeled path tracing.

"Indiana Jones and the Great Circle" takes a more flexible approach. Its "Full-Time Ray Tracing" mode is split into Medium, High, and Ultra Path Tracing modes, but players can still see and adjust individual RT settings like sun shadows and reflections. Even with those individual settings turned off, the game's base global illumination system remains ray-traced, because the game's lighting requires it to function. Simply put, the lighting pipeline always relies on ray tracing, but players have more control over which path-traced effects are active and at what quality.

What difference does path tracing make for developers

Lighting has always been one of the most tedious and time-consuming parts of game development, for beginners and veterans alike. In the past, artists had to place dozens of fake lights just to make a scene look natural. That meant positioning extra light sources, baking light maps, and using tricks like cube maps and screen-space reflections to simulate real effects. Bounce lighting, too, had to be faked, and getting the mood right was a time sink. These methods required extensive setup and provided only limited realism.

Path tracing, however, cuts down much of that busywork. Light from a single source can bounce through the environment naturally, illuminating walls, ceilings, and objects without the extra work. Instead of placing dozens of fill lights or adjusting shadow maps, developers let the renderer simulate how light rays bounce, reflect, refract, and scatter, all driven by physical rules. For developers, that means they no longer have to sell the lighting by layering tricks, which translates to less setup, fewer hacks, and more time to focus on storytelling and design.