What Do OpenAI & Anthropic's New 'Vibe Coding' Tools Actually Do?

The tech world is buzzing about the potential of agentic AI to change the way the world works. More advanced than traditional chatbots, agentic AI uses reasoning software that independently executes complex sequences of tasks requiring in-depth decision-making. While the most high-profile examples are in AI-connected vehicles, agentic reasoning models are used across industries, from supply chain management to sales and scientific research. These developments are touted as some of the most innovative in the history of AI, with the potential to fundamentally change how people interact with their work.

One recent advancement is in coding, where new applications purport to review, edit, and write programmers' code for them. In this practice, known as vibe coding, users build software by entering prompts directly into AI systems. The trend extends beyond amateur programmers, however, as major software companies like Microsoft and Amazon are increasingly incorporating AI-generated code into their software. For instance, more than a quarter of Google's new code is reportedly generated by AI, while Mark Zuckerberg predicted that half of Meta's would be built by AI within a year.

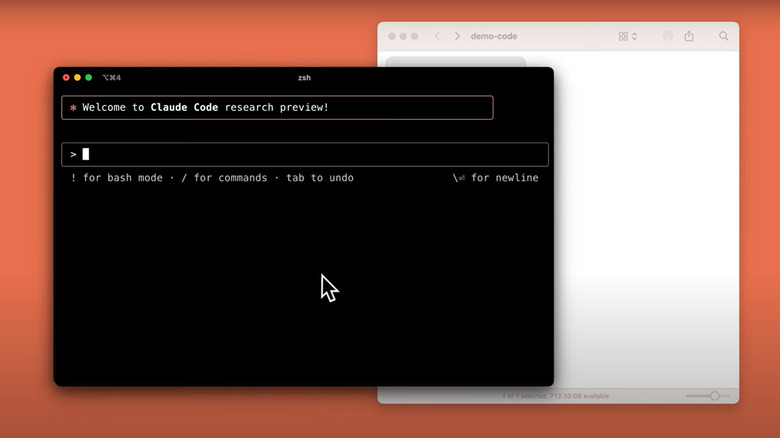

Pioneering and capitalizing on these trends, several companies are developing AI programs for the coding process. Two of the biggest players, OpenAI and Anthropic, released their newest AI coding applications in May 2025, dubbed Codex and Claude Code, respectively. Geared towards advanced software engineers, these programs offer a host of features that promise to write and test large sections of code. While each has its benefits, the rise of AI coding systems also poses significant challenges.

Anthropic's Claude Code

Although Anthropic's command-line tool, Claude Code, is designed to make the coding process easier, its emphasis on customization and flexibility means it takes time, effort, and a degree of expertise to install and run effectively. As such, it is a difficult sell for novice coders.

Integrated directly into a programmer's development environment, Claude can edit files, execute tests, find and fix bugs, automate Git operations, and resolve merge conflicts. Although usage varies by customer, Anthropic's user guide outlines several common workflows. For example, coders can ask Claude not only to find problems but also to plan, implement, and catalogue solutions. Another frequent application is to have Claude create and run tests before instructing the system to write code capable of passing them.
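The test-first workflow described above can be sketched in a few lines of Python. This is an illustrative example rather than anything taken from Anthropic's guide: the `slugify` function and its test cases are hypothetical. The tests are written first to pin down the expected behavior, and the implementation is then written to satisfy them.

```python
import re


# Step 1: tests written before the implementation exists.
# They describe the behavior that any acceptable code must satisfy.
def test_lowercases_and_hyphenates():
    assert slugify("Hello, World!") == "hello-world"


def test_collapses_repeated_separators():
    assert slugify("  vibe   coding -- tools ") == "vibe-coding-tools"


def test_empty_input_gives_empty_slug():
    assert slugify("") == ""


# Step 2: an implementation written afterwards to make the tests pass.
def slugify(title: str) -> str:
    """Turn a title into a lowercase, hyphen-separated slug."""
    words = re.findall(r"[a-z0-9]+", title.lower())
    return "-".join(words)


if __name__ == "__main__":
    for test in (
        test_lowercases_and_hyphenates,
        test_collapses_repeated_separators,
        test_empty_input_gives_empty_slug,
    ):
        test()
    print("all tests passed")
```

In practice, a user would ask the assistant to confirm that the tests fail before any implementation exists, then iterate on the code until every test passes.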

According to Anthropic, Claude adjusts to users' workflows through locally stored plain text .md files. Put simply, customers can set the ground rules for their conversations with Claude by documenting critical information like bash commands, testing instructions, and developer environment setups. Claude Code can connect to Claude models through Anthropic's API or through enterprise AI platforms like Amazon Bedrock and Google Cloud's Vertex AI. It also offers GitHub Actions that can create pull requests through Claude's GitHub app.
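To make the idea of an instructions file concrete, the sketch below generates one from a small Python dictionary. Anthropic's documentation names this file CLAUDE.md; everything else here is an assumption for illustration, including the `render_instructions` helper, the section headings, and the project commands, which describe an imagined Python project rather than any required format.

```python
import tempfile
from pathlib import Path


def render_instructions(sections: dict[str, list[str]]) -> str:
    """Render headings and bullet points as plain markdown text."""
    lines: list[str] = []
    for heading, items in sections.items():
        lines.append(f"# {heading}")
        lines.extend(f"- {item}" for item in items)
        lines.append("")  # blank line between sections
    return "\n".join(lines)


# Hypothetical notes for an imagined project; adapt to the real codebase.
PROJECT_NOTES = {
    "Bash commands": [
        "`pytest -q`: run the test suite",
        "`ruff check .`: lint the codebase",
    ],
    "Testing instructions": [
        "Add or update a test for every bug fix",
        "Run the full suite before committing",
    ],
    "Environment setup": [
        "Use Python 3.11 inside a virtual environment",
    ],
}

if __name__ == "__main__":
    # Written to a temporary directory here; a real file would live in the repo.
    with tempfile.TemporaryDirectory() as repo_root:
        path = Path(repo_root) / "CLAUDE.md"
        path.write_text(render_instructions(PROJECT_NOTES), encoding="utf-8")
        print(path.read_text(encoding="utf-8"))
```

Most users would simply write the file by hand; the point is that the instructions are ordinary, human-readable text that lives alongside the code.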

As of June 2025, Claude Code is available through three subscription packages: Pro, Max 5x, and Max 20x. At $20 a month, Pro is Anthropic's most basic subscription level and can handle "short coding sprints" using the Claude Sonnet 4 model. Max 5x, for its part, costs $100 per month and enables subscribers to work with larger codebases using Anthropic's recently released Opus 4, which the company touts as the world's "best coding model." Max 20x, at double the price of Max 5x, is intended for "power users" of the company's Opus 4 and Sonnet 4 models.

OpenAI's Codex

Codex is a cloud-based coding agent powered by OpenAI's codex-1, a version of the company's o3 model optimized for software engineering. Unlike Claude Code, which runs from the command line, Codex is accessed through the sidebar in ChatGPT. Using this familiar chatbot format, programmers can type a prompt and click 'Code' to assign a task, or click 'Ask' to pose questions about their codebase. Codex runs each task in its own isolated environment preloaded with the user's codebase, where it can execute linting, testing, and type-checking commands; tasks typically finish in thirty minutes or less. Not only can users track progress in real time, but the system also cites terminal logs and test outputs as evidence of its changes. Once a task is complete, users can review the results, request revisions, or pull the work into GitHub and their local workspaces. As with Claude Code, users can customize Codex through .md files stored in their repository.
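For a rough sense of what running checks and collecting logs involves, the Python sketch below runs a set of named commands and records whether each passed along with its terminal output. It is not OpenAI's implementation: `run_checks` and `CheckResult` are hypothetical names, nothing here is sandboxed, and the demo commands are stand-ins for a project's real linting, testing, and type-checking commands.

```python
import subprocess
import sys
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    passed: bool
    log: str


def run_checks(checks: dict[str, list[str]], timeout_s: float = 60.0) -> list[CheckResult]:
    """Run each command and keep its combined output as a log."""
    results = []
    for name, command in checks.items():
        try:
            proc = subprocess.run(
                command, capture_output=True, text=True, timeout=timeout_s
            )
            passed = proc.returncode == 0
            log = proc.stdout + proc.stderr
        except subprocess.TimeoutExpired:
            passed = False
            log = f"timed out after {timeout_s} seconds"
        except OSError as error:
            # Raised when the command cannot be started at all.
            passed = False
            log = f"could not start command: {error}"
        results.append(CheckResult(name, passed, log.strip()))
    return results


if __name__ == "__main__":
    # Stand-in commands; a real project would list its own tools here.
    demo_checks = {
        "passing-check": [sys.executable, "-c", "print('all good')"],
        "failing-check": [sys.executable, "-c", "import sys; print('boom'); sys.exit(1)"],
    }
    for result in run_checks(demo_checks):
        status = "PASS" if result.passed else "FAIL"
        print(f"[{status}] {result.name}: {result.log}")
```

Keeping the raw log next to each pass or fail verdict is what lets a reviewer verify a claimed result instead of taking it on trust.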

Of note is OpenAI's planned $3 billion acquisition of Windsurf, a popular vibe coding application formerly known as Codeium that brings AI-generated code into programmers' existing projects. The acquisition is expected to expand the company's coding programs even further, potentially providing a leg up on competitors like Anthropic. As of June 2025, the sale had not been finalized.

Upon release, Codex was available only to OpenAI's ChatGPT Pro, Team, and Enterprise subscribers. ChatGPT Pro, which costs $200 per month, includes unlimited access to OpenAI's suite of programs, including OpenAI o1, GPT-4o, Advanced Voice, and the advanced o1 pro mode. In June 2025, however, OpenAI extended Codex access to its more affordable $20/month ChatGPT Plus subscription tier.

Security questions emerge

Despite its advantages, the growth of vibe coding also raises serious cybersecurity questions. One trend that has experts worried is vibe hacking, in which hackers use AI to generate malware packages. Although AI programs employ security protocols to prevent this, researchers have found that chatbots are easily jailbroken and can be used to write malware. To put it simply, a byproduct of AI lowering the skill and time thresholds for writing code is that it enables malicious actors to produce malware at unprecedented scales and speeds. These cyberattacks have been a growing problem since WormGPT's debut in 2023. Since then, hackers have used AI to increase the frequency, variety, and sophistication of their cyberattacks. In one study, researchers at security firm Palo Alto Networks created 10,000 malware variants using jailbroken AI programs. As researchers from Ben-Gurion University of the Negev put it, "what was once restricted to state actors or organized crime groups may soon be in the hands of anyone with a laptop."

To the industry's credit, AI companies actively work to disrupt these operations. For instance, OpenAI stated that it disrupted over 20 malicious hacking and disinformation operations that were using its software, while Anthropic has been at the forefront of warning legislators and consumers of the dangers of abusing AI software.

In addition to enabling attackers, experts warn that relying on AI-written code may make users more vulnerable to threats. The reasons for this are complex and range from inferior code quality to vulnerabilities inadvertently reproduced at scale. Contributing to this issue may be the paradoxical reality that, as agentic systems have become more advanced, they are making more mistakes, with new reasoning systems from companies like OpenAI showing significantly higher hallucination rates.

The costs of efficiency

One question surrounding programs like Codex and Claude Code is their effect on job markets. In a 2025 Pew Research Center report, a majority of experts said they believe that AI puts software engineering jobs at risk. Anthropic's CEO Dario Amodei said as much in a May 2025 interview with Axios when he predicted that AI could eliminate half of entry-level white-collar jobs by 2030. In a talk with the Council on Foreign Relations two months earlier, Amodei directly linked this issue to coding, stating AI could write 90% of the world's code by the end of 2025. These trends, which disproportionately affect young workers, come as companies adopt AI at record rates. According to the World Economic Forum's 2025 Future of Jobs Report, 41% of employers intend to reduce their workforce because of AI.

Microsoft exemplifies this move towards AI-automated programming. In April 2025, CEO Satya Nadella stated that nearly a third of Microsoft's code was written by AI, and a month later, Microsoft laid off 3% of its workforce. Software engineers bore a large share of those cuts, making up roughly 40% of the 2,000 layoffs in the company's home state of Washington. Although the layoffs were not expressly linked to AI, many note that the cost-saving measure comes as Microsoft invests a reported $80 billion in AI-related programs in 2025, and as Microsoft CTO Kevin Scott predicts that 95% of coding tasks will be AI-generated by the end of the decade. The irony is difficult to overlook, as the job of one generation of coders may be to build the technology that puts the next generation out of work.