5 Ways The U.S. Military Uses AI In Warfare

Our lives are continually being changed by the advance of AI technology. Some applications of AI are smaller and more subtle, while others represent a paradigm shift (or a potential future paradigm shift) in our use of the software and hardware we're familiar with.

Warfare, too, is being impacted by such developments. Tired SkyNet jokes will inevitably arise like a robot revolution whenever "warfare" and "AI" are mentioned together, but with safe and careful use, AI can provide enormous benefits for forces around the world. From weapon systems to computer systems and from automatic targeting to loading and monitoring, there's vast potential for AI to save military lives, assets, time, and money.

Of the nations that can be expected to invest heavily in military applications of AI, the United States would naturally be high on the list. After all, Brookings reports that a total of $905.5 billion was pumped into military matters in the U.S. in 2023. Part of that dizzying sum is helping to develop some fascinating technology, so let's take a look at Project Maven, piloting systems for drones, and some other ways in which AI is being used by the U.S. military.

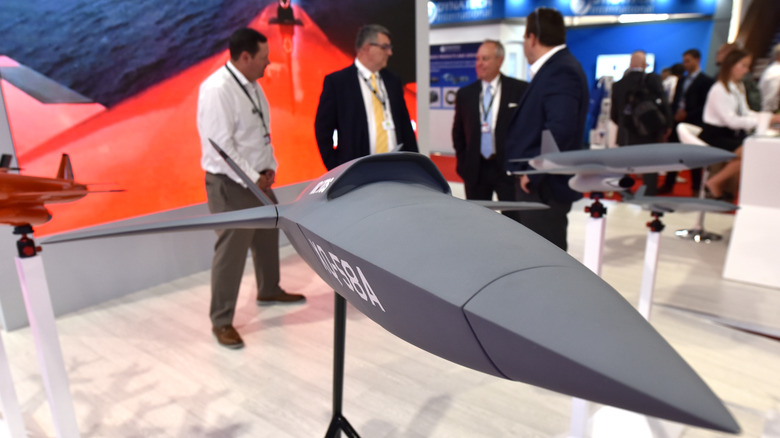

Piloting drones

From the MQ-9 Reaper, the U.S. Air Force's 36-foot-long, AGM-114 Hellfire-equipped UAV, to the Bayraktar TB2s and DJIs wielded by Ukrainian forces in the war against Russia, drones have enormous utility in warfare. By virtue of being smaller than the likes of fighter aircraft, they can access targets that larger hardware cannot. They offer two further boons: the operator isn't sitting in a cockpit, and drones can prove highly effective weapons without necessarily costing outrageous amounts to develop and produce.

Any weapon, though, can only perform at its best when operated as effectively as possible. To help with this, AI has been used by the U.S. to aid in the piloting of drones. In January 2024, 96th Test Wing Operations Commander Colonel Tucker Hamilton explained to Defense News (via Defense One) that previous drone AI could generally only follow narrow commands such as "fly at this throttle setting at this airspeed," but the remarkable XQ-58 represents something very new.

Flying at 652 mph and intended for operations alongside and in support of piloted aircraft, the Kratos XQ-58A Valkyrie can, in essence, make decisions for itself. "We give it an objective, but it decides what throttle setting, what bank angle, what altitude, what dive angle ... to meet that objective," Col. Hamilton went on, noting that this technology is currently being used in simulation conditions as its operators continue to investigate just what it can do.

Locating targets

Firepower alone can't win a conflict. In fact, when poorly implemented, it could prove to be a liability. What operators and commanders need to do is focus all that destructive power where it'll have the most impact. Targeting systems and guided munitions have been growing ever more sophisticated for decades (pictured above is the infrared AIM-9 Sidewinder that debuted in September 1953 and was one of the earliest high-profile examples of the technology), and this has led to the implementation of AI in the process of analyzing and identifying targets.

Bloomberg reports that the U.S. 18th Airborne Corps began using AI software in 2020 to determine the positions of targets. Machine learning advanced at a rapid pace (as machine learning tends to do), with the result that, in February 2024, The Register reported that 85 strikes by the U.S. military had been assisted by AI targeting up to that point in the year.

Organizing an air strike is a carefully monitored process that requires data collection, selection of the appropriate aircraft and munition for the job, and monitoring of the relative locations of allies and opponents. U.S. Central Command CTO Schuyler Moore is quoted by The Register as carefully noting that, even with such attacks, "every step that involves AI has a human checking in at the end." AI may not be making any final decisions, but it can certainly do what it does best: ease the burden of time-consuming tasks in this area of warfare.

Keeping track of soldiers' health

Of course, the military, and warfare itself, isn't about combat alone. The U.S. military faces the same issue that organizations of all sizes and shapes do: the health of its workforce. The need to keep track of it during the COVID-19 pandemic brought about an intriguing and potentially crucial development from the Defense Innovation Unit.

The Unit's job is to determine how and where non-military technology could be adopted and adapted by the Department of Defense for the military's benefit. One such piece of technology is the fitness tracker, such as an Apple Watch, Garmin Venu, or Fitbit. In May 2023, the U.S. Army detailed the breakthrough that its Rapid Assessment of Threat Exposure work had achieved, revealing an AI algorithm was fed a range of data about the pandemic and how it spreads, and this algorithm was then added to otherwise-conventional fitness tracking devices. Jeff Schneider, the Rapid Assessment of Threat Exposure program manager, noted in a press release quoted by the U.S. Army that the wearing of these devices allowed the Department of Defense to "noninvasively monitor a service member's health and provide early alerts to potential infection before it spreads."

The ability to better track sleeping and dietary habits, too, provides both service members and the DOD with a far broader picture of their health. As the technology advances, so will AI's ability to safeguard it.

Detecting and protecting allies

AI targeting systems can be key to the essential difference between safely keeping allies out of the crosshairs of a planned airstrike and making a tragic, horrific mistake. In a potentially fraught position on the ground, it can be incredibly difficult to distinguish friend from foe, and the amount of information that has to be parsed during a rescue mission can be overwhelming.

However, if there's one thing that computers are great at, it's tackling a lot of information at once. Joey Temple, senior targeting officer with the 18th Airborne Corps, was surely used to having to do just that, and he was very unsure of AI's capacity to do so, Bloomberg reports. However, while using the Maven Smart System during his part in supporting the withdrawal from Afghanistan in August 2021, Temple was stunned by how well the machine could display all the necessary information during the frantic operation. It tracked the positions and movements of so many different people and vehicles involved while keeping everything legible. "I could see General [Chris] Donahue walking around," he told the outlet, conceding that he "became a believer" in the capacities of Maven after the experience.

The Maven Smart System, and the wider Project Maven itself, is a crucial element of the nation's approach to AI in warfare, both past and future. Finally, we'll take a look at the project and the attitudes guiding the U.S. military's wider work with AI.

Project Maven

In April 2017, the Deputy Secretary of Defense issued a memorandum discussing the need for what was deemed an Algorithmic Warfare Cross-Functional Team. This being a bit of a mouthful, the name of Project Maven came to be associated with the team instead.

Maven is the United States' ever-developing data analysis system, the key to many of the innovations the nation's military is working on, such as targeting systems for weaponry and detection and tracking software. According to Bloomberg, Maven can now use location tags from social media as one element of its data gathering and analysis process. Then there's the U.S. Army's March 2024 research into AI bots giving battlefield orders. OpenAI's GPT-4 Turbo and GPT-4 Vision were used to advise a player on how to defeat an enemy force in StarCraft II. They succeeded in just that, and there's the rub.

So far, it seems, AI has been taught to play a part in warfare but a limited one, without influence on critical decisions where the stakes are high. Those stakes bring the ethical concerns surrounding AI as a whole into sharper focus. As former Air Force General Jack Shanahan, once in charge of the emerging Maven, put it to Bloomberg, "If it is used in an automated mode and something goes horribly wrong, people will not use it for a long time." The challenge, then, is regulating use of all these emerging facets of AI warfare as they develop, utilizing their many advantages safely and responsibly.