How Google Wants To Expose Hyperrealistic AI-Generated Art

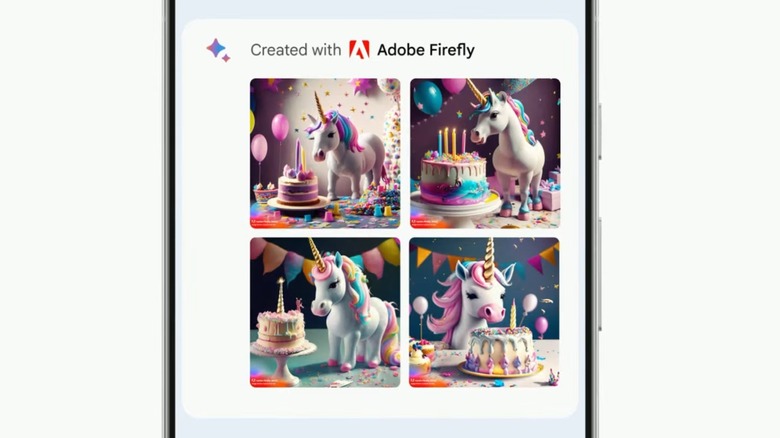

At Google I/O 2023, the tech giant announced several new improvements to its artificial intelligence technologies. Concurrently, it focused on the responsible use of AI, with easy ways to distinguish AI-generated art. Every image that is artificially generated using Google's tools will feature a deeply etched watermark along with metadata used to identify synthetic images. This will apply to Google's own chatbot Bard, Google Workspace, and services such as Adobe Firefly that use AI as a base for image creation.

AI is amping up ways to declutter our workflows and help us in our creative pursuits. Google is one of the companies at the forefront of this digital renaissance and recently announced several improvements coming to the Photos app, including a much-improved version of Magic Eraser called Magic Editor, which can artificially generate sections that weren't initially captured in a photo. However, AI-generated hyperrealistic images also have ethical implications and may pose a substantial threat.

At the Google I/O 2023 developers conference, the company emphasized the importance of separating AI-generated media from photos of actual objects to prevent unfortunate scenarios such as misuse, spreading of misinformation, or identity theft.

Can primitive tools combat sophisticated AI?

Google says it is contributing to the safe and responsible use of AI and will ensure every image generated using its AI tools will use watermarking to help identify unreal photos. Google CEO Sundar Pichai said the company is building its AI image generation models to include watermarking right from the start. Without elaborating on the approach, Pichai also said watermarks will survive "modest editing."

At the same time, Google's AI models will embed information about an image being AI-generated into its metadata, the data stored inside an image file that holds information such as camera settings, location, and color profiles.

While these approaches may be presented as effective, there's no guarantee that the watermark cannot simply be cropped out of the AI-generated image. Similarly, countless tools can strip metadata from image files, so Google's approach may not be fruitful unless it introduces a new metadata type that cannot be removed.
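To illustrate how little effort metadata removal can take, here is a minimal Python sketch. It is not Google's scheme, whose format was not described. As a stand-in, it assumes a PNG file whose "AI-generated" label lives in a standard `tEXt` chunk. The helper names and the label text are hypothetical.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
TEXT_CHUNK_TYPES = {b"tEXt", b"zTXt", b"iTXt"}  # standard PNG textual metadata chunks


def make_chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Serialize one PNG chunk: length, type, data, CRC over type + data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def iter_chunks(png: bytes):
    """Yield (type, data) for every chunk in a PNG byte string."""
    if not png.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos = len(PNG_SIGNATURE)
    while pos < len(png):
        (length,) = struct.unpack(">I", png[pos:pos + 4])
        chunk_type = png[pos + 4:pos + 8]
        data = png[pos + 8:pos + 8 + length]
        yield chunk_type, data
        pos += 12 + length  # 4 length + 4 type + data + 4 CRC


def strip_text_metadata(png: bytes) -> bytes:
    """Return a copy of the PNG with all textual metadata chunks dropped."""
    kept = [make_chunk(t, d) for t, d in iter_chunks(png) if t not in TEXT_CHUNK_TYPES]
    return PNG_SIGNATURE + b"".join(kept)


def make_tagged_png() -> bytes:
    """Build a 1x1 grayscale PNG carrying a hypothetical 'AI-generated' label."""
    ihdr = struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0)  # width, height, depth, color type, ...
    idat = zlib.compress(b"\x00\x00")  # one filter byte plus one black pixel
    label = b"Comment\x00hypothetical label: AI-generated"  # assumed tag, not a real standard
    return (PNG_SIGNATURE + make_chunk(b"IHDR", ihdr) + make_chunk(b"tEXt", label)
            + make_chunk(b"IDAT", idat) + make_chunk(b"IEND", b""))


if __name__ == "__main__":
    tagged = make_tagged_png()
    cleaned = strip_text_metadata(tagged)
    print([t.decode() for t, _ in iter_chunks(tagged)])
    print([t.decode() for t, _ in iter_chunks(cleaned)])
```

The pixel data is untouched and the label is gone, which is why a tag stored as ordinary, removable metadata offers limited protection on its own.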

It remains to be seen how effective these techniques are in practice, but it's good to see big tech companies stir up conversations about the safe use of AI.