Watch the video Mobileye says casts doubt on every other autonomous car project

Intel's autonomous car division, Mobileye, has released a new video showing one of its self-driving cars in action: an unedited 40-minute stretch of the vehicle navigating a challenging urban environment. In the process, Mobileye – which was acquired by Intel in early 2017, in a deal worth over $15 billion – raises questions about the path to autonomous driving taken by most other projects, Tesla and Waymo included.

Driverless vehicle demos aren't uncommon, of course. One of the easiest ways to drum up hype about a Level 4 or Level 5 self-driving car project is to stick a camera inside it – and, optionally, a few journalists – and send it off on a city route.

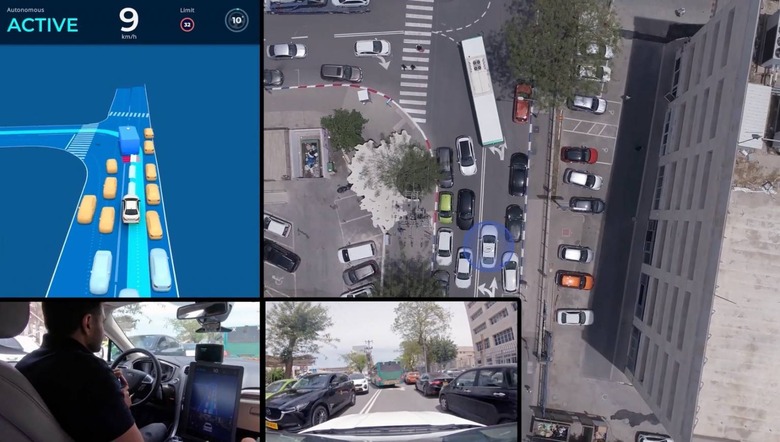

Neither is it the first time we've seen a Mobileye-powered prototype in action, and indeed we had a front-row seat to the tech at work in a Delphi prototype three years ago. Still, the company's latest footage goes further than most. The video, Mobileye says, is unedited, and there's only one interruption: the point at which the drone the company had following the autonomous vehicle needed a new battery swapped in.

What's interesting – and unusual – about Mobileye's approach here is that the whole 40-minute demo uses, in effect, only half of the company's autonomous system. In this case, it's a camera vision system, with eight long-range cameras and four parking cameras. The driverless computer in control of this particular test vehicle doesn't have access to any radar or LIDAR sensors.

Ironically – given Tesla opted to stop using Mobileye technology back in 2016 and switch to its own system as it developed Autopilot – this camera-based autonomous driving is something Elon Musk has been a vocal proponent of. The Tesla CEO has been dismissive of LIDAR, calling it unnecessary and too expensive, and focusing on vision-based technologies instead.

What sets the two approaches apart, however, is that unlike Tesla, Mobileye is saying camera-based autonomy is essential but also insufficient. Vision-based driverless systems aren't enough on their own, the Intel-owned firm argues, because while they're safer than a human driver, the margin over human proficiency still isn't wide enough.

Mobileye puts the emphasis not on technology alone, but on the meeting point between autonomy and public perception of autonomy. "From the societal point of view, if all cars on the road were 10x better on [mean-time-between-failures versus human drivers] it would be a huge achievement," Amnon Shashua, president and CEO of Mobileye, says, "but from the perspective of an operator of a fleet, an accident occurring every day is an unbearable result both financially and publicly."

The reality, Shashua suggests, is that for autonomous vehicles to scale acceptably, they'll need to be at least 1,000 times better than humans at that metric. That means one accident every 500 million miles (though of course that assumes a single vehicle, not a driverless fleet).
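To see why fleet operators care about the gap between 10x and 1,000x, here is a rough back-of-the-envelope sketch in Python. The human baseline of 500,000 miles between accidents is only inferred from the figures above (500 million divided by 1,000), and the fleet size and daily mileage are hypothetical numbers picked purely for illustration, not anything Mobileye has published.

```python
# Figures from the article: a 1,000x target works out to one accident
# every 500 million miles.
TARGET_MILES_BETWEEN_FAILURES = 500_000_000
TARGET_IMPROVEMENT = 1_000

# Implied human baseline (an inference from the two figures above,
# not a number quoted by Mobileye).
human_miles_between_failures = TARGET_MILES_BETWEEN_FAILURES / TARGET_IMPROVEMENT


def days_between_fleet_accidents(miles_between_failures, fleet_size, miles_per_car_per_day):
    """Expected days between accidents across a whole fleet."""
    fleet_miles_per_day = fleet_size * miles_per_car_per_day
    return miles_between_failures / fleet_miles_per_day


# Hypothetical fleet, for illustration only.
FLEET_SIZE = 100_000
MILES_PER_CAR_PER_DAY = 50

for factor in (10, 1_000):
    mtbf = human_miles_between_failures * factor
    days = days_between_fleet_accidents(mtbf, FLEET_SIZE, MILES_PER_CAR_PER_DAY)
    print(f"{factor}x human: one fleet accident every {days:g} days")
```

With these made-up fleet numbers, a 10x improvement still works out to roughly one accident a day across the fleet, while 1,000x stretches that to roughly one every 100 days.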

"To achieve such an ambitious MTBF for the perception system necessitates introducing redundancies — specifically system redundancies, as opposed to sensor redundancies within the system," he explains. "This is like having both iOS and Android smartphones in my pocket and asking myself: What is the probability that they both crash simultaneously? By all likelihood it is the product of the probabilities that each device crashes on its own."
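Shashua's two-smartphone argument boils down to multiplying probabilities, which can be sketched in a few lines of Python. The failure rates below are hypothetical placeholders, and the multiplication only holds if the two systems fail independently of each other; that independence is an assumption of this sketch, and anything that trips up both systems at once would make the real figure worse.

```python
def joint_failure_probability(p_system_a, p_system_b):
    """Probability that both systems fail at the same time.

    ASSUMES the two failures are statistically independent, which is
    what allows the individual probabilities to be multiplied.
    """
    return p_system_a * p_system_b


# Hypothetical failure probabilities over some fixed stretch of driving.
p_system_a = 1e-4
p_system_b = 1e-4

p_both = joint_failure_probability(p_system_a, p_system_b)
print(f"one system alone: {p_system_a:.0e}, both at once: {p_both:.0e}")
```

The point of the sketch is the scale of the drop: two systems that are each only modestly reliable can, if they really are independent, combine into something far more reliable than either could be alone.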

Sensor fusion has become the goal of many autonomous car projects, where data from multiple sources – cameras, radar, LIDAR, and others – are blended into a single set to which the driverless algorithms are applied. Redundancy in that case typically means having a second array of computing hardware, and sometimes – though much less commonly – a second set of some of the sensors. That way, so the theory goes, if one computer goes down, the second can step in. It also means the vehicle's maneuvers can be decided upon by two systems separately, and only given the green light to go ahead if both computers agree.

Mobileye's approach, though, intentionally avoids sensor fusion. Instead, the company has two separate redundant subsystems, one using camera vision and another with radar/LIDAR. "Just like with the two smartphones," Shashua says, "the probability of both systems experiencing perception failure at the same time drops dramatically."

Unsurprisingly, Mobileye argues that its approach is trickier – but has more safety value – than that of rivals using sensor fusion. The reality, of course, is that there's still no commercially available self-driving car on the market, and that while there are multiple projects promising to change that, it's not entirely clear when there'll be a launch at scale rather than something little more than a glorified demo. At that point, we – and regulators – will face the question of just how "perfect" we expect our robot drivers to be, in comparison to flawed but familiar humans at the wheel.