Project Soli Could Be Google's Riposte To Apple's Digital Crown

Wearables may bring your digital life to your wrist, but that doesn't make it any easier to interact with, a problem Google believes it may have solved. The handiwork of Google's ATAP team, the internal skunkworks cooking up new hardware and software like Jacquard and Ara, Project Soli is the first-ever radar chip capable of tracking gestures while also being small enough to fit into a smartwatch or a phone. While the project may only be eight months old, it's already poised to dramatically shake up how we use small-screen devices.

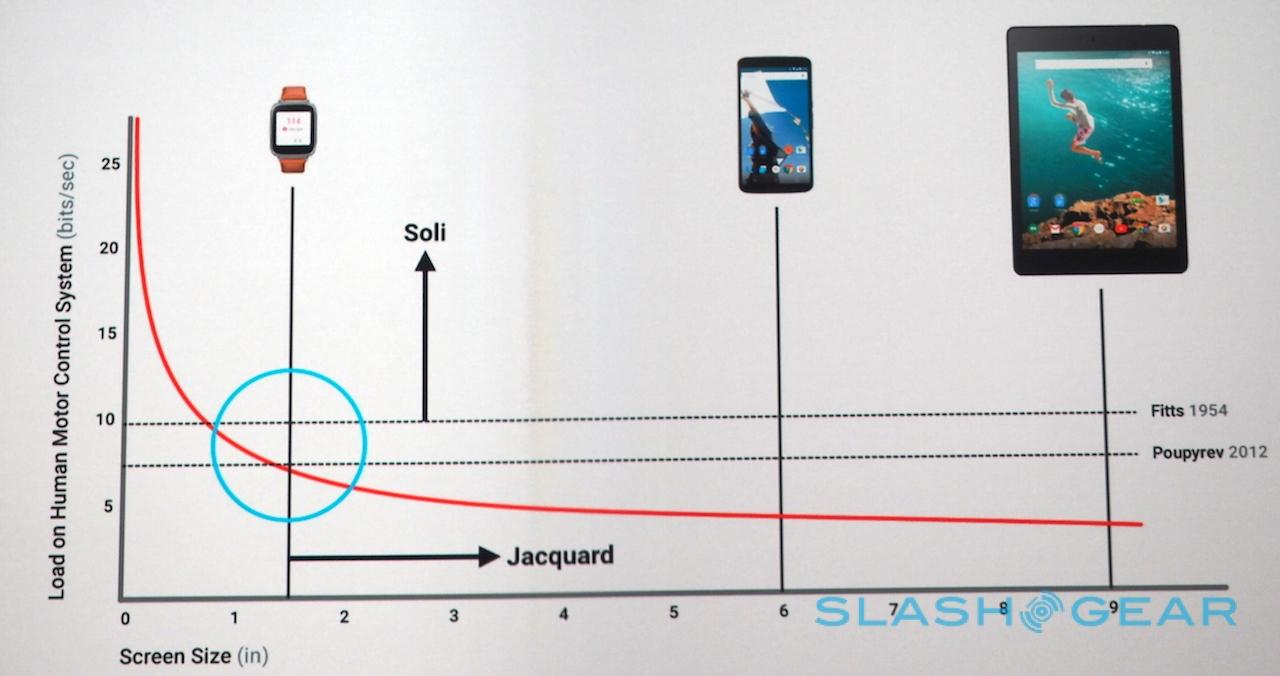

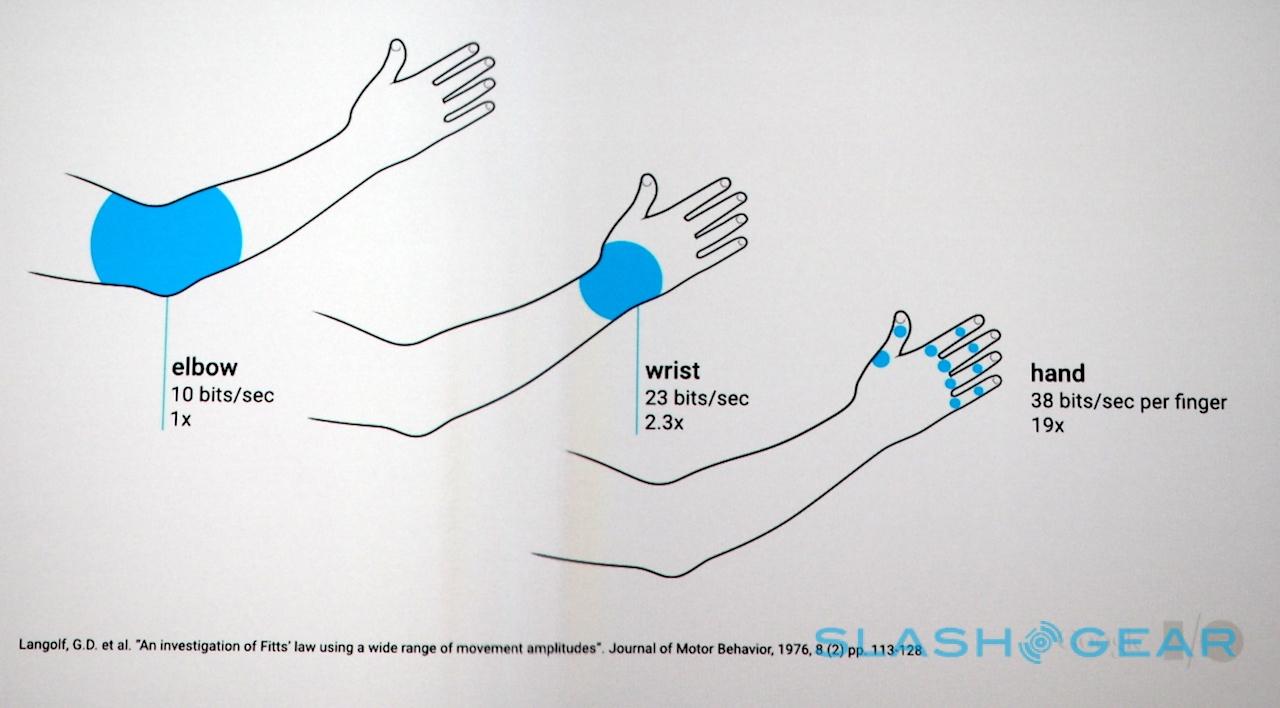

"Our hand is an amazing instrument, it's very fast and precise, particularly when we're using tools which take advantage of our fingers," Ivan Poupyrev of the ATAP team explained at Google I/O 2015 this morning. "If you measure the bandwidth from our cortex to the tip of our fingers, it's 20x that to our elbow."

That proficiency hasn't been matched with interfaces that are similarly refined, Poupyrev argued, leaving gadgets like smartwatches difficult to control.

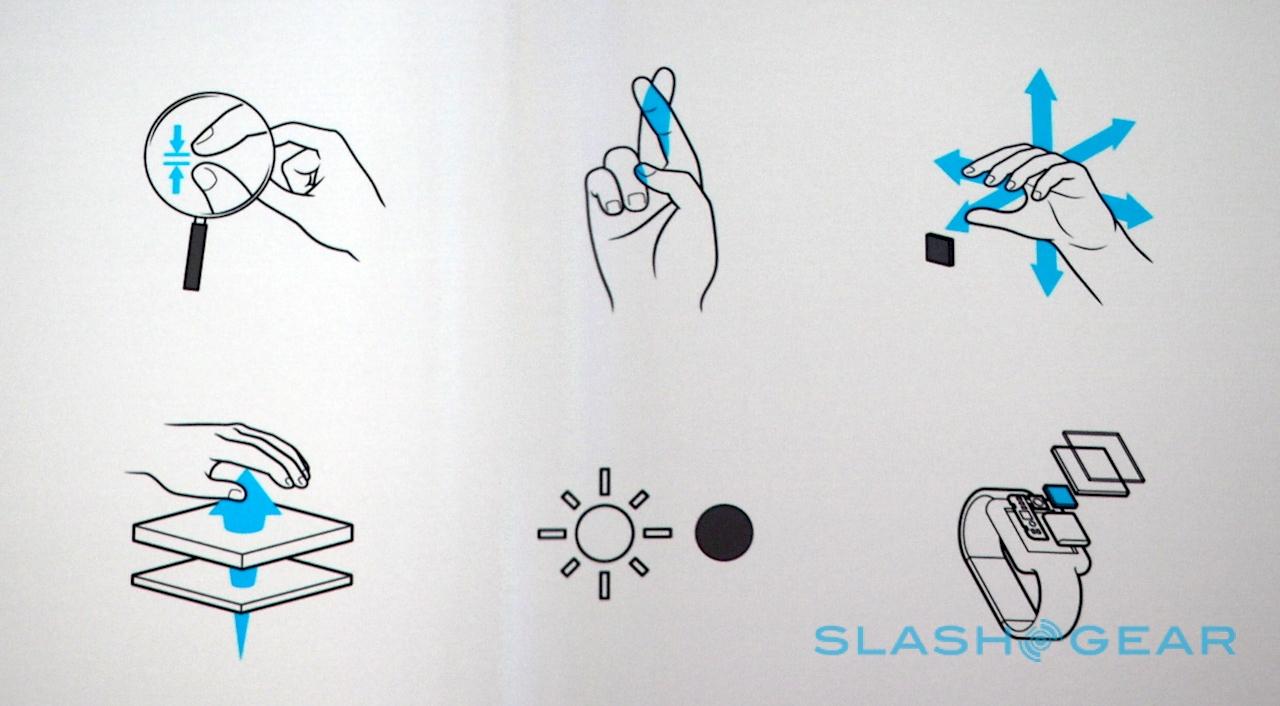

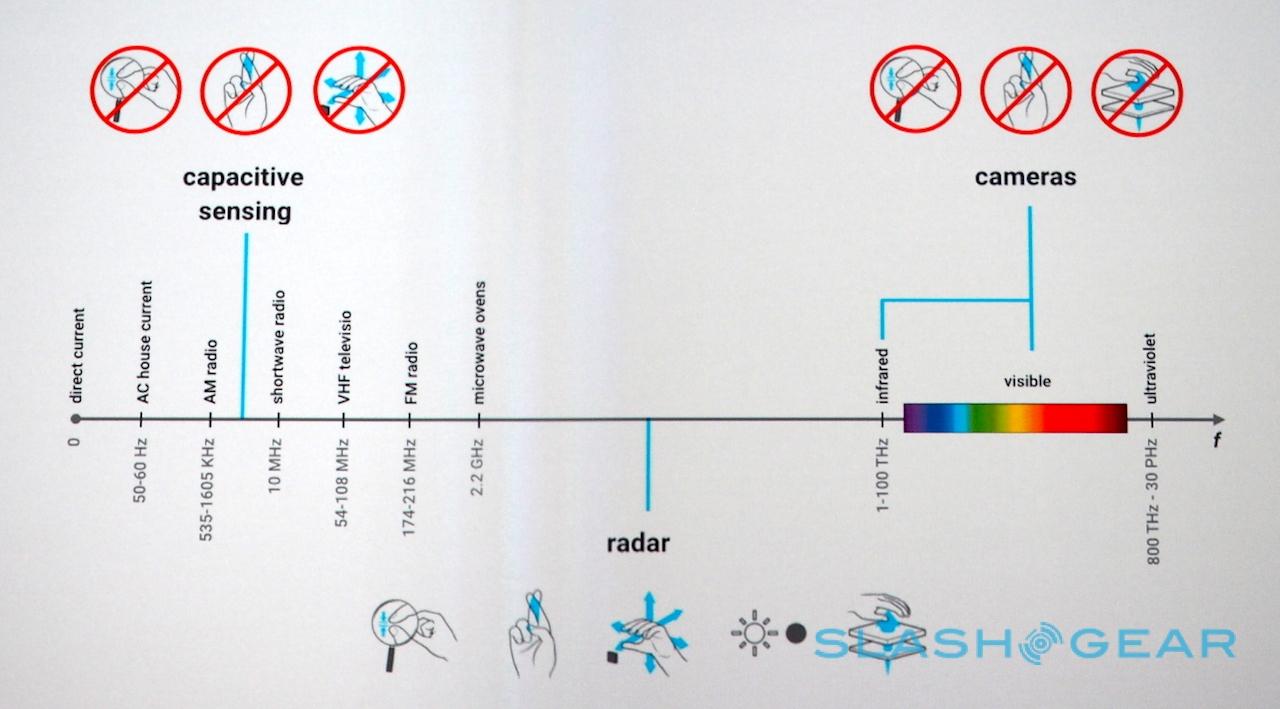

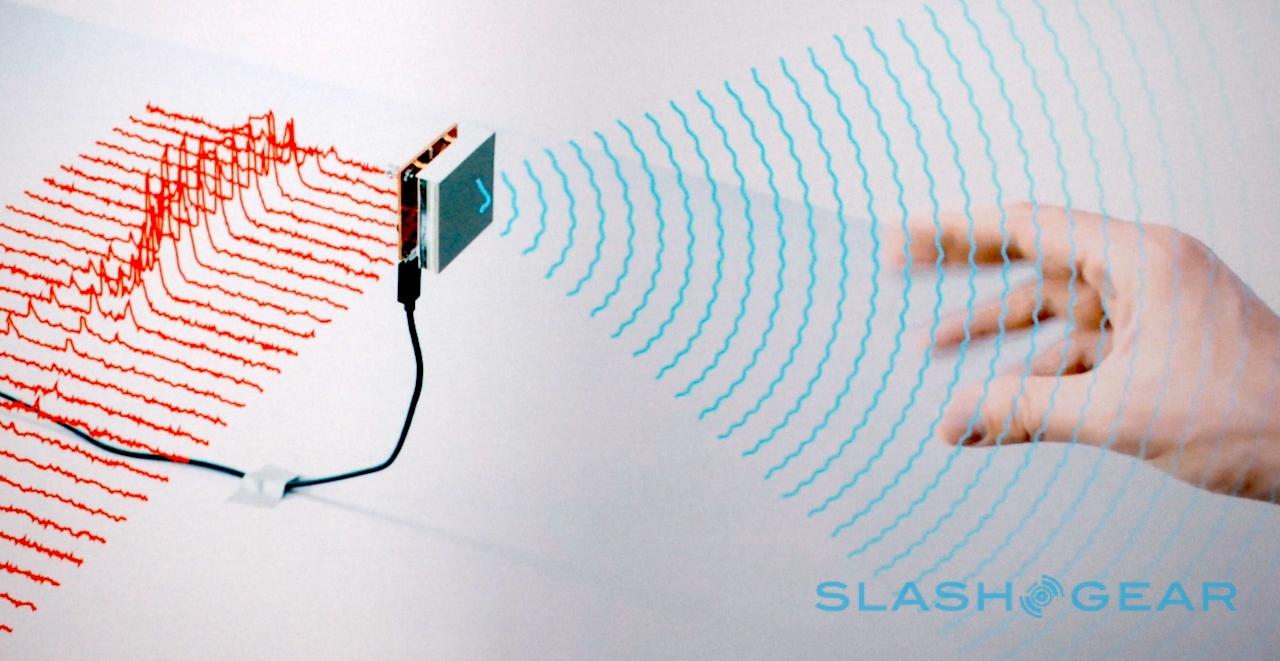

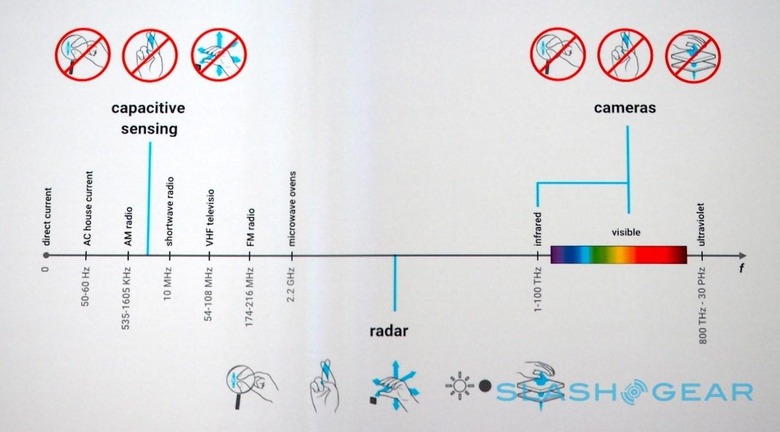

The answer might be Project Soli. ATAP considered all the potential ways of tracking movement, dismissing capacitive options because they couldn't see overlapping fingers, and visible light methods because they were unable to see through other substances. Instead, the researchers turned to radar.

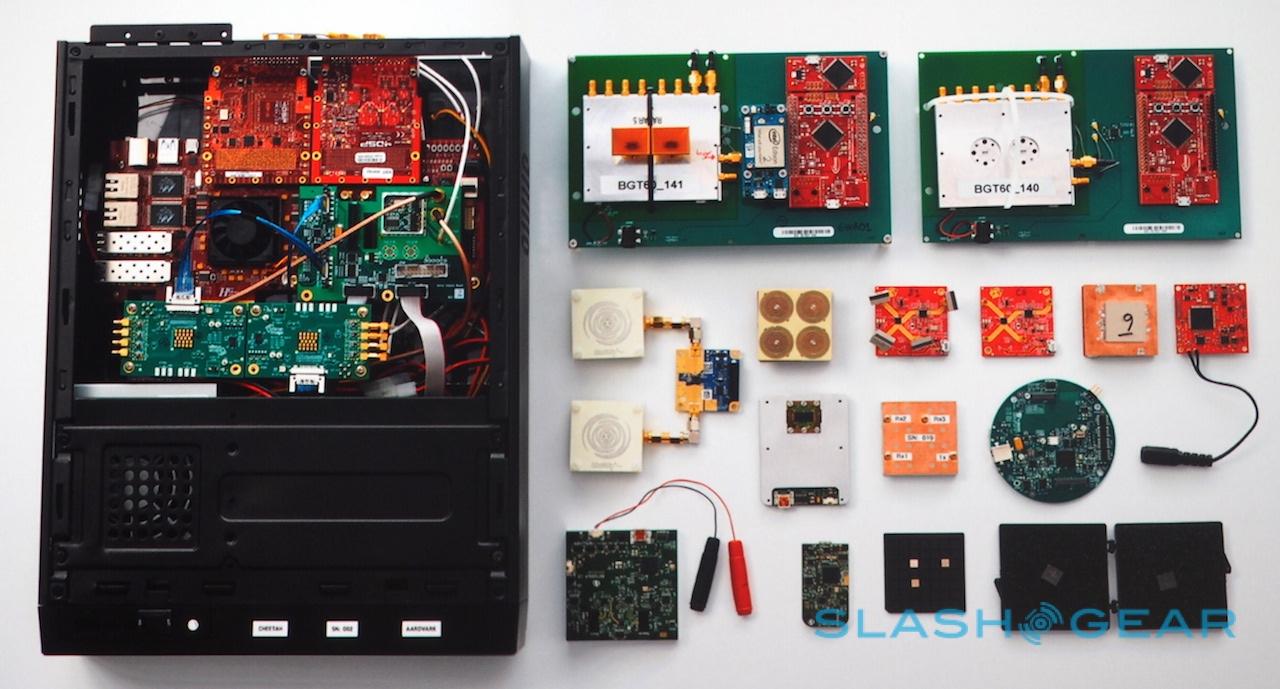

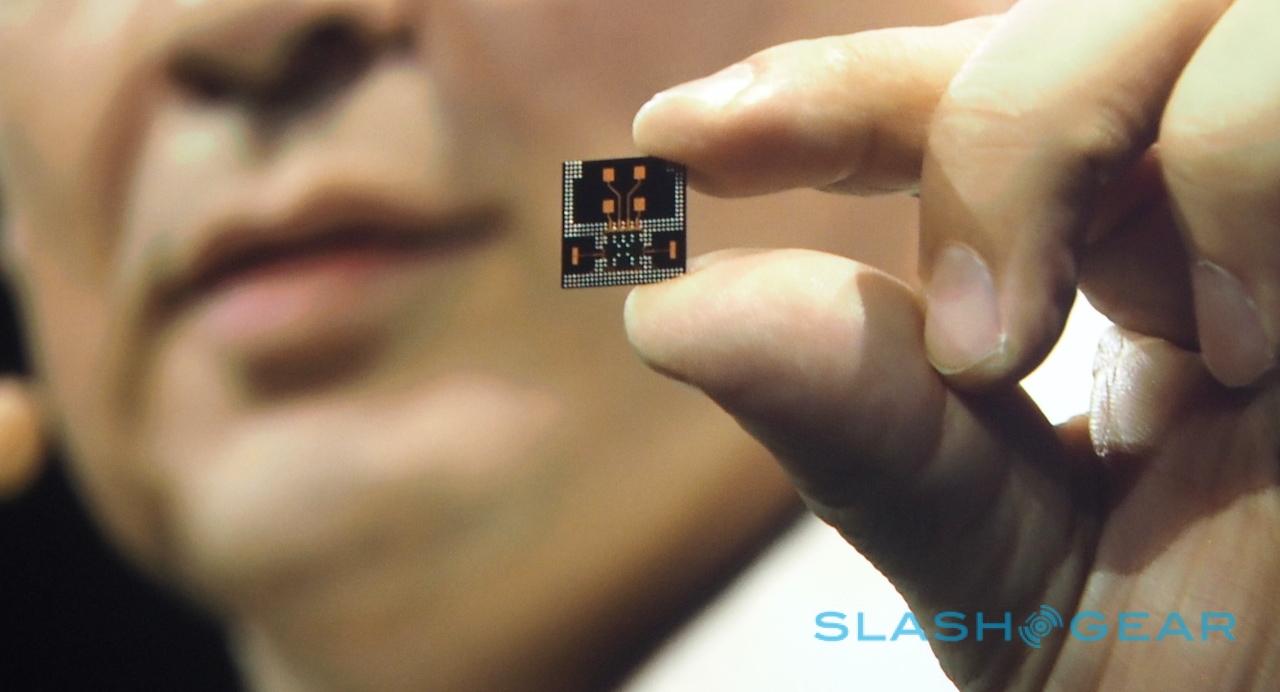

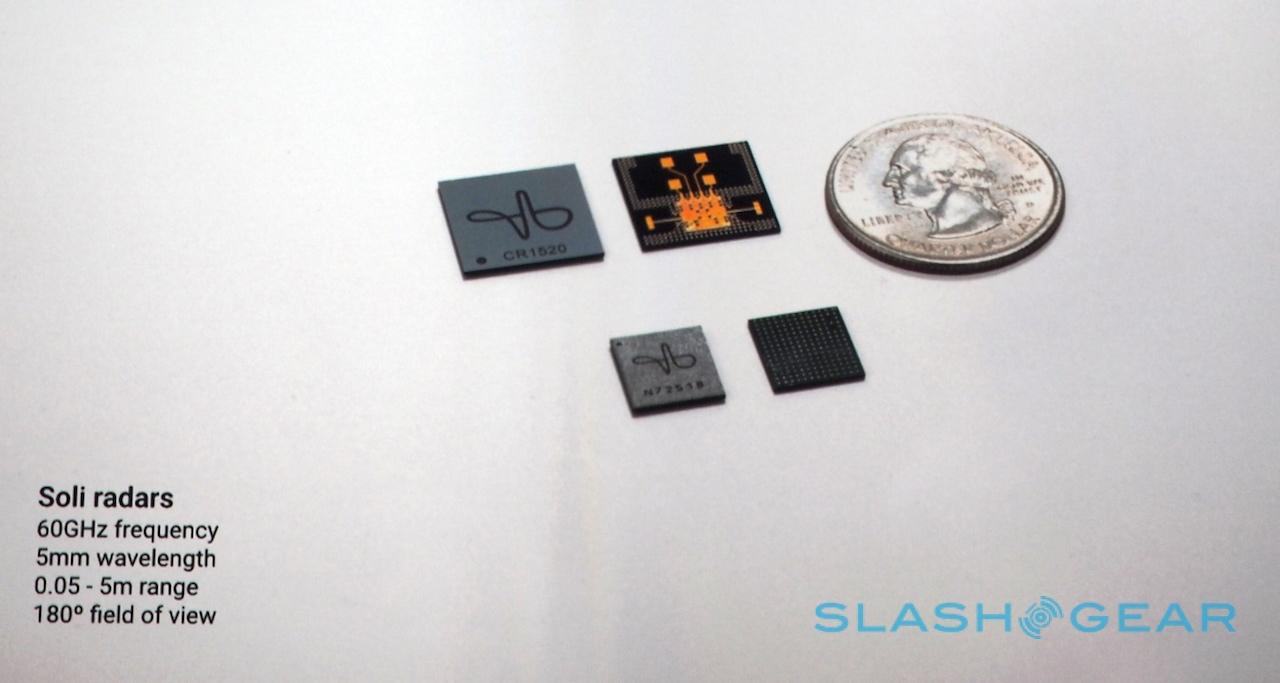

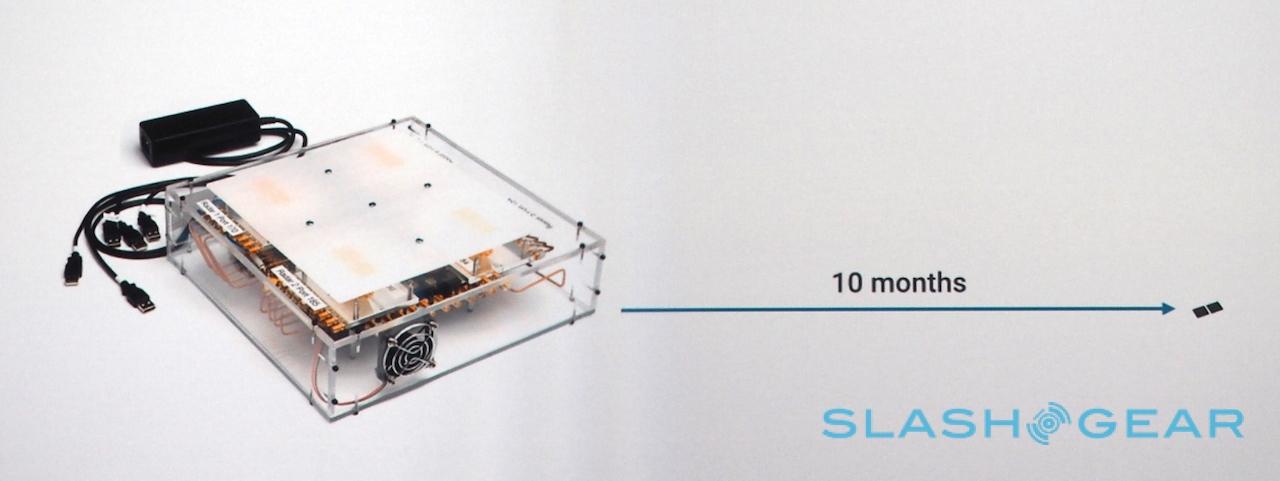

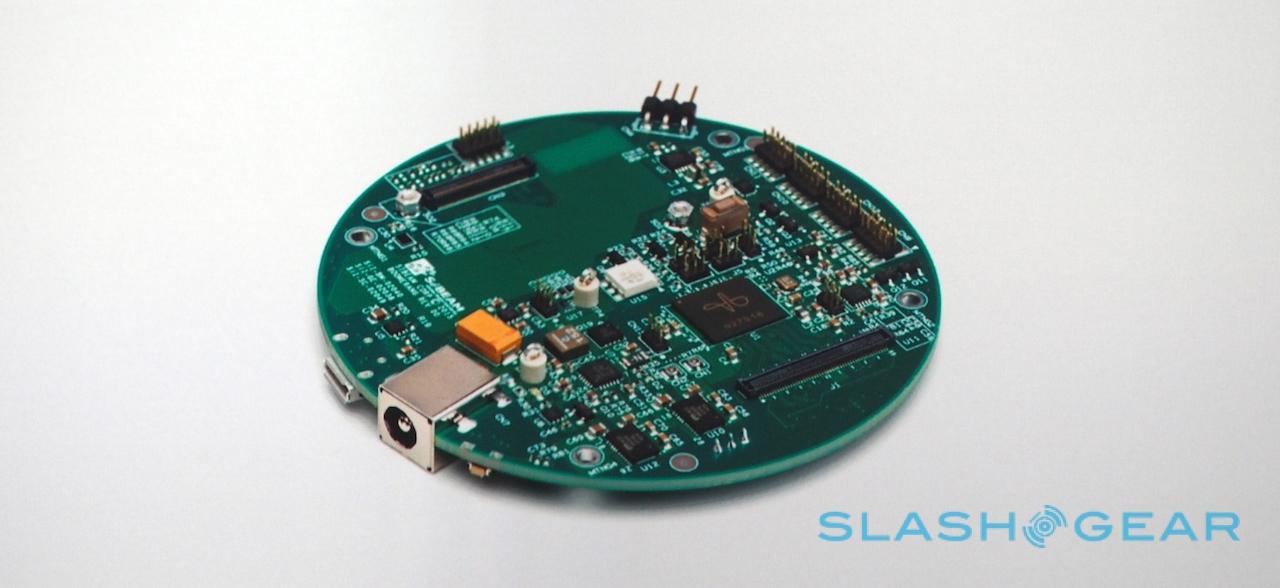

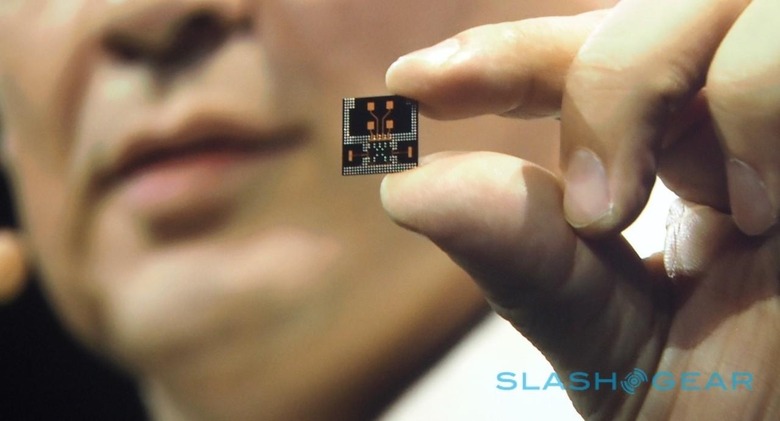

Not just any radar, mind. ATAP cooked up a special system of its own design, managing to shrink it from the chunky original prototype through to a tiny component sized to suit a wearable.

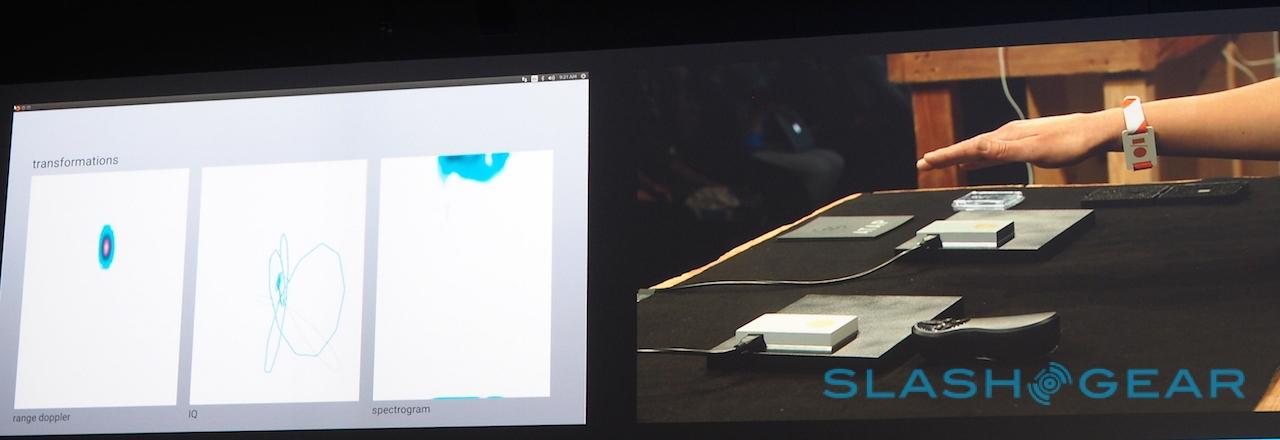

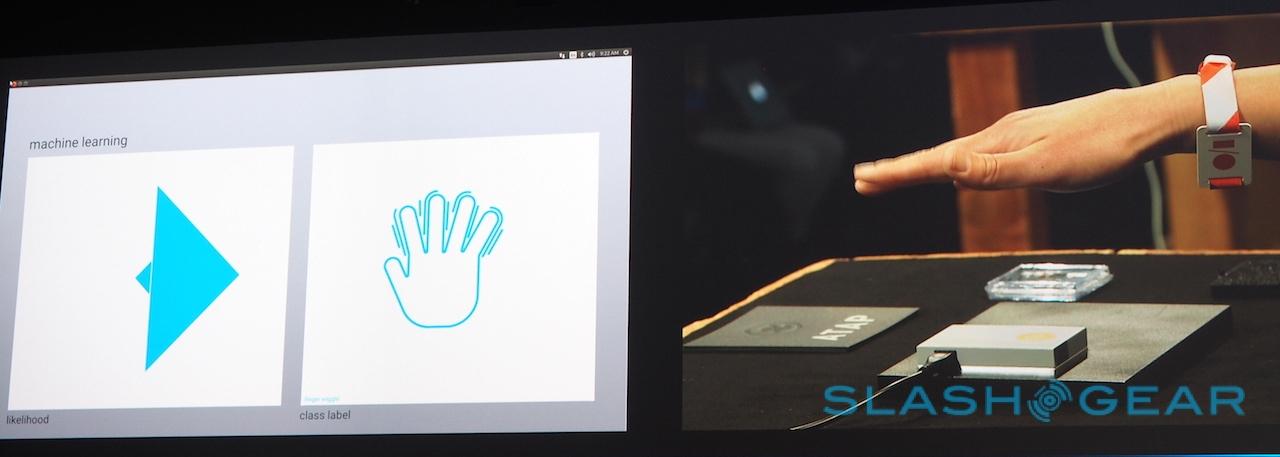

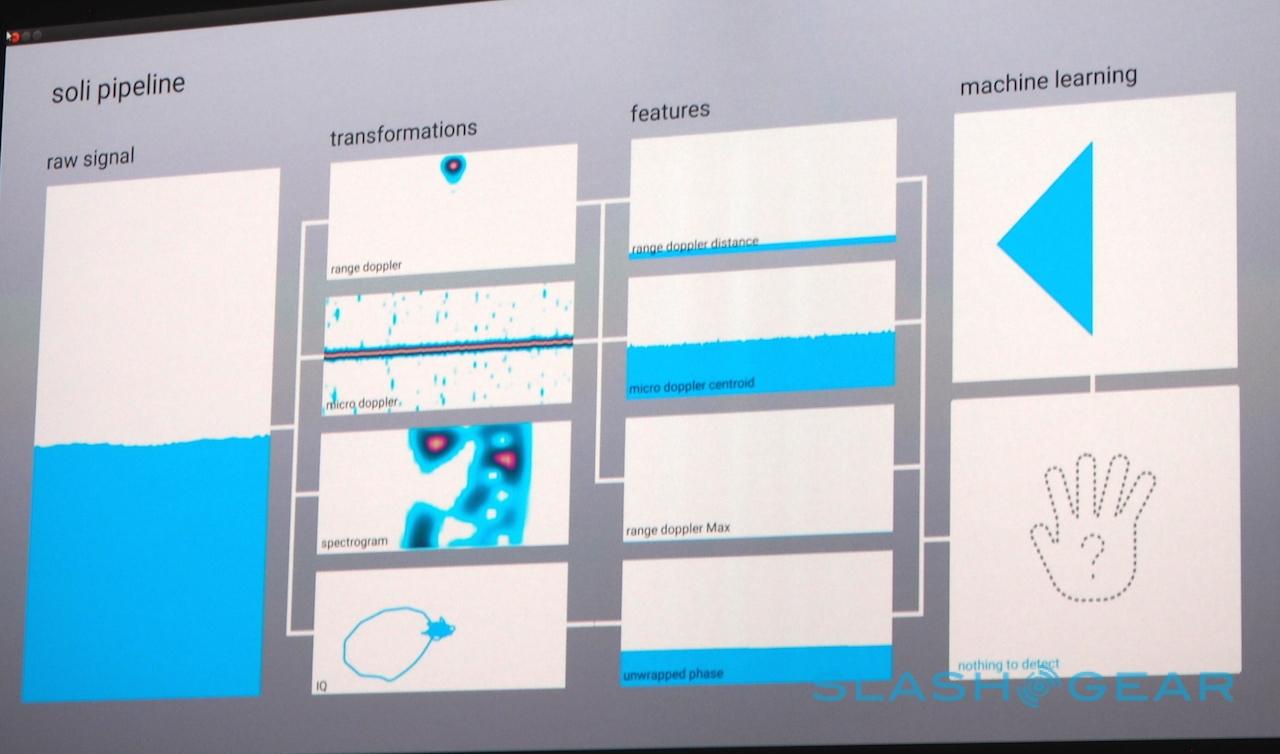

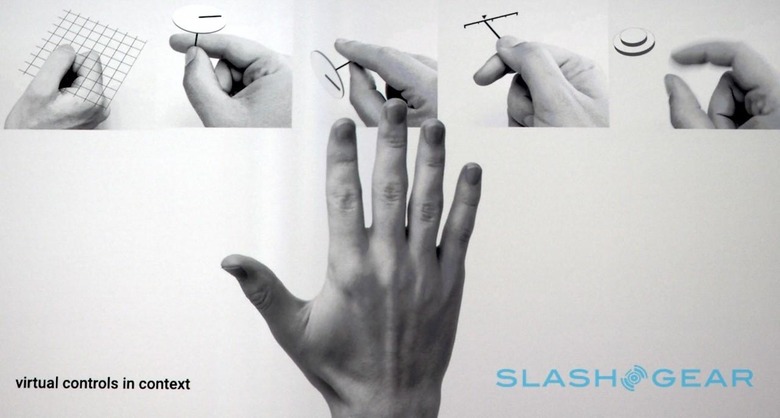

Hold your hand over the top, and it can not only track its movement but identify individual fingers, or spot when you're forming a fist. Google uses a variety of measurements from the sensor, all fed into machine learning algorithms that figure out what gestures are being made.

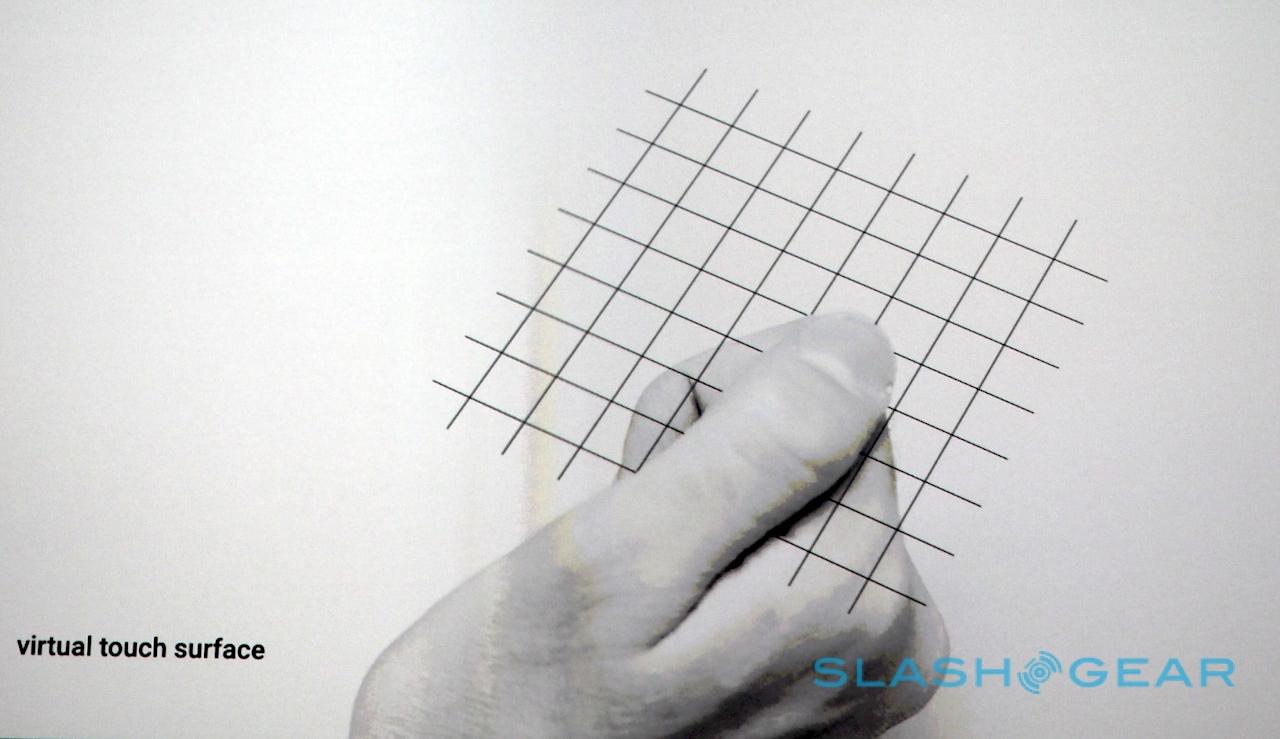

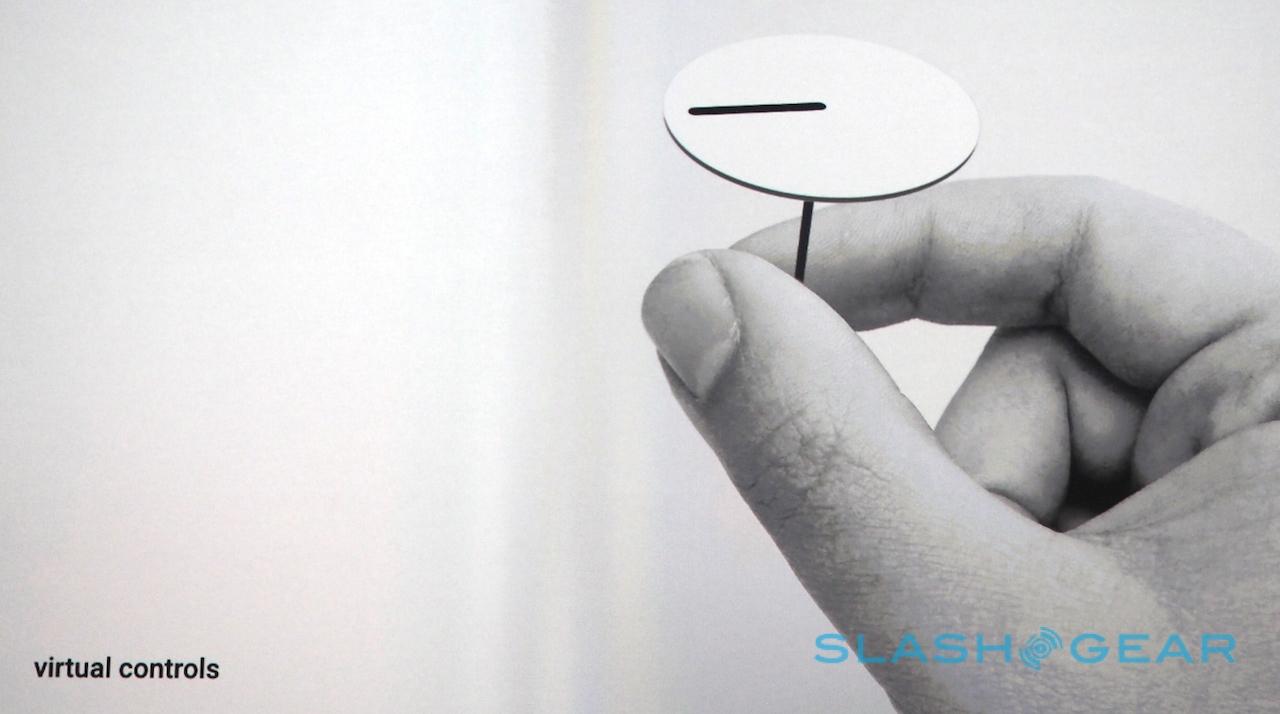

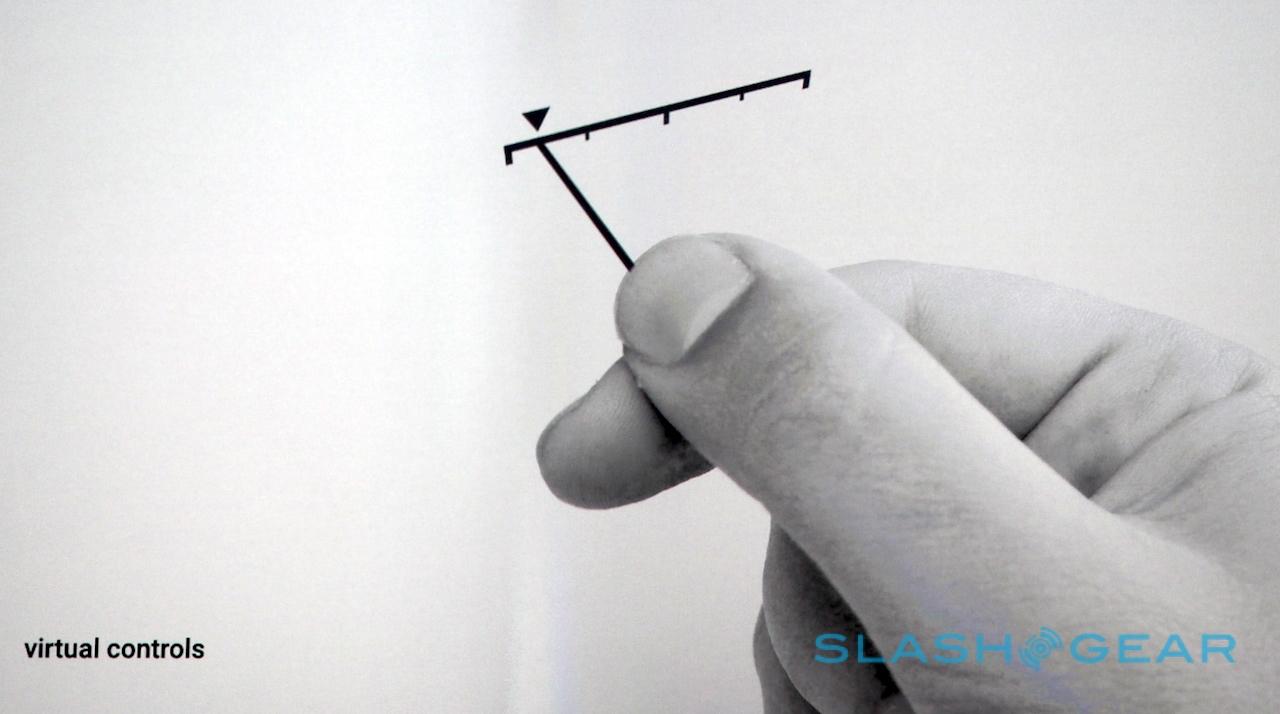

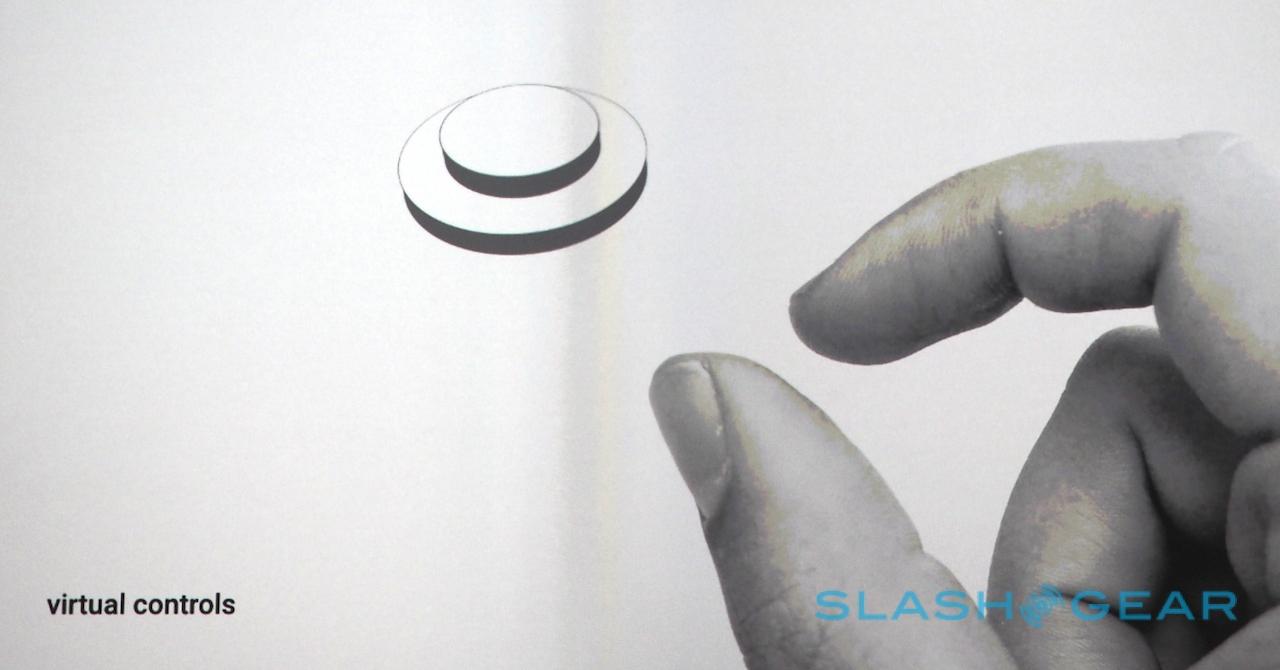

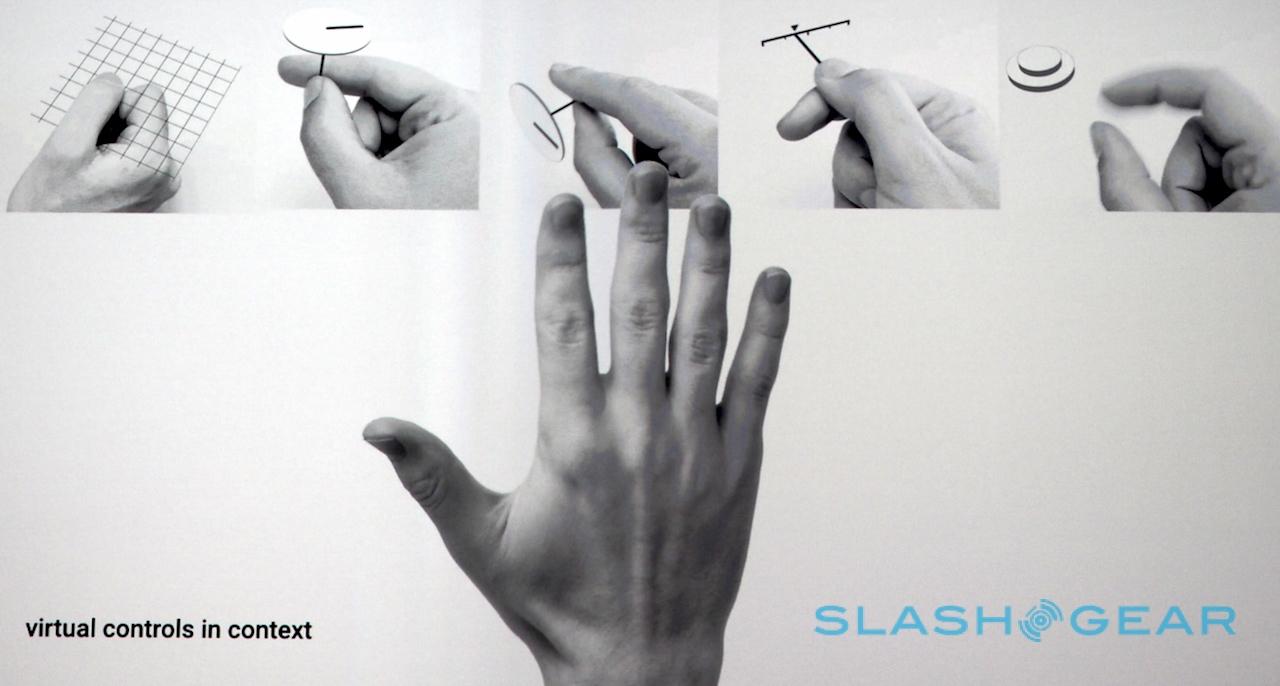

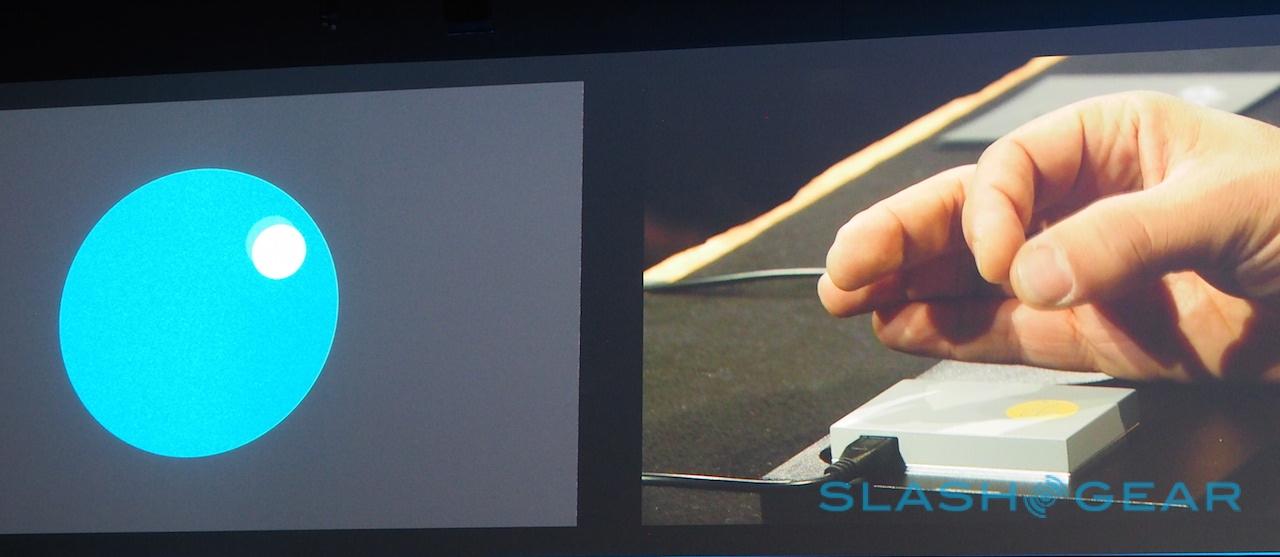

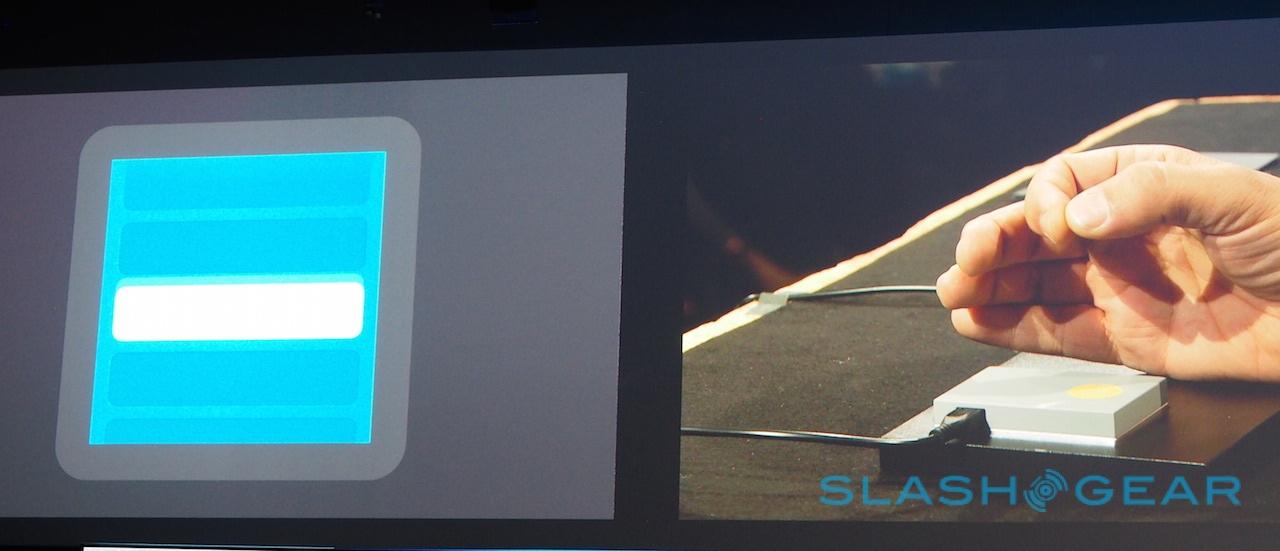

The possibilities are huge. Rubbing his fingertips together, Poupyrev showed how you could twiddle a virtual dial with incredible precision, or push a virtual slider. Tapping his fingertips together, he pushed an invisible button.

It gets even more interesting when you combine the measurements. Setting the time on a conceptual smartwatch UI, Poupyrev adjusted the hour by turning the virtual dial with his fingers close to the sensor; making the same gesture with his hand held higher up adjusted the minutes instead.
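To make that idea concrete, here is a minimal sketch of how a dial gesture and a hand-height reading might be combined to pick which field of the time is adjusted. It is purely illustrative: Google has not published the Soli API, so the function name, the height threshold, and the degrees-per-step value below are all assumptions rather than anything shown in the demo.

```python
from dataclasses import dataclass

# Hypothetical values, not taken from the Soli demo.
NEAR_FAR_THRESHOLD_CM = 5.0   # below this, the hand counts as "close"
DEGREES_PER_STEP = 30.0       # dial rotation needed for one unit of change


@dataclass
class WatchTime:
    hour: int
    minute: int


def apply_dial_gesture(time: WatchTime, rotation_deg: float,
                       hand_height_cm: float) -> WatchTime:
    """Return a new time after a virtual-dial turn.

    A turn made close to the sensor changes the hour; the same turn
    made higher up changes the minutes.
    """
    # Convert rotation into whole steps (truncates toward zero).
    steps = int(rotation_deg / DEGREES_PER_STEP)
    if hand_height_cm < NEAR_FAR_THRESHOLD_CM:
        return WatchTime((time.hour + steps) % 24, time.minute)
    return WatchTime(time.hour, (time.minute + steps) % 60)
```

The point of the sketch is that a second measurement (height) acts as a mode switch for the first (rotation), so one gesture can drive two controls without any on-screen button.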

It's not the first attempt we've seen at giving more precise control over a small-form-factor interface. Apple Watch's Digital Crown is a good example: the wearable has a touchscreen, and can thus be scrolled with taps and swipes, but twiddling the wheel on the side keeps the display clear so that more information remains visible.

Project Soli opens the door to far greater flexibility than just a scroll, however. Poupyrev said that, connected to a computer, Soli could manage a frame rate as high as 10,000 fps, though in mobile implementations it's more likely to be around 60 fps. With a chip set into a demo station table, I was able to manipulate an on-screen digital shape simply by moving my fingers and palm, switching between various directions and orientations in a way that a physical control simply couldn't accommodate.

Combine it with sensors woven into fabric, so that your watch interface runs up your arm, around its strap, or even into the furniture you're sitting on, and you can see why ATAP's Regina Dugan is excited.

"We got a little frustrated by the ever shrinking screen size and user interactions," the skunkworks lead had explained in her opening remarks today. "As the screen size shrinks to the point where we can put it on our body, we're reaching the limit of how we can interact with it."

Google's next step is a developer board, which ATAP expects to see released later this year along with the API. When it might go from there to showing up in wearables and phones is unclear, but even at this stage it's shaping up to be a potential game-changer.