Google Glass In Focus: UI, Apps & More

You've seen the Glass concept videos, you've read the breathless hands-on reports, but how exactly is Google's augmented reality system going to work? The search giant's Google X Lab team has been coy on specifics so far, with little in the way of technical insight as to the systems responsible for keeping the headset running. Thanks to a source close to the Glass project, though, we're excited to give you some insight into what magic actually happens inside that wearable eyepiece, what that UI looks like, and how the innovative functionality will work, both locally and in the cloud.

Google knows smartphones: they're familiar territory for the Android team, so unsurprisingly Glass builds on top of that technology. Inside the colorful casing there's Android 4.0 running on what's believed to be a dual-core OMAP processor. This isn't quite a smartphone – there's WiFi and Bluetooth, along with GPS, but no cellular radio – but the familiar sensors are present, including a gyroscope and an accelerometer to keep track of which way the wearer is facing and what angle their head is at.

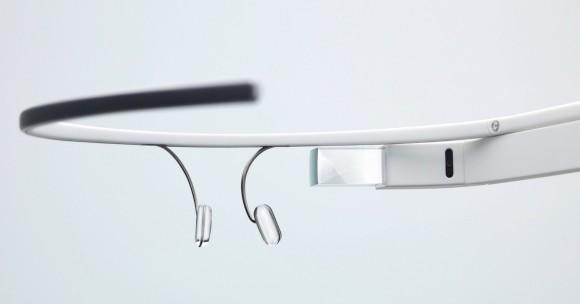

The eyepiece itself runs at 640 x 360 resolution and, when Glass is positioned on your face properly, floats discreetly just above your line of vision; on the inner edge of the L-shaped housing there's an infrared eye-tracking camera, while a bone conduction speaker sits further back along the arm. Glass is designed to get online either with its own WiFi connection, or by using Bluetooth to tether to your smartphone. Given that, it's pretty much platform agnostic about whatever device is used to get online: it doesn't matter if you have a Galaxy S III in your pocket, or an iPhone, or a BlackBerry Z10, as long as it can be used as a modem.

Where Glass departs significantly from the typical Android phone is in how applications and services run. In fact, right now no third-party applications run on Glass itself: the actual local software footprint is minimal. Instead, Glass is fully dependent on access to the cloud and the Mirror API the Glass team discussed briefly back in January.

In a sense, Glass has most in common with Google Now. Like that service on Android phones, Glass can pull in content from all manner of places, formatted into individual cards. Content from third-party developers will be small chunks of HTML, for instance, with Google's servers supporting the various services that Glass users can take advantage of.

[aquote]Glass has most in common with Google Now[/aquote]

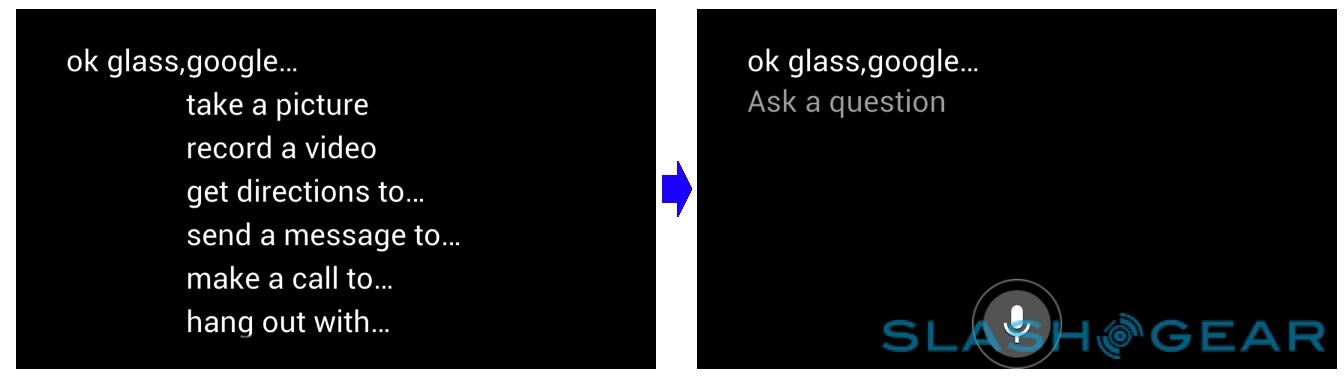

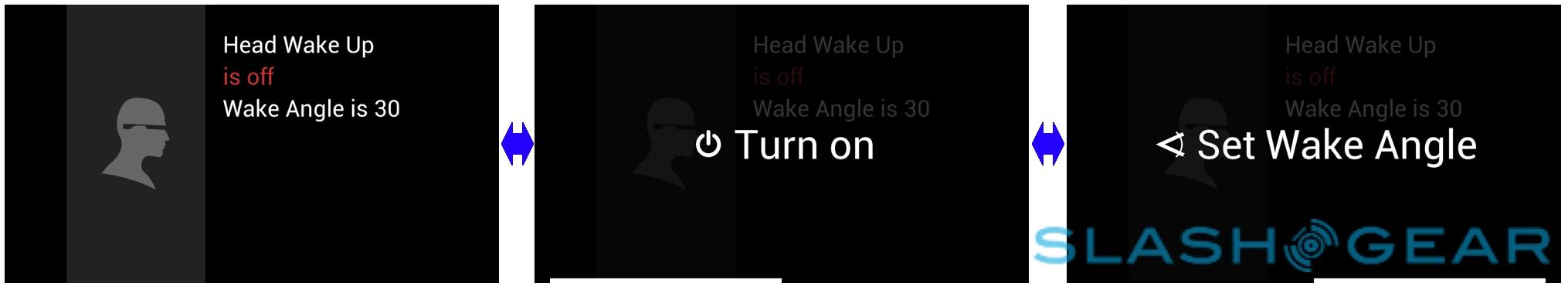

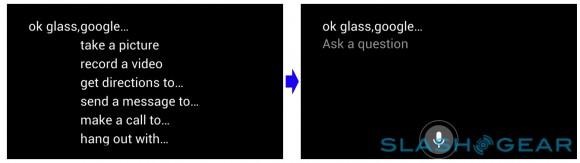

When you activate Glass – by tilting your head up, to trigger the (customisable) motion sensor, or tapping the side, and then saying "OK, Glass" – you see the first of those cards, with the current time front and center. Navigation from that point on is either by swiping a finger across the touchpad on the outer surface of the headset or by issuing spoken commands, such as "Google ...", "take a picture", "get directions to...", or "hang out with..." A regular swipe moves left or right through the UI, whereas a more determined movement "flings" you through several items at a time, like whizzing a mouse's scroll wheel. Tap to select is supported, and a downward swipe moves back up through the menu tree and, eventually, turns the screen off altogether. A two-finger swipe quickly switches between services.
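To make the difference between a swipe and a "fling" concrete, here is a minimal Python sketch of how a row of cards might respond to each gesture. It is an illustration only: the `Timeline` class, its method names, and the `FLING_STEP` value are hypothetical, since Google has not said how many cards a fling skips or how Glass implements navigation internally.

```python
from dataclasses import dataclass
from typing import List

# Assumption: the article only says a fling moves "several items at a time".
FLING_STEP = 3


@dataclass
class Timeline:
    """Hypothetical model of the side-scrolling row of cards."""
    cards: List[str]
    index: int = 0

    def _move(self, delta: int) -> str:
        # Clamp so navigation stops at either end of the row.
        last = len(self.cards) - 1
        self.index = max(0, min(last, self.index + delta))
        return self.cards[self.index]

    def swipe(self, direction: int) -> str:
        """A regular swipe: direction is +1 (forward) or -1 (back)."""
        return self._move(direction)

    def fling(self, direction: int) -> str:
        """A more determined movement that skips several cards at once."""
        return self._move(direction * FLING_STEP)


timeline = Timeline(["clock", "weather", "photo", "message", "directions"])
timeline.swipe(1)   # moves one card along, to "weather"
timeline.fling(1)   # jumps several cards, to "directions"
```

Clamping at the ends is a design choice in this sketch; the real interface could just as easily wrap around or bounce.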

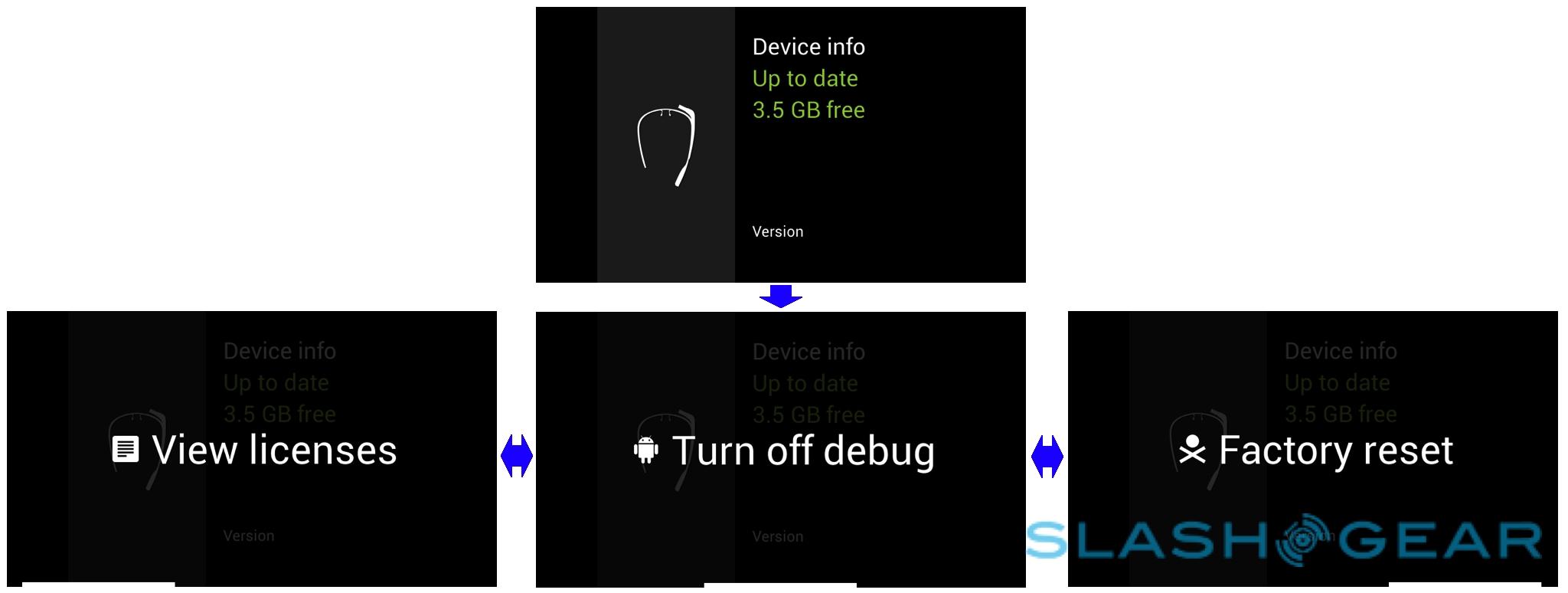

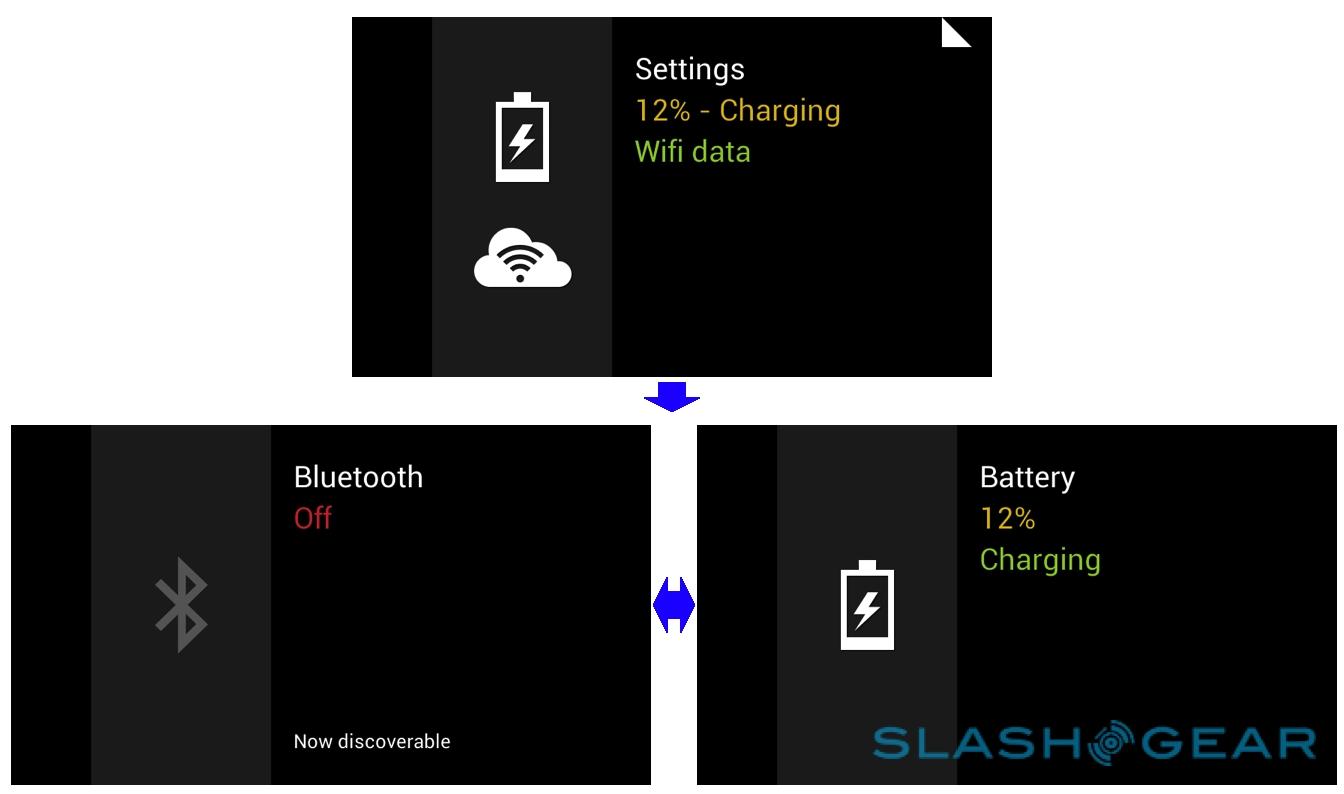

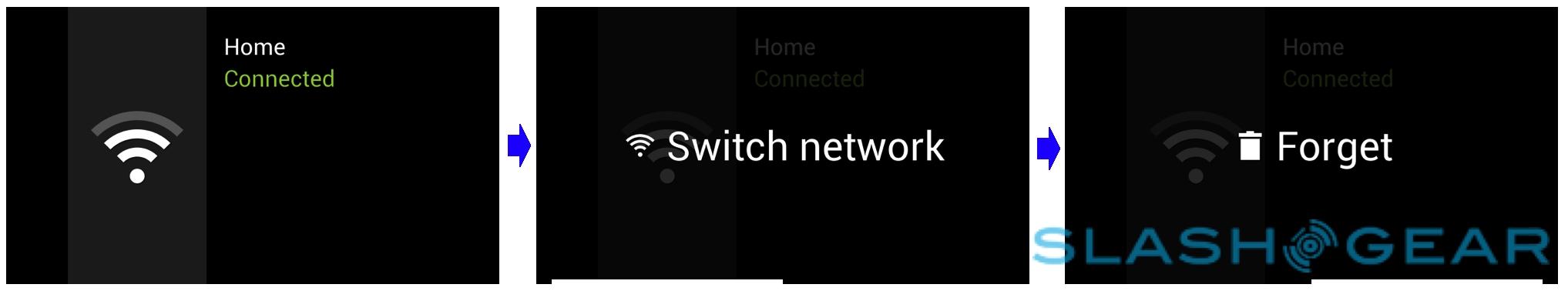

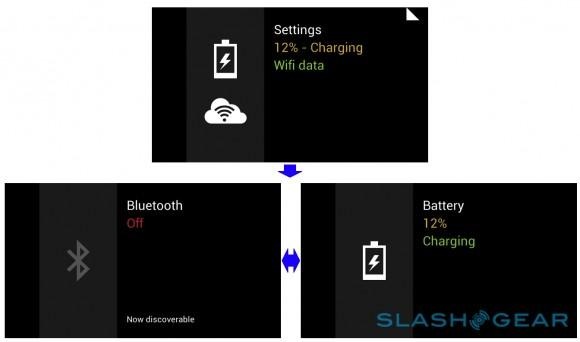

Some of the cards refer to local services or hardware, and a dog-ear folded corner indicates there are sub-cards you can navigate through. The most obvious use of this is in the Settings menu, which starts off with an indication of battery status and connectivity type, then allows you to dig down into menus to pair with, and forget, WiFi networks, toggle Bluetooth on or off, see battery percentage and charge status, view free storage capacity and firmware status (as well as reset the headset to factory settings), and manage the angle-controlled wake-up system.
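The nested menus can be pictured as a simple tree of cards. The Python sketch below is a rough model under stated assumptions, not Glass's actual software: the `Card` and `Navigator` names are hypothetical, and it assumes a tap opens a card's first sub-card. It shows the dog-ear as nothing more than "this card has children", with a downward swipe climbing back up and finally switching the screen off.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Card:
    """Hypothetical card node; sub-cards hang off it as children."""
    title: str
    children: List["Card"] = field(default_factory=list)

    @property
    def dog_eared(self) -> bool:
        # The folded corner signals that there are sub-cards to explore.
        return bool(self.children)


class Navigator:
    """Tracks the path from the top-level card down to the current one."""

    def __init__(self, root: Card) -> None:
        self.path = [root]
        self.screen_on = True

    @property
    def current(self) -> Card:
        return self.path[-1]

    def tap(self, child_index: int = 0) -> Card:
        # Assumption: tapping a dog-eared card opens one of its sub-cards.
        if self.current.dog_eared:
            self.path.append(self.current.children[child_index])
        return self.current

    def swipe_down(self) -> Card:
        # Move back up the tree; at the top, turn the screen off.
        if len(self.path) > 1:
            self.path.pop()
        else:
            self.screen_on = False
        return self.current


settings = Card("Settings", [
    Card("WiFi", [Card("Forget network")]),
    Card("Bluetooth"),
])
nav = Navigator(settings)
nav.tap()         # Settings -> WiFi
nav.swipe_down()  # back up to Settings
```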

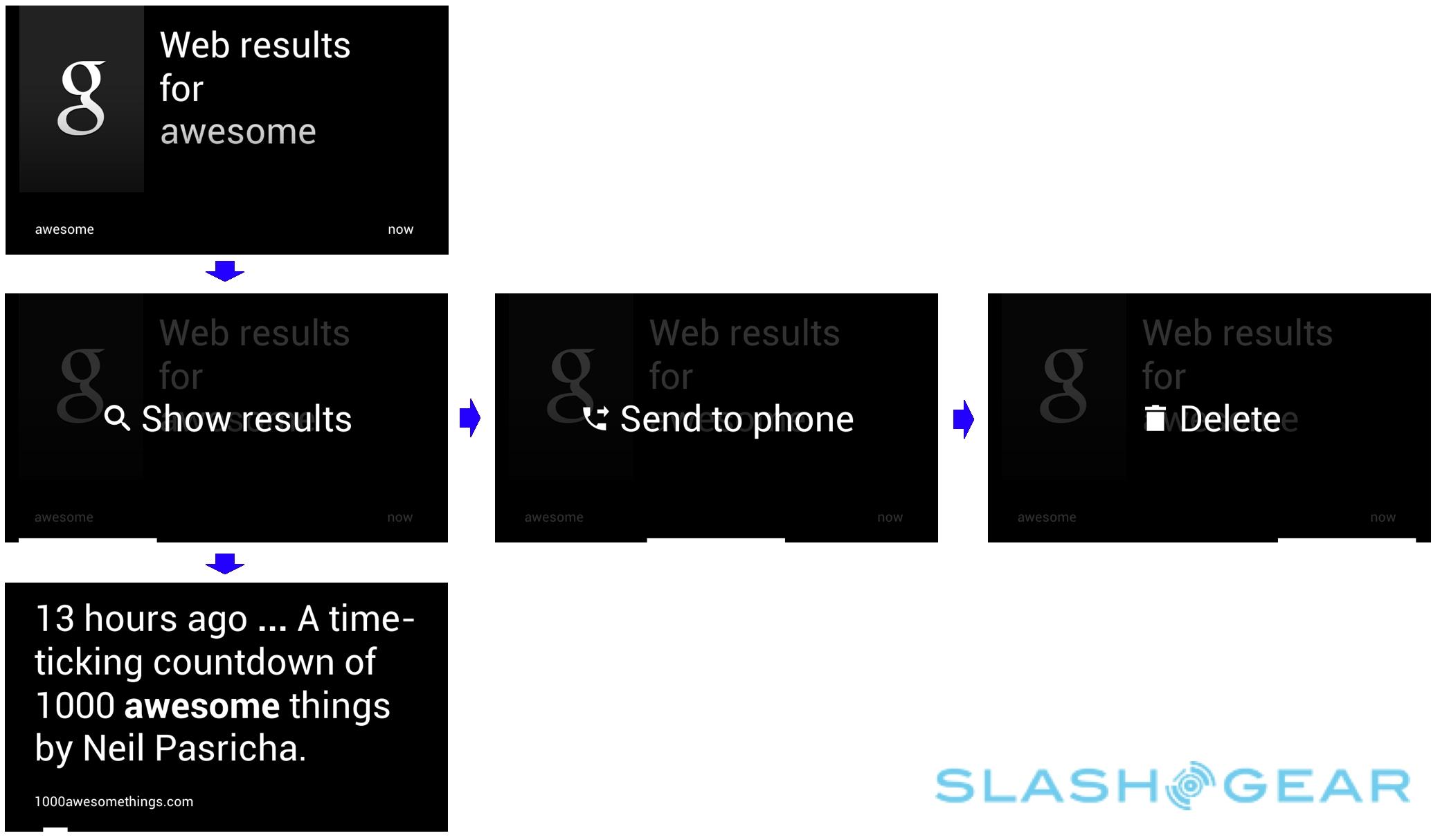

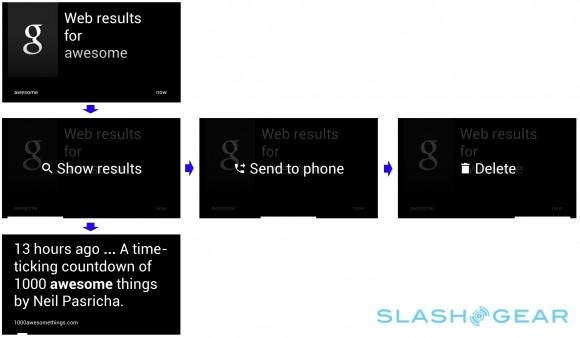

In effect, each card is an application. So, if you ask Glass to perform a Google search – using the same server-based voice recognition service as offered on Android phones – you get a side-scrolling gallery of results cards which can be navigated by side swiping on the touchpad. It's also possible to send one of those results to your phone, for navigating on a larger display.

For third-party developers, integrating with Glass is all about integrating with the Mirror API Google's servers rely upon. So, if you're Twitter, you'd use the API to push a card – say, to compose a new tweet, using voice recognition – to the Glass headset via the user's Google+ account, coded in HTML, with a limited set of functions available on each card to keep things straightforward (say, dictate and tweet). Twitter pushes to Google's servers, and Google pushes to Glass.
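The two-hop flow described here, from service to Google and from Google to Glass, can be sketched as a small in-memory simulation in Python. Everything in it is a stand-in: `GoogleRelay`, `link_service`, `push`, and the card fields are hypothetical names chosen for illustration and are not the Mirror API, whose real endpoints and payloads have not been published. The check that a service is linked to the account is likewise an assumption about how access would be gated.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class Card:
    """Hypothetical card payload: a small chunk of HTML plus allowed actions."""
    html: str
    actions: List[str]


class GoogleRelay:
    """Stand-in for Google's servers sitting between services and headsets."""

    def __init__(self) -> None:
        self._linked: Dict[str, Set[str]] = {}     # account -> linked services
        self._delivered: Dict[str, List[Card]] = {}  # account -> cards on headset

    def link_service(self, account: str, service: str) -> None:
        # Assumption: the user authorises a service against their account.
        self._linked.setdefault(account, set()).add(service)

    def push(self, service: str, account: str, card: Card) -> bool:
        # Hop 1: the service pushes to Google, which rejects unlinked services.
        if service not in self._linked.get(account, set()):
            return False
        # Hop 2: Google forwards the card to the user's headset.
        self._delivered.setdefault(account, []).append(card)
        return True

    def cards_for(self, account: str) -> List[Card]:
        return list(self._delivered.get(account, []))


relay = GoogleRelay()
relay.link_service("user@example.com", "twitter")
compose = Card(
    html="<article><p>Compose a new tweet</p></article>",
    actions=["dictate", "tweet"],
)
relay.push("twitter", "user@example.com", compose)
```

The point of the sketch is the shape of the pipeline: the service never talks to the headset directly, so whatever sits in the middle decides what gets through.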

[aquote]You could push a card to Glass from anything: a website, an iOS app[/aquote]

As a system, it's both highly flexible and strictly controlled. You could feasibly push a card to Glass from anything – a website, an iOS app, your DVR – and services like Facebook and Twitter could add Glass support without the user even realizing it. Glass owners will log in with their Google account – their Google+ profile is used for sharing photos and videos, triggering Hangouts, and for pulling in contacts – and then, once a Twitter account is paired to that Google profile, cards could start showing up on the headset. All service management will be done in a regular browser, not on Glass itself.

On the flip side, since Google is the conduit through which services talk to Glass, and vice versa, it's an all-controlling gatekeeper to functionality. One example of that is the sharing services – the cloud services that Glass hooks into – which will be vetted by Google. Since right now there's no other way of getting anything off Glass aside from using the share system – you can't initiate an action on a service in any other way – that's a pretty significant gateway. However, Google has no say in the content of regular cards themselves. The control also extends to battery life; while Google isn't talking runtime estimates for Glass yet, the fact that the heavy lifting is all done server-side means there's minimal toll on the wearable's own processor.

Google's outreach work with developers is predominantly focused on getting them up to speed with the Mirror API and the sharing system, we're told. And those developers should have ADB access, too, just as with any other Android device. Beyond that, it's not entirely clear how Google will manage the portfolio of sharing services: whether, for instance, there'll be an "app store" of sorts for them, or a more manual way of adding them to the roster of supported features.

What is clear is that Google isn't going into Glass half-heartedly. We've already heard that the plan is to get the consumer version on the market by the end of the year, a more ambitious timescale than the originally suggested "within twelve months" of the Explorer Edition shipping. When developer units will begin arriving hasn't been confirmed, though the new Glass website and the fresh round of preorders under the #ifihadglass campaign suggest it's close at hand.

Glass still faces the expected challenges of breaking past self-conscious users, the inevitable questions when sporting the wearable in public, and probably the limitations of battery life as well. There's also the legwork of bringing developers on board and getting them comfortable with the cloud-based system: essential if Glass is to be more than a mobile camera and Google terminal. All of those factors seem somehow ephemeral, however, in contrast to the potential the headset has for tying us more closely, more intuitively, to the online world and the resources it offers. Bring it on, Google: our faces are ready.