Google Glass Emotion Detection Makes Wearable Empath

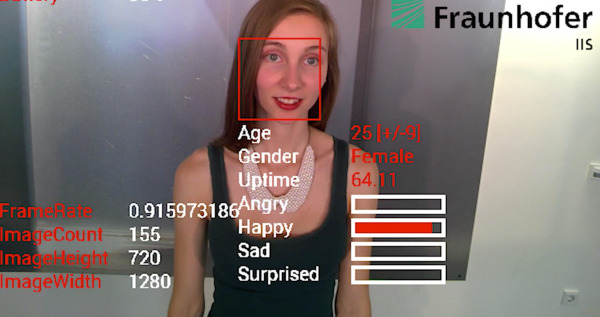

The idea of Glass doing face recognition may not sit well with Google, but what if the wearable could simply give you a little more insight into how the person you're talking to is feeling? Fraunhofer IIS has loaded its SHORE emotion, age, and gender detection system straight onto Google's headset, giving real-time feedback on those around you.

SHORE looks at the shape of the face, eyes, nose, and mouth, and from that estimates how old someone might be, their gender, and where they rank on scales of happy or sad, angry or surprised.
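To make that output a little more concrete, here is a minimal Python sketch of what a per-face result along those lines might look like. Everything in it is an assumption for illustration: the `FaceEstimate` class, its field names, the four separate emotion scores, and the 0 to 100 scale are hypothetical and are not SHORE's actual API or data format.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class FaceEstimate:
    """Hypothetical per-face result; NOT SHORE's real output format."""
    age_years: float            # estimated age
    gender: str                 # estimated gender label
    emotions: Dict[str, float]  # emotion label -> score (assumed 0-100 scale)

    def dominant_emotion(self) -> str:
        # Pick the emotion label with the highest score.
        return max(self.emotions, key=self.emotions.get)


def summarize(face: FaceEstimate) -> str:
    # Build a short overlay-style string of the kind a headset might display.
    top = face.dominant_emotion()
    return (f"{face.gender}, ~{round(face.age_years)} yrs, "
            f"mostly {top} ({face.emotions[top]:.0f}%)")


# Made-up example values, purely for illustration.
example = FaceEstimate(
    age_years=31.4,
    gender="female",
    emotions={"happy": 72.0, "sad": 5.0, "angry": 3.0, "surprised": 20.0},
)
print(summarize(example))
```

Note that nothing in this structure identifies who the person is: it holds only estimates, which is in keeping with a system that scores faces rather than recognizing them.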

When we tried SHORE a little over a year ago, the system was designed for fixed installations, like in a store window. In fact, Fraunhofer IIS told us that the technology was already deployed in the real world, performing tasks like assessing how well retailers' displays are going down with passers-by.

The Glass version is arguably more impressive, however. Loaded straight onto the wearable, rather than running in the cloud, it's entirely self-contained: there's no facial recognition, and no storage or sharing of faces, so it's anonymous too.

Multiple people can be detected within the same frame (the slight sluggishness in the research team's demo is caused by Glass' own bottlenecks when mirroring to a second screen).

Fraunhofer IIS suggests that the technology could be useful for those on the autism spectrum, who might otherwise struggle to read the emotional cues those around them are giving, or for the visually impaired.

Right now this is all a demo, and while the SHORE system can be licensed for use in projects, there's no Glass or smartphone app you can download.