As Self-Driving Car Safety Debate Rages, Google Teaches Cyclist Manners

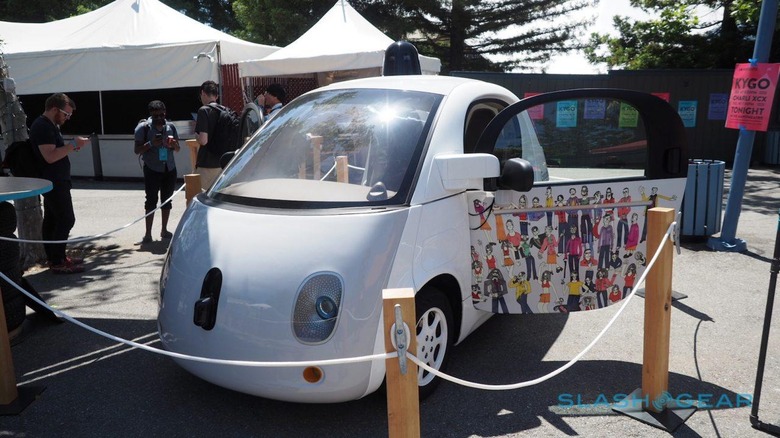

Self-driving car tech safety – or otherwise – may be making headlines, but Google's autonomous fleet has been focusing on smaller potential incidents, the company has explained in its latest monthly report. Every month, as part of its obligations in return for being allowed to test out its fleet of autonomous prototypes on public roads, Google details any crashes or damage sustained, and sure enough this month there've been a couple of scrapes.

Neither of the two accidents was the fault of Google's vehicles – crashes where the autonomous prototypes have themselves been at fault make up only a small minority of the total – with one car side-swiped after a human-driven vehicle unsafely changed lanes too close to it.

The other incident was a low-speed rear-end collision, with another car rolling forward at around 3 mph and hitting the back of the Google car while it was waiting in a turn lane.

Undoubtedly they're far less dramatic than the fatal crash that saw the driver of a Tesla EV killed in May after he and the Autopilot assistance system missed a tractor-trailer passing in front of the vehicle – though, to be fair, Tesla's Autopilot system is not billed as offering the same sort of autonomy as Google's, and there are far more Model S and Model X cars on the road actually using it.

What's interesting, though, is Google's explanation of how it's looking in the opposite direction on the size scale: trying to make its self-driving cars more considerate around cyclists.

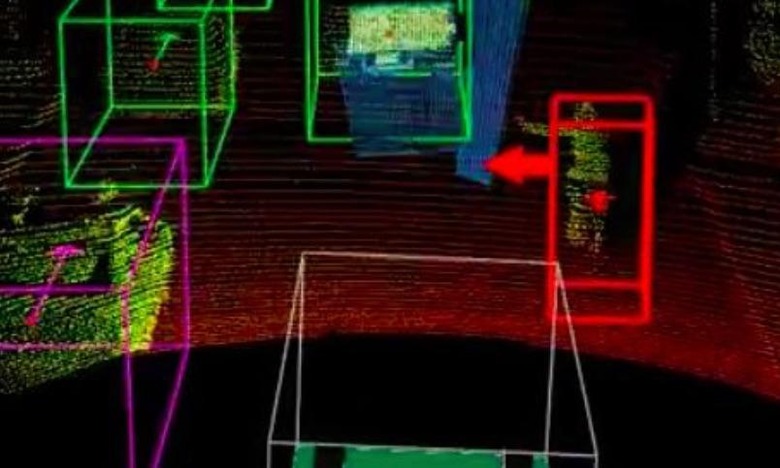

"Cyclists are fast and agile — sometimes moving as quickly as cars — but that also means that it's hard for others to anticipate their movements," Google writes. "Our cars recognize cyclists as unique users of the road, and are taught to drive conservatively around them."

That includes spotting and understanding hand signals for upcoming lane changes and turns, figuring out that cyclists may want to move further into the lane to avoid an open car door, and even dealing with behavior that may not fit with the rules of the road, such as cycling in the wrong direction.

It's unclear whether, had one of Google's cars been in the same situation as the Tesla involved in the May crash, the same outcome would have occurred. Tesla blamed the bright white of the truck against the bright afternoon sky for confusing the cameras Autopilot uses, with the Model S apparently concluding that the tractor-trailer was an overhead gantry beneath which it could safely drive.

The automaker also highlighted that Autopilot is intended as a driver assistance system, not something capable of true autonomous driving, and that the person behind the wheel is still fundamentally responsible for their own safety.

Investigators are still examining the data Tesla pulled from the car's "black box" after the crash, as well as digging into claims that the driver of the Model S was watching a movie on a portable DVD player when the accident took place.