Intel's Larrabee GPU Could Have Rivaled Nvidia, So Why Was It Discontinued?

At this point in 2025, if you're on the hunt for a graphics card that doesn't break the bank, Intel's Arc B580 shows up again and again on experts' recommendation lists. It's almost surreal to witness, especially in a domain absolutely dominated by Nvidia at the top end and AMD in the value-first GPU segment. Interestingly, Intel's GPU turnaround could have arrived all the way back in 2009-10 if the Larrabee project hadn't been cancelled.

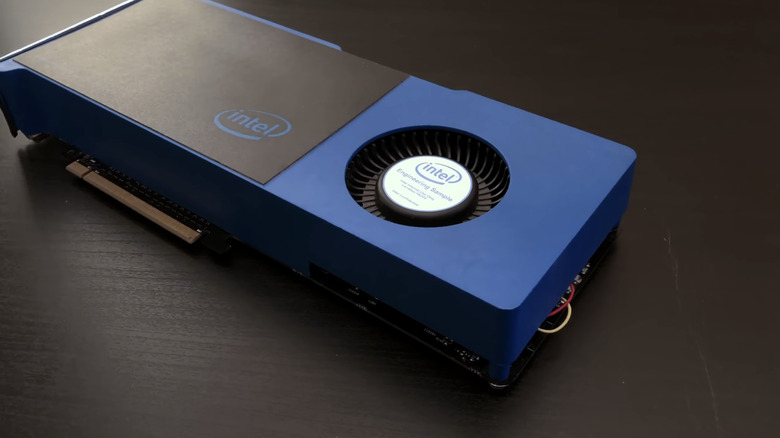

In 2008, Intel dropped the first official teaser for Larrabee, at a time when Nvidia and AMD had already established their dominance in the graphics industry. The hope was to develop a highly scalable graphics engine built atop the same fundamental design as CPUs, starting at an 8-core configuration and going all the way up to 48 cores, with almost linear scalability as one of its biggest benefits.

Sound familiar? Well, that's because Apple is doing something similar with its groundbreaking M-series silicon for Macs. The company's in-house UltraFusion tech allows it to link two baseline (M3 Max) processing units into a high-performance powerhouse (M3 Ultra) using thousands of high-speed connections.

So, why did Intel cancel such a promising project, especially at a time when x86 architecture was the undisputed champion in the game, from regular PC workloads to gaming? If you're familiar with Intel's history, you'll know the company has long been beset by delays and slow development. In Larrabee's case, silicon and software development were so far behind schedule that the product would have been uncompetitive by the time it reached the market.

What did Larrabee intend to achieve?

At its heart, Larrabee was a multithreaded, vectorized, multicore processor. Instead of approaching CPUs and GPUs as two fundamentally different breeds, Intel envisioned a hybrid approach that would build a graphics chip on the foundations of its Pentium processors. One of the big advantages of Larrabee was that it used general-purpose cores and made performance scalable, instead of relying on something hyper-specific, such as Nvidia's CUDA, shader, tensor, and ray-tracing cores.

Intel was essentially envisioning what would become the Accelerated Processing Unit (APU), a product that you can now find inside AMD-powered machines. AMD introduced the APU formula in 2011, right after the official demise of Intel's Larrabee ambitions. Intel never officially offered a deep dive into the technical limitations that doomed the Larrabee project, but a senior executive gave a hint at a panel discussion.

Thomas Piazza, an Intel Senior Fellow, explained that the company was trying to do too many things on the silicon, which made it impractical. "I just think it's impractical to try to do all the functions in software in view of all the software complexity. And we ran into a performance per watt issue trying to do these things," Piazza said at Intel Developer Forum 2009.

At the fundamental level, Intel struggled with balancing the raw programmability that could be achieved with the Larrabee platform and the fixed-function features, such as rendering and rasterization. Back then, Intel was trying an approach where a hardware component handled DirectX and OpenGL rasterization, alongside its own software-based rendering system.

What could have been

Intel's Larrabee ambitions weren't inherently flawed, but they ran into the limits of what software can achieve at tasks where purpose-built GPU cores excel. "Software rasterization will never match dedicated hardware peak performance and power efficiency for a given area of silicon," says a deep dive paper hosted by the School of Computer Science at Carnegie Mellon University.

Intel feels the sting to this day, it seems. "Well, you know, clearly, I mean, I had a project that was well known in the industry called Larrabee, which was trying to bridge the programmability of the CPU with the throughput-oriented architecture," former Intel chief Pat Gelsinger told a panel at Nvidia's GTC event earlier this year. "And I think had Intel stayed on that path, you know, the future could have been different at that point."

"When I was pushed out of Intel 13 years ago, they killed the project that would have changed the shape of AI. I had a project underway. It was called Larrabee to do high-throughput computing in the x86 architecture," Gelsinger later said at an MIT session, adding that Nvidia got extraordinarily lucky.

Bryan Catanzaro, currently the VP of Applied Deep Learning Research at Nvidia, dismissed the notion, however. "NVIDIA's dominance didn't come from luck. It came from vision and execution. Which Intel lacked," he said in a post on X. Catanzaro says he worked on Larrabee applications at Intel and eventually left to join the machine learning team at Nvidia.