Here's How Self-Driving Cars Stay In Their Lane, Know When To Brake, And More

Self-driving cars, or at least vehicles with self-driving capabilities, are increasingly making their way onto the roads. But for people who aren't tech-savvy, it can be difficult to understand how the technology behind them actually works. Is it cameras? LiDAR (Light Detection and Ranging) sensors? GPS? It might look like magic, but in most cases it's a combination of all of the above and more, with the data processed by none other than AI.

However, when we say self-driving cars, we don't mean Advanced Driver-Assistance Systems (ADAS). Merely having adaptive cruise control, lane keeping assist, blind spot detection, parking assistance, and the like doesn't make a car self-driving. On the Society of Automotive Engineers (SAE) scale, which runs from level zero to level five, such features amount to level two autonomy at most. Here, we are talking about level four, because no level five car has been approved and produced.

A level four car can drive itself without human input, but only within a defined operating area and set of conditions; outside of those, a human may still need to take over, or the car won't operate. There's no level four car available for consumers to buy right now, but several companies, such as Waymo and Cruise, are actively testing and developing them in restricted areas.
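For readers who think in code, the SAE scale described above can be written down as a simple enumeration. This is a minimal Python sketch: the level names loosely follow SAE's descriptive labels, while the `SAELevel` class and the `counts_as_self_driving` helper are hypothetical, and the helper only encodes this article's working definition (level four and up), not a rule SAE itself defines.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """SAE driving-automation levels, from 0 (none) to 5 (full)."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2      # e.g. adaptive cruise plus lane keeping together
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4         # self-driving within a limited operating area
    FULL_AUTOMATION = 5         # self-driving everywhere; not yet produced


def counts_as_self_driving(level: SAELevel) -> bool:
    """This article's working definition (level four and up), not an SAE rule."""
    return level >= SAELevel.HIGH_AUTOMATION


print(counts_as_self_driving(SAELevel.PARTIAL_AUTOMATION))  # False
print(counts_as_self_driving(SAELevel.HIGH_AUTOMATION))     # True
```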

So, if you hop into a Waymo vehicle, the question remains: "How does it know when to stop, go, and stay in its lane?" The answer to that is a bit of magical science, but it's science nonetheless.

Sensor technologies and data fusion

Self-driving cars "see" through a suite of components, typically comprising LiDAR, radar, cameras, and ultrasonic sensors. They all work together and fuse their data to provide a comprehensive view of the vehicle's surroundings.
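Production systems commonly fuse sensor data with techniques such as Kalman filtering or learned models, and the details vary by company. As a much simpler illustration of the core idea, the Python sketch below uses inverse-variance weighting: two independent, unbiased distance readings of the same object are averaged, with the more precise sensor given more weight. The `RangeEstimate` and `fuse` names and the noise figures are hypothetical, chosen only for illustration, and are not specifications of any real radar or LiDAR.

```python
from dataclasses import dataclass


@dataclass
class RangeEstimate:
    sensor: str
    distance_m: float   # measured distance to the object, in metres
    variance_m2: float  # measurement noise variance, in square metres


def fuse(estimates: list[RangeEstimate]) -> tuple[float, float]:
    """Inverse-variance weighted average of independent range estimates.

    Returns (fused_distance_m, fused_variance_m2). Each estimate is
    weighted by 1 / variance, so lower-noise sensors dominate.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.variance_m2 for e in estimates]
    total = sum(weights)
    fused = sum(w * e.distance_m for w, e in zip(weights, estimates)) / total
    return fused, 1.0 / total


# Illustrative numbers only: in this example radar is noisier than LiDAR.
readings = [
    RangeEstimate("radar", distance_m=25.4, variance_m2=0.25),
    RangeEstimate("lidar", distance_m=25.0, variance_m2=0.01),
]
distance, variance = fuse(readings)
print(f"{distance:.2f} m (variance {variance:.4f} m^2)")  # 25.02 m (variance 0.0096 m^2)
```

The fused variance comes out smaller than either sensor's on its own, which is the whole point of combining them.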

Here's a breakdown of what each of these sensors does:

- Radar: Radar sensors emit radio waves and analyze the echoes that return after the waves bounce off objects in their path. The echo's round-trip time gives the distance to an obstacle, the shift in its frequency (the Doppler effect) gives the obstacle's relative velocity, and comparing the echo across several antennas gives its angle. What makes radar especially important is its reliability in poor weather such as fog, rain, or snow.

- LiDAR: Instead of radio waves, LiDAR emits pulses of laser light and measures how long each reflection takes to return. Working on the same principle as radar, it offers much higher resolution and precision, which lets the autonomous car see small or narrow objects that radar might struggle with. LiDAR units are typically mounted on the roof of the car.

- Ultrasonic sensors: In addition to radio waves and light, autonomous vehicles (AVs) also use sound to sense obstacles. An emitter releases high-frequency sound pulses, above the range of human hearing, and the sensor calculates the distance to an obstacle from how long the echo takes to return.

- Cameras: LiDAR and radar may be able to map objects in a 3D field, but they can't read signs or see lane markings. That's where cameras come in. They are positioned all around the car to provide a 360-degree view that can spot obstacles and supply visual data, such as a stop sign by the side of the road.
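Radar, LiDAR, and ultrasonic sensors all rely on the same echo arithmetic: distance equals wave speed times round-trip time, divided by two because the pulse travels out and back. Only the wave speed differs: the speed of light for radar and LiDAR, and the much slower speed of sound for ultrasonic sensors. The Python sketch below also shows how a radar can turn a Doppler frequency shift into relative speed, and how a single LiDAR return (a distance plus two beam angles) becomes a 3D point. The function names, the 77 GHz carrier, and the sample timings are illustrative assumptions; real sensors do far more signal processing, and many automotive radars use continuous-wave modulation rather than simple pulses.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # radio waves and laser light
SPEED_OF_SOUND_M_S = 343.0          # sound in dry air at about 20 °C


def echo_distance(round_trip_s: float, wave_speed_m_s: float) -> float:
    """Distance to a reflecting object from an echo's round-trip time.

    The pulse travels out and back, so the one-way distance is half
    of speed times time.
    """
    return wave_speed_m_s * round_trip_s / 2.0


def doppler_radial_speed(freq_shift_hz: float, carrier_hz: float) -> float:
    """Closing speed of a target from the Doppler shift of a radar echo.

    A reflected wave is shifted by f_d = 2 * v * f_c / c, so
    v = f_d * c / (2 * f_c). A positive shift means the target is approaching.
    """
    return freq_shift_hz * SPEED_OF_LIGHT_M_S / (2.0 * carrier_hz)


def lidar_point(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one LiDAR return (distance plus beam angles) to x, y, z in metres.

    x points forward, y to the left, z up; azimuth is measured from x toward y.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the ground plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


# Radar or LiDAR echo returning after 200 nanoseconds.
print(f"{echo_distance(200e-9, SPEED_OF_LIGHT_M_S):.2f} m")  # 29.98 m
# Ultrasonic echo returning after 6 milliseconds.
print(f"{echo_distance(6e-3, SPEED_OF_SOUND_M_S):.3f} m")    # 1.029 m
# 10 kHz Doppler shift on an assumed 77 GHz radar carrier.
print(f"{doppler_radial_speed(10_000.0, 77e9):.2f} m/s")     # 19.47 m/s
# LiDAR return 20 m away, 30 degrees to the left, level with the sensor.
print(tuple(round(v, 2) for v in lidar_point(20.0, 30.0, 0.0)))  # (17.32, 10.0, 0.0)
```

Because sound is nearly a million times slower than light, an ultrasonic echo from about a metre away takes milliseconds to return, while a radar or LiDAR echo from 30 metres takes only nanoseconds.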

AI, machine learning, and decision making

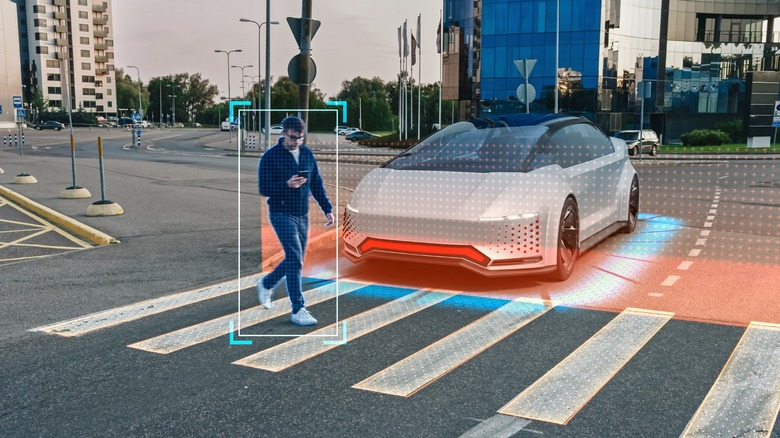

Collecting data from the sensors and cameras all over the AV is not enough, on its own, to explain how it stays in its lane and knows to avoid an obstacle. Something has to process all that data and help the autonomous car make decisions much as a human driver would. This is where machine learning and artificial intelligence (AI) enter the mix.

AVs carry advanced onboard computers, powered by AI-oriented processors running neural networks, that combine all the sensor data, detect the objects around the vehicle, and sort out which of them matter. These AI models are trained on large datasets and are refined as data from more journeys is collected and fed back into training. With that, they can calculate angles for turns, choose optimal driving paths, and make predictions based on the information they have.
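Neural networks handle much of the perception work, but "calculating angles for turns" and "making predictions" can be illustrated with two classic, non-learned rules. The Python sketch below shows pure pursuit, a well-known geometric steering method that turns the wheels along an arc through a target point a short distance ahead on the lane, and a time-to-collision check that predicts, assuming both cars hold their current speeds, when the gap ahead would close. These are stand-ins chosen for clarity, not a description of how Waymo's or any other company's software works; the function names, the 2.8 m wheelbase, and the 2-second braking threshold are hypothetical.

```python
import math


def pure_pursuit_steering(target_x_m: float, target_y_m: float, wheelbase_m: float) -> float:
    """Steering angle in radians that arcs the car through a lookahead point.

    The point (for example, on the lane centre line a few metres ahead) is
    given in the car's own frame: x forward, y to the left. Pure pursuit on a
    bicycle model uses delta = atan(2 * L * sin(alpha) / l_d), where alpha is
    the angle to the point and l_d its distance. Positive means steer left.
    """
    lookahead_m = math.hypot(target_x_m, target_y_m)
    alpha = math.atan2(target_y_m, target_x_m)
    return math.atan(2.0 * wheelbase_m * math.sin(alpha) / lookahead_m)


def time_to_collision(gap_m: float, own_speed_m_s: float, lead_speed_m_s: float) -> float:
    """Seconds until contact with the car ahead if both keep their current speeds."""
    closing_speed = own_speed_m_s - lead_speed_m_s
    if closing_speed <= 0:
        return math.inf  # not closing in, so no collision is predicted
    return gap_m / closing_speed


def should_brake(gap_m: float, own_speed_m_s: float, lead_speed_m_s: float,
                 threshold_s: float = 2.0) -> bool:
    """Brake when the predicted time to collision falls below a threshold.

    The 2-second default is an illustrative value, not an industry standard.
    """
    return time_to_collision(gap_m, own_speed_m_s, lead_speed_m_s) < threshold_s


# Lane centre point 10 m ahead and 1 m to the left; hypothetical 2.8 m wheelbase.
angle = pure_pursuit_steering(10.0, 1.0, wheelbase_m=2.8)
print(f"steer {math.degrees(angle):.1f} degrees left")  # steer 3.2 degrees left

# Travelling at 25 m/s, 24 m behind a car doing 10 m/s: contact in 1.6 s.
print(should_brake(24.0, own_speed_m_s=25.0, lead_speed_m_s=10.0))  # True
```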

However, this is also where the limits of AVs appear. Because machine learning models must be exposed to and trained on millions of scenarios to learn what to do in each one, new and unpredictable situations can be problematic. It's very hard to replace human intuition, such as when you can tell from body language, instinct, or even eye contact what a pedestrian or nearby driver is about to do. Until this problem is solved, level five autonomy remains out of reach.

Navigation and connectivity

It's not just fancy radar and LiDAR helping AVs figure out what to do. Older, more familiar technology is at play too: GPS, cellular connectivity, and the Internet of Things (IoT). GPS, in particular, is at the core of the self-driving car, and you already rely on a simpler version of the same kind of navigation in services like Google Maps.

Navigation services can give you a general idea of how AVs use GPS. They pinpoint your approximate location from satellite signals, but they also download more detailed, frequently updated maps and traffic information from the service provider's cloud, sourced from other users on the road or from third-party providers. The high-definition maps that AVs rely on go further, with details such as road networks, lane configurations, traffic signals, and road signs.
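A GPS receiver works out where it is by trilateration: it estimates its distance to several satellites from how long their signals take to arrive, then finds the one point consistent with all of those distances. The Python sketch below is a deliberately simplified flat, two-dimensional version with three reference points and exact distances; real receivers solve in three dimensions and need at least four satellites, because the receiver's clock error is an extra unknown. The function name and the coordinates are made up for illustration.

```python
import math


def trilaterate_2d(anchors, distances):
    """Find (x, y) from three known reference points and the distance to each.

    Subtracting the first circle equation (x - xi)^2 + (y - yi)^2 = ri^2 from
    the other two cancels the squared terms, leaving two linear equations
    a*x + b*y = c that are solved here with Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = anchors
    r1, r2, r3 = distances
    a1, b1 = 2 * (x2 - x1), 2 * (y2 - y1)
    c1 = r1**2 - r2**2 - x1**2 + x2**2 - y1**2 + y2**2
    a2, b2 = 2 * (x3 - x1), 2 * (y3 - y1)
    c2 = r1**2 - r3**2 - x1**2 + x3**2 - y1**2 + y3**2
    det = a1 * b2 - a2 * b1
    if abs(det) < 1e-12:
        raise ValueError("reference points are collinear; position is ambiguous")
    return (c1 * b2 - c2 * b1) / det, (a1 * c2 - a2 * c1) / det


# Made-up reference points (metres) and a receiver actually sitting at (30, 40).
anchors = [(0.0, 0.0), (100.0, 0.0), (0.0, 100.0)]
true_position = (30.0, 40.0)
distances = [math.dist(p, true_position) for p in anchors]
x, y = trilaterate_2d(anchors, distances)
print(f"estimated position: ({x:.1f}, {y:.1f})")  # estimated position: (30.0, 40.0)
```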

Although the technology is still in its infancy, autonomous vehicles are being developed to communicate with other objects in their environment, an idea often described as part of the Internet of Things. In these cases, the vehicle can connect with other vehicles (vehicle-to-vehicle, or V2V) and with roadside infrastructure (vehicle-to-infrastructure, or V2I) to share information and decide what to do.
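To make V2V and V2I messaging more concrete, here is a hypothetical Python sketch of a vehicle broadcasting a hazard warning and a nearby car deciding whether the warning concerns it. Real deployments use standardized message sets, such as the Basic Safety Message defined in SAE J2735, sent over dedicated radio links; the `HazardMessage` format, its JSON encoding, the shared map frame, and the 200 m relevance radius here are all invented for illustration.

```python
import json
import math
from dataclasses import dataclass, asdict


@dataclass
class HazardMessage:
    """Hypothetical, simplified V2V/V2I broadcast (not a real standard's format)."""
    sender_id: str
    x_m: float   # sender position in an assumed shared local map frame, metres
    y_m: float
    hazard: str  # e.g. "hard_braking", "ice", "red_light"

    def encode(self) -> bytes:
        """Serialize to bytes for transmission (JSON, purely for readability)."""
        return json.dumps(asdict(self)).encode("utf-8")

    @staticmethod
    def decode(payload: bytes) -> "HazardMessage":
        """Rebuild a message from received bytes."""
        return HazardMessage(**json.loads(payload.decode("utf-8")))


def is_relevant(msg: HazardMessage, my_x_m: float, my_y_m: float,
                radius_m: float = 200.0) -> bool:
    """React only to hazards reported within radius_m of our own position."""
    return math.hypot(msg.x_m - my_x_m, msg.y_m - my_y_m) <= radius_m


# A car ahead brakes hard and broadcasts it; a following car receives the bytes.
wire = HazardMessage("car-42", x_m=150.0, y_m=3.5, hazard="hard_braking").encode()
received = HazardMessage.decode(wire)
if is_relevant(received, my_x_m=0.0, my_y_m=0.0):
    print(f"{received.sender_id} reports {received.hazard}: prepare to slow down")
```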

As more vehicles and infrastructure join the IoT network, autonomous cars will find it much easier to communicate with the things around them and make better transportation and safety decisions.