We Tested This Popular AI Detection Tool And Here's What We Found

AI has taken the world by storm, and many individuals and industries are struggling to catch up. Now, more than ever, it's imperative to know whether something was made by a computer or by a human. Being able to tell the difference could be the key to many of the questions about plagiarism, copyright, and "inspiration" that AI raises.

Some people have come up with a solution: AI detectors. But how accurate are they? How different can AI-generated art be from a human's? With the advancement of Stable Diffusion, GPT models, and other generative models, the line keeps getting blurrier.

Still, the public needs a solution and has turned to these AI detectors. While many of the best-known detectors, often called ChatGPT detectors, focus on text, AI or Not currently targets images and audio. It appears to work by weighing the odds and telling you whether an upload is likely human-made or AI-generated, using machine learning and its own AI comparison method to decide which of the two categories it belongs to.

Since it's one of the most popular tools of its kind, we tested it against multiple image and voice samples to see just how much you should trust it. As you might have guessed, it excels in some areas and, well, not so much in others. For these tests, we used content from DALL-E, MidJourney, and YouTube, as well as our own original photos and audio.

Photos and images of human beings

AI-generated human beings are often relatively easy to spot, even for us humans. Many come with extra fingers, teeth, or limbs, or simply give off a subtle "uncanny valley" effect. Looking for these flaws is one way to tell whether an image was AI-generated.

AI or Not does a good job of spotting DALL-E's images of people, but that's because DALL-E isn't especially good at generating humans. Most of its human images have weird faces, mismatched eyes, smudged edges, and a 3D-animation look.

However, when we moved on to MidJourney, AI or Not struggled with lower-resolution camera filters and MidJourney's more realistic rendering of people. It's still worth noting that it correctly identified most of the MidJourney images of people we uploaded. As a side note, AI or Not couldn't tell when a photo taken by a human had been edited with AI.

PetaPixel ran similar tests on AI or Not in June 2023 and managed to confuse it. In one, an AI-generated photo of Tom Hardy as James Bond left the detector saying it wasn't sure. We were unable to replicate that result, even with the same image, so it seems the team behind AI or Not has worked on it since then.

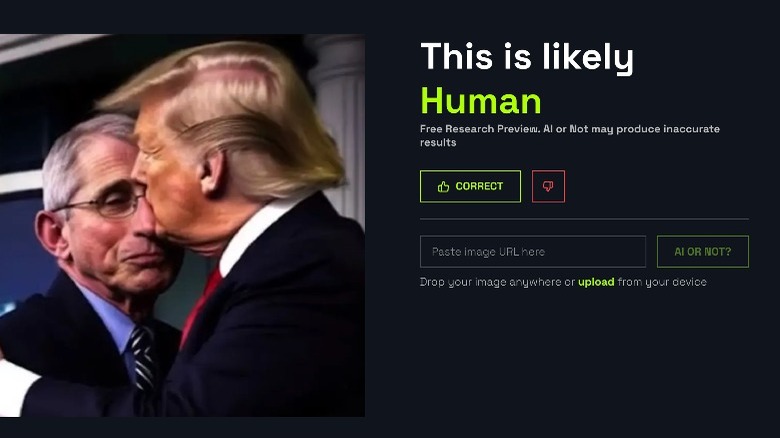

Interestingly, it still can't tell that the Trump and Fauci image that made the rounds online as a notorious fake isn't real. It gave us a low-resolution warning but still defaulted to calling it human-made. Maybe it's now designed to give a conclusive answer instead of saying it's not sure.

Digital illustrations and paintings

Digital illustrations are where you really see the cracks in the armor. Using DALL-E, we generated a watercolor-style children's book illustration of a teddy bear watching TV, and AI or Not couldn't tell that it was AI-generated.

It's hard to say what logic AI or Not uses to reach its conclusions, because when we generated another watercolor-style children's book illustration, this time of a rabbit and a tiger, it confidently labeled it AI-generated. So, what's the difference? In this category, it seems to be largely guesswork.

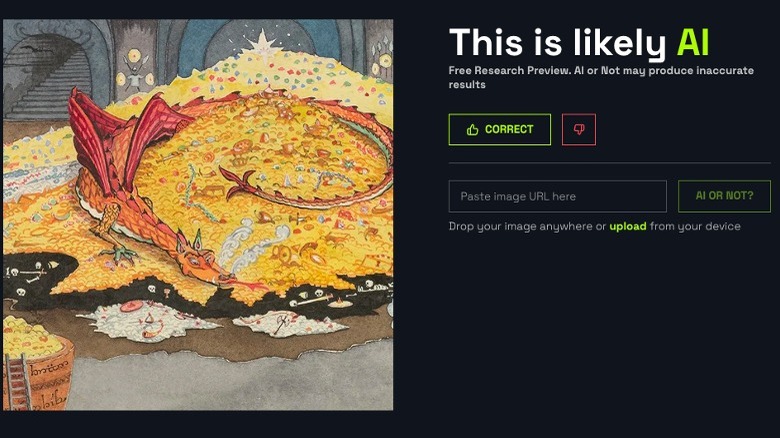

Do you know that popular Hobbit painting of Smaug, the one titled Conversation with Smaug that J.R.R. Tolkien drew himself? Well, AI or Not thinks AI made it, perhaps because of its rougher edges and whimsical placement of subjects. The bottom line is that it was wrong half the time in these tests, so it can't be relied on with any degree of certainty to judge works in this category.

Audio and voice detection

This is the one category where AI or Not scored perfectly. That could be because AI voice technology is still a little rough around the edges: most of the time, you can probably tell a voice is fake because it sounds slightly robotic. Still, the technology is improving, and AI or Not seems to have a good grasp of what a human voice should sound like.

We first recorded someone talking and uploaded the clip. It got that one right, of course. Here's the fun part: we made another recording of a person trying to sound robotic, and it still identified the voice as human. That's quite impressive, because the robotic impression was pretty convincing.

Next, we tried some AI-generated music from YouTube, along with AI-generated speeches in the voices of Morgan Freeman and Trump. It correctly identified those as AI as well. We also ran some YouTube Shorts of TikTokers doing NPC livestreams, and it recognized them all as human.