3 Best ChatGPT Detectors (And Why You Still Can't Trust Them)

With the overnight popularity of generative AI tools, educators and editors have a new problem on their hands: how to tell bot-generated content from the product of human thought. Plagiarism and intellectual dishonesty are serious issues in academic institutions and workplaces, and generative AI makes it all too easy for students and professionals to indulge in both.

But modern problems get modern solutions. The webscape is just as quickly getting flooded with AI-content detectors — tools that promise to help differentiate between machine-generated and human-created content, usually by looking for patterns in word choice and sentence structure typical of AI writing tools. These tools are still grossly unreliable, and there are a few reasons why it's impractical to expect accuracy from them soon, but we'll get into those later.

Still, a handful of ChatGPT detectors seem to stand out from the others in their approach and results, and they might prove useful if you work in a capacity where you need to vet content originality. Here are three of them.

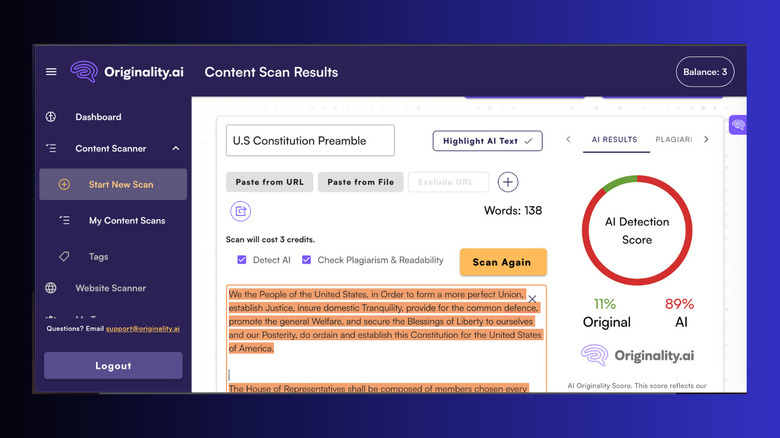

Originality.ai

Originality.ai is a premium tool that offers pay-as-you-go and monthly plans. It bundles plagiarism and readability checks into the service and comes with a nifty Chrome extension. After each scan, the tool provides an overall percentage score indicating how much of the text was likely generated by AI. It also highlights specific sections of the text with a verdict on whether each was written by AI.

The tool delivered mostly accurate checks on the texts we scanned — even correctly identifying a human-created piece that had been spun through a paraphraser. However, when we ran the lyrics of "The Star-Spangled Banner" through the machine, the report said there was only a 10% chance that it was written by a human. It had a different verdict on a second test, ruling that the material was most likely 100% the work of a human. It was also 89% confident that the U.S. Constitution preamble was entirely AI-generated.

These results inspire very little confidence, which is ironic considering Originality.ai boasts 94% accuracy at detecting AI-generated content. What makes it even harder to trust is the tool's inability to use context. Instead of shooting blind, one would expect the machine to recognize texts as famous as the Constitution preamble and the national anthem, match them against records online, and correctly identify their origin, especially since we clearly spelled out in the title box what each was.

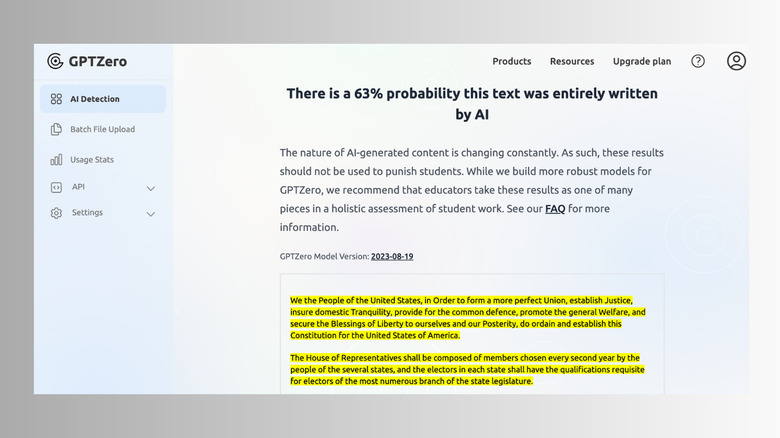

GPTZero

GPTZero is a free tool with a simple interface. It pretty much does the one task it sets out to do: there are no complementary features like plagiarism checkers or readability scoring. You can scan up to 5,000 characters at a time, or scan text of unlimited length and get "premium" AI detection if you upgrade to its Enterprise plan at $50 per month. You can also scan files in batches with the tool's Batch Upload feature, which makes it useful for classrooms and other academic use cases. Results come as a percentage predicting the degree to which AI was likely involved in creating the text.

It analyzes text based on burstiness and perplexity, two criteria used to spot generative AI patterns in word choice and sentence structure, and it ranks how the content performs on both counts.
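To make those two metrics more concrete, here is a minimal Python sketch of the general idea. It is not GPTZero's actual method, which isn't public in any detail we can vouch for. We assume a toy unigram word model with add-one smoothing in place of a real language model, treat perplexity as how "surprising" the words are to that model, and treat burstiness as how much that surprise varies from sentence to sentence. The names `UnigramModel`, `perplexity`, and `burstiness` are our own, made up for illustration.

```python
import math
import re
from collections import Counter
from statistics import pstdev


def tokenize(text):
    """Lowercase the text and pull out simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def split_sentences(text):
    """Naive sentence splitter: break after ., ! or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


class UnigramModel:
    """Toy stand-in for a language model (an assumption, not a real detector)."""

    def __init__(self, reference_text):
        tokens = tokenize(reference_text)
        self.counts = Counter(tokens)
        self.total = len(tokens)
        # One extra vocabulary slot reserved for words never seen in the reference.
        self.vocab_size = len(self.counts) + 1

    def prob(self, word):
        # Add-one smoothing, so unseen words get a small nonzero probability.
        return (self.counts[word] + 1) / (self.total + self.vocab_size)

    def perplexity(self, text):
        """exp of the average negative log-probability per word."""
        tokens = tokenize(text)
        if not tokens:
            return float("nan")
        log_sum = sum(math.log(self.prob(t)) for t in tokens)
        return math.exp(-log_sum / len(tokens))


def burstiness(model, text):
    """Spread (population std. dev.) of per-sentence perplexity."""
    scores = [model.perplexity(s) for s in split_sentences(text)]
    scores = [s for s in scores if not math.isnan(s)]
    return pstdev(scores) if len(scores) > 1 else 0.0


if __name__ == "__main__":
    model = UnigramModel("the cat sat on the mat. the dog sat on the rug.")
    sample = "the cat sat. quantum zebra flux."
    print("perplexity:", model.perplexity(sample))
    print("burstiness:", burstiness(model, sample))
```

In this sketch, text made of words the model already expects scores a low perplexity, and text whose sentences all look alike to the model scores a low burstiness. Detectors built on this kind of idea tend to read low values on both as a sign of machine writing, which hints at why plain, predictable human prose can get flagged.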

As far as accuracy goes, GPTZero correctly distinguished human-created from AI-generated content on several scans, but it also ruled that the content of the U.S. Constitution might have been created with some AI involvement, so it's just as far from perfect as Originality.ai.

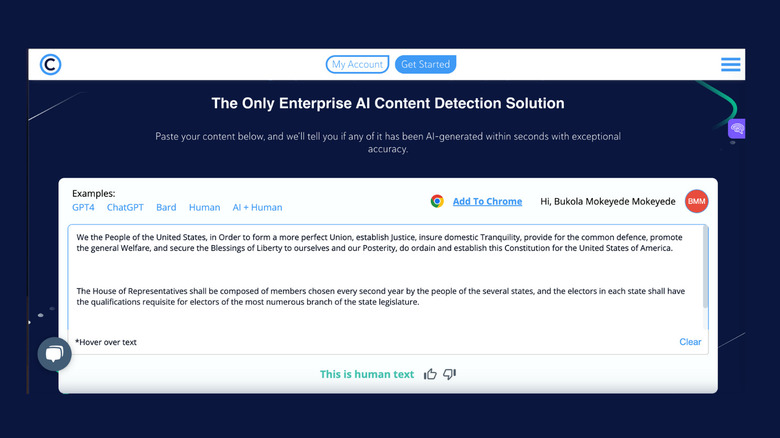

Copyleaks

Copyleaks provides its AI detection tool as part of an enterprise service that includes a plagiarism checker and offers a Chrome extension as well. The interface is pretty straightforward — you paste your text, and the tool scans and delivers an overall verdict. It doesn't use percentage scoring in the final results like the other tools on this list, but if you hover over specific portions of the text, it'll give you a number for the chance of AI involvement.

The tool judged three out of five scans correctly, but it tended to rule in favor of human origin, even when the text was awkwardly phrased in a pattern common to AI. We pasted in a ChatGPT response including the following sentence, "intriguingly, inflection and cadence lend an auditory dimension to the written word, analogous to the crescendo and decrescendo of a vocal maestro," and Copyleaks judged that to have come from a human mind.

Why you still can't trust ChatGPT detectors

The biggest issue with AI detector tools is that their algorithms are still far from human-level intelligence, and human communication is too nuanced and complex to be judged by a single pattern. Burstiness and perplexity are grossly limited and unreliable metrics to judge any text by, because writing style and tone vary with many factors. For example, writers who aren't native English speakers might not use the most sophisticated grammar or sentence structures, so detectors can (and do) flag their writing as AI-generated, as Stanford research found.

It's also important to consider that, with the right prompts, ChatGPT can be easily gamed to produce more sophisticated, human-like answers that will throw off AI detectors and produce false negatives. The tools we tested also seemed to stumble on human text written in a formal or academic style, even though there are legitimate contexts that call for exactly that kind of writing.

As it stands, these tools might be useful as part of a holistic approach to determining the origin of content, but they're still too imperfect to be used as the final arbiter of what is or isn't AI-generated. They could flag the work of innocent students or writers as AI cheating, as in a case reported by The Washington Post involving Turnitin's AI detection tool, and that could be pretty damaging to reputation or morale. You'd have more luck with detecting AI-generated images than text.