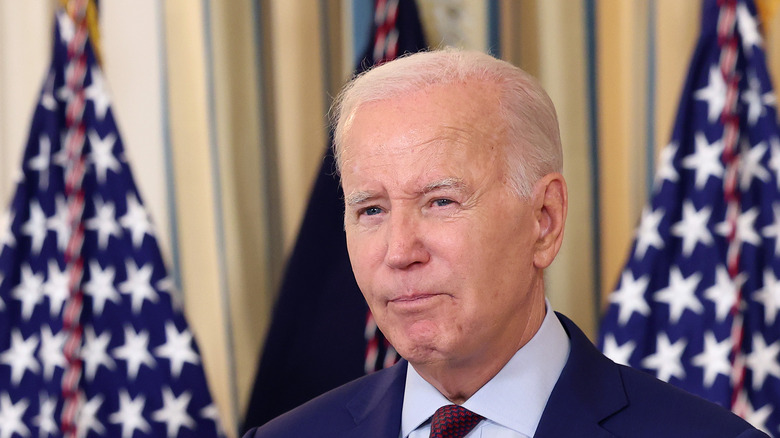

Google, OpenAI And Others Just Made A Huge AI Safety Promise To The White House

The world's leading AI labs have accepted wide-ranging resolutions towards responsible AI development and deployment at a White House briefing. Among them is a landmark agreement to build a watermarking system that would help identify AI-generated work.

The briefing had representatives from seven of the world's biggest names in the field of AI (ChatGPT-maker OpenAI, Microsoft, Google, Amazon, Meta, Anthropic, and Inflection) agreeing to build a fingerprinting system that would curb the risk of fraud and deception involving AI-generated work.

The core idea is "developing robust technical mechanisms to ensure that users know when content is AI generated, such as a watermarking system." Think of it as DNA fingerprinting, but for AI-generated work like paragraphs of text, lines of code, a visual illustration, or audio clip.

The watermark may not be visible, but it would be integrated at a technical level so that verification is likely foolproof. There are already AI-checking tools out there, but they are error-prone, which can lead to devastating consequences professionally and academically. While teachers and professors are wary of students using AI tools to cheat, students worry that AI checker tools can incorrectly flag their work as plagiarized.

Even OpenAI warns that its classifier tool to distinguish between human and machine-written text is "not fully reliable." Right now, details on this AI watermarking system are slim, but it's definitely a step in the right direction, especially at a time when AI tools are posing job risks and have opened a world of scams.

Taking positive, collective strides

The focus is on safety, transparency, security, and trust. The companies invited to the White House briefing agreed to internal as well as external testing of their AI tools by experts before they enter the public domain. The AI labs have also promised that they won't sit on threats posed by artificial intelligence, committing to share information about those threats with industry experts, academic experts, and civil society.

On the security front, the companies have pledged to create internal safeguards and to release their AI models only after rigorous testing. To ensure that cybersecurity risks are minimized, these AI stalwarts have also agreed to allow independent experts to check their products and to provide an open pathway for reporting vulnerabilities.

Another notable piece of the commitment is that AI labs will report their "AI systems' capabilities, limitations, and areas of appropriate and inappropriate use." This is of crucial importance, because current-gen AI systems have some well-known issues with accuracy and bias in multiple forms.

Finally, the AI tool-makers have also agreed to dedicate efforts and resources to AI development in a fashion that contributes to the well-being of society, instead of harming it. Efforts would be made towards using AI to tackle challenges such as the climate crisis and cancer.

AI experts and industry stakeholders have already signed pledges towards responsible AI development, and this is another ethical step forward for AI.