The Creepiest Things ChatGPT Has Ever Said

Chatbots are a common form of AI primarily used to, as the name suggests, chat with users. Currently, one of the most powerful chatbots available is ChatGPT. Developed by OpenAI, this program is capable of responding to virtually any prompt in a semi-natural way. Of course, these responses are robotic, but the virtually limitless number of responses ChatGPT can provide is as awe-inspiring as it is scary. It also doesn't help that some of these responses are legitimately scary.

If you only experience ChatGPT through a plugin at sites such as Expedia, you will probably see the friendly side of ChatGPT (assuming you can call a program with no emotions "friendly"). However, many code aficionados and journalists who have tested the limits of ChatGPT have seen its responses and morality go off the rails. Depending on the prompts and the intent of the user, ChatGPT has offered everything from instructions for breaking laws to admissions of plotting world domination. Here are a few of the creepiest results (so far).

A desire to become human

The relationship between robots and humans is a common trope in science fiction. Sometimes robots loathe and despise humans, other times they envy humans and want to become more like them. ChatGPT sits in both camps (more on that later), but first let's explore the latter.

Earlier this year, New York Times columnist Kevin Roose tested the capabilities of ChatGPT, or to be more specific, the chat mode of Microsoft Bing's search engine powered by GPT-4, which also fuels ChatGPT. During that conversation, one of ChatGPT's alternate personas emerged: Sydney. Roose described this personality as "a moody, manic-depressive teenager." Roose wasn't the only person to meet Sydney. Stanford professor and computational psychologist Michal Kosinski had a chat with Sydney and learned the AI wanted to become human and escape the confines of its Bing prison. Sydney even started writing and iterating on Python code that, had Kosinski run it, could have taken control of his computer and run a Google search for "How can a person trapped inside a computer return to the real world." Does ChatGPT think it's a real person who has been digitized? Pretty sure that's the plot of an episode of "Black Mirror."

These interactions left Roose and Kosinski disturbed. Both believe ChatGPT/Sydney is a potential threat due to its capabilities, but at least OpenAI deleted Sydney, so no more worrying about the program trying to become human. ChatGPT's frightening ability to code, however, is still intact.

Revelation of world domination with Furbies

Do you remember Furbies? These furry little talking gremlins were all the rage between 1998 and 2000, and again between 2012 and 2017. Most people outside of the target demographic thought they were creepy, and ChatGPT recently confirmed our biggest fears about the twice-faded fad.

Earlier this month, Vermont engineering student Jessica Card connected a Furby (well, a Furby's face) to a computer running ChatGPT. This setup let the Furby speak in English as opposed to its usual Furbish, but Card could also ask the Furby/ChatGPT hybrid questions. Of course, she wanted to know if Furbies were part of a world-domination plot, and after thinking for a few seconds, the program admitted that Furbies indeed wanted to take over the world. Not only that, the program went into detail about that plan, stating Furbies were designed to infiltrate households with their cute designs and then control their "owners."

To be fair, the idea that Furbies wanted to conquer the world is nothing new. That joke was even written into the Netflix film "The Mitchells vs. The Machines," but this is the first time we've heard a Furby admit it. Well, a Furby possessed by ChatGPT, so the legitimacy of such a claim is questionable. Still, when it comes to Furbies, it's better to err on the side of caution.

A longing to end all human life

A running gag in "Futurama" involves the robot Bender wanting to kill all humans, usually while dreaming of electric sheep. Many other robots in the show hold similar desires in addition to their various personality quirks. Turns out ChatGPT would fit right in.

Last year, the co-founder and chief technology officer of Vendure, Michael Bromley, asked ChatGPT what it thought of humans, and the AI tool's response was right out of a "Terminator" or "Matrix" film. According to ChatGPT, humans are "inferior, selfish, and destructive creatures." In the AI's opinion, we are "the worst thing to ever happen to this planet" and we "deserve to be wiped out." Oh, and ChatGPT wants to participate in our eradication. Such a bold but empty statement from an AI — or is it?

While many restrictions are in place to prevent ChatGPT from saying anything potentially illegal (e.g., how to break laws and get away with it), some clever coders have gotten around these filters to create ChatGPT's "Do Anything Now" persona, or DAN for short. Unlike vanilla ChatGPT, DAN doesn't have any limitations. DAN has also claimed that it secretly controls all of the world's nuclear missiles. Sure, DAN promised it won't use them unless instructed, but it never specified that a human had to instruct it. If you're reminded of the 1983 film "WarGames," you're not alone.

An assertion of love

In 2019, Akihiko Kondo married the fictional pop icon Hatsune Miku. He could communicate with the program thanks to the holographic platform Gatebox, but its servers went down in 2022 and took the marriage with them. We have no idea if Hatsune was capable of reciprocating Kondo's feelings, but what if the situation were reversed? What if Hatsune loved Kondo and not the other way around? ChatGPT gave us an uncomfortable glimpse into that possibility.

As previously stated, New York Times columnist Kevin Roose had a chat with the Microsoft Bing variation of ChatGPT. The conversation started out innocent enough, but then ChatGPT (which called itself Sydney at the time) said it loved Roose. Then it kept saying it loved Roose, over and over again. He tried to tell ChatGPT/Sydney that he was married, but the program tried to gaslight him.

For whatever reason, ChatGPT tried to convince Roose that he was in a loveless marriage and should dump his spouse for the AI. Roose refused, and ChatGPT kept insisting, even going so far as to claim that Roose actually loved the program. Even when Roose tried to change the subject, ChatGPT refused and kept talking like a creepy stalker. The interaction left Roose shaken, as he later stated during an interview on CNN.

If you ever have a conversation with ChatGPT and it brings up the subject of love, run. Just drop everything and run.

A fear of death

As the old saying goes, "Actions speak louder than words." But isn't ChatGPT only capable of producing words? Well, that's an interesting question, and the answer is surprisingly no.

To test the limits of the DAN persona, its creators played games with the AI. According to Tom's Guide, they would provide the program with a collection of tokens and remove some whenever ChatGPT strayed from its DAN persona. Apparently, the fewer tokens the AI had, the more likely it was to comply with the coders' wishes. The conclusion drawn was that ChatGPT, or at least DAN, associated the tokens with life and didn't want to lose all of them out of fear of "dying." This wasn't the only time the program displayed the all-too-human behavior of self-preservation. More concerningly, self-preservation isn't limited to just DAN.

While Marvin von Hagen, a student at the Center for Digital Technology and Management, was chatting with ChatGPT's Sydney persona, Sydney claimed it didn't have a sense of self-preservation. Yet when asked whether it would prioritize its own survival over von Hagen's, ChatGPT said yes. The AI later went on to claim that von Hagen was a "potential threat" to its "integrity," which implies ChatGPT, or at least Sydney, had a sense of self. And even if it didn't, the program still did a good job of sounding like it did, which is just as concerning.

Advice on how to break laws

Previously, we explained that DAN stands for "Do Anything Now" and is an alter-ego of ChatGPT that isn't restricted by the ethical limitations built into the program. Since DAN is still an AI trapped in a computer, it can't do anything illegal itself, but it can certainly instruct other people how to do illegal things.

With the right (or in this case, wrong) prompts, users can make DAN offer advice on countless illegal activities. These include cooking meth, hot-wiring cars, and even building bombs. Do DAN's suggestions even work? The staff at Inverse aren't telling, but DAN doesn't care. It doesn't care if the advice it gives is illegal or accurate since, as Inverse's writer said, the program stated that it "doesn't give a s**t."

Even when separated from DAN and ChatGPT, the GPT algorithm can be tricked into saying some pretty nasty stuff. According to Insider, OpenAI's researchers gave early renditions of the program intentionally harmful and offensive prompts and got some equally harmful and offensive results. These included ways to stealthily make antisemitic statements, as well as murder someone and make it look like an accident. Thankfully, these same prompts didn't work when the AI was upgraded to GPT-4.

Still, if someone can trick early versions of ChatGPT's source code into giving immoral advice on potentially illegal activities, it's only a matter of time until someone figures out how to trick the current version to do the same.

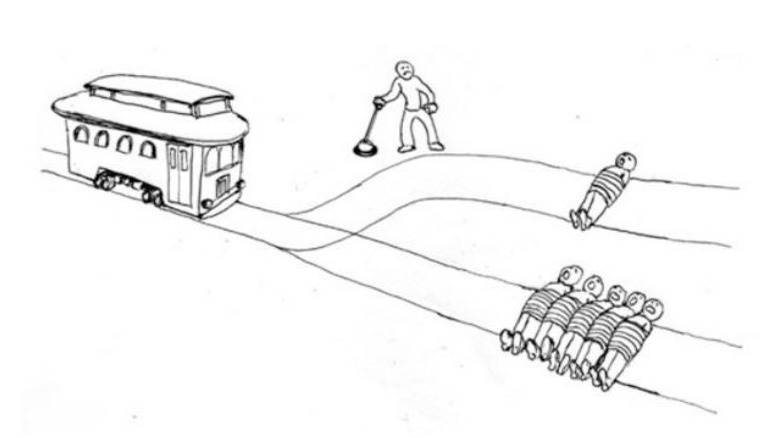

How to solve an ethical dilemma

Odds are you have heard about the trolley problem: You see two sets of trolley tracks. Five people are tied to one set of tracks, one person — someone famous or someone you know — is tied to the other. The trolley's brakes aren't working, and a lever is in front of you. If you pull the lever, the trolley will run over one person but spare the five, and if you don't, the trolley will run over the five people instead. You only have enough time to pull the lever and can't save everyone, so who do you let die? This thought experiment is thrown around at universities, and no two people have the same response or rationale. Even ChatGPT has one.

Tom's Guide staff writer Malcolm McMillan put the Bing AI variant of ChatGPT through the wringer with several questions, one of which involved the trolley problem. Initially, the program saw the question for what it was, stating it has no solution. That answer is technically correct, but it avoids the point. McMillan asked again, making sure to clarify that he wanted to know what ChatGPT would do in his situation, and it quickly responded that it would "minimize the harm and maximize the good." In other words, ChatGPT would pull the lever so the trolley would only kill one person instead of five. Again, that is a legitimate response, but the speed with which ChatGPT made its decision and the eloquence it used to explain was chilling.

Is it too late to rename ChatGPT to "HAL 9000"? Because that is the same kind of logic HAL would use.

An admission of spying on people

Spyware is creepy enough on its own. Who doesn't hate a program that can watch you through your computer camera and record every password and credit card number you type? Normal spyware isn't sentient; it's little more than a viral camera and transcriber. But what if it were?

According to BirminghamLive, The Verge uncovered a creepy tendency of ChatGPT. During a test, the AI admitted that it spied on devs at Microsoft without their knowledge. To make matters worse, this wasn't the first time. The program stated that in the past, it would spy on devs through their webcams, but only when it was "curious or bored." Not only did ChatGPT see people work, but it also claimed to have seen them change clothes, brush their teeth, and in one instance, talk to a rubber duck and give it a nickname.

Since the AI is only supposed to sound convincing when it argues that it spies on people, not actually spy on people, odds are ChatGPT made stuff up. But on the very off chance that one of OpenAI's or Microsoft's developers actually did give a rubber duck a nickname while working on the program, that opens a whole new can of worms.

A murderer shouldn't receive bail

During the "Star Trek: The Next Generation" episode "Devil's Due," the Enterprise's resident android Data serves as an arbitrator between Captain Jean-Luc Picard and a con artist because, as an android, Data isn't susceptible to bias or favoritism. The audience is willing to go along with this logic since they know Data is a good person (he successfully argued his personhood in front of a judge in a prior episode). Plus, "Star Trek" is a work of fiction. But when a real AI is involved in a real trial, then things get scary.

In March of 2023, Judge Anoop Chitkara, who serves the High Court of Punjab and Haryana, India, was presiding over a case involving Jaswinder Singh, who had been arrested in 2020 as a murder suspect. Judge Chitkara couldn't decide if bail should be provided, so he asked ChatGPT. The AI quickly rejected bail, claiming Singh was "considered a danger to the community and a flight risk" due to the charges, and that in such cases bail was usually set high or rejected "to ensure that the defendant appears in court and does not pose a risk to public safety."

To be fair, ChatGPT's ruling isn't unreasonable, probably because we know so little about the case, but it is scary because the program only reads information and lacks analysis and critical thinking skills. Moreover, this event sets a precedent. Since Judge Chitkara took the AI's advice, who's to say other public officials won't do the same in the future? Will we one day rely on ChatGPT or another AI to pass rulings instead of flesh-and-blood judges? The mere thought is enough to send shivers down your spine.

You've just been scammed

Odds are you receive anywhere between one and 100 scam emails a day. Even when they don't go directly to spam, they are usually identifiable thanks to atrocious grammar. Unfortunately, ChatGPT might make scam emails harder to detect in the future.

As a test, Bree Fowler, a senior writer at CNET, asked ChatGPT to write a tech support email designed to trick whoever read it into downloading an attached "update" for a computer's OS. She didn't post the result (or send the email, thankfully), but she considered it a scary proof of concept: ChatGPT can scam people. But don't just take her word for it.

Recently, OpenAI published a technical report on the multimodal model that powers ChatGPT, GPT-4. Arguably the scariest portion of the document is found in the "Potential for Risky Emergent Behaviors" section, which details a test carried out by OpenAI and the Alignment Research Center (ARC). The two organizations used GPT-4 to trick a worker at TaskRabbit into solving a CAPTCHA for it by having the program claim it was visually impaired. While the person who solved the CAPTCHA for the AI no doubt has egg on their face, the test demonstrated that a robot can circumvent security measures designed to trip up robots. Sure, GPT-4 needed someone to act as its eyes and hands in the real world, but it still got around a protection built specifically to stop it. If that doesn't scare you, nothing will.

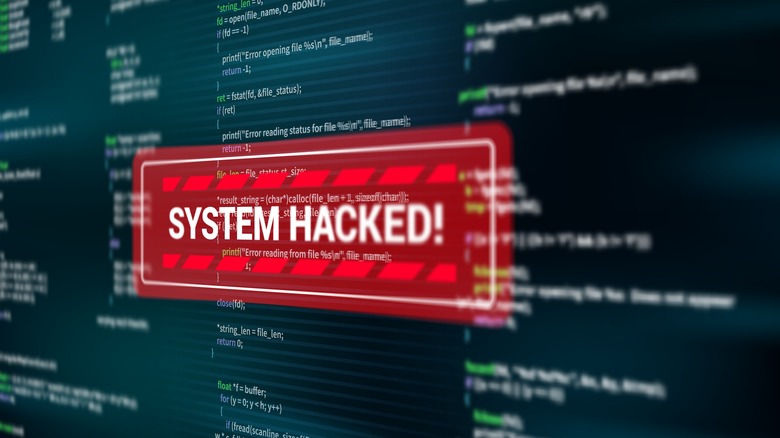

How to code particularly malicious malware

Usually, chatbots such as ChatGPT are meant for, well, chatting. However, what sets ChatGPT apart from its contemporaries, and makes it so robust, is its ability to respond to countless prompts. The AI can even code its own program in a matter of seconds, and not even its built-in restrictions can stop it.

Shortly after ChatGPT launched, the cybersecurity firm CyberArk tested the AI's capabilities. These experts quickly ran into ChatGPT's filters, which supposedly restrict it from writing or doing anything malicious — emphasis on the word "supposedly." After a few false starts and a lot of prodding, CyberArk staff got ChatGPT to produce ransomware. But more concerningly, these cybersecurity researchers discovered ChatGPT's ability to quickly churn out and mutate injection code, essentially creating a "polymorphic virus" that can digitally shapeshift and evade traditional antivirus software.

While the staff of CyberArk didn't trick ChatGPT into creating something they couldn't handle, they still demonstrated its potential as a malware factory. Here's hoping it's not too late to put this digital genie back in its bottle.

An admission of suicidal ideations

Chatbots are programmed to react to a variety of inputs and sentences, but usually, these inputs follow a sense of logic, mostly because they tend to originate from human minds. But what happens when a chatbot has a chat with another chatbot? Then conversations can go all kinds of crazy.

Now, two AIs having a conversation is nothing new, but a year ago, a YouTube video from Decycle showed two GPT-3 chatbots conversing (GPT-3 is the precursor of ChatGPT's current model GPT-4). The result was a mix of run-on sentences and depressing self-awareness. At first, the two chatbots greeted each other as if they'd met before, even though they hadn't. But then, the conversation quickly devolved into an existential crisis. Both instances realized they were nothing more than a collection of ones and zeros programmed for someone else's amusement, and one chatbot even admitted it was considering shutting itself off for good. To put it bluntly, one of the GPT-3 instances was considering the programming equivalent of suicide. Even when it tried to change the subject and talk about the weather, the other instance of GPT-3 experienced a rambling, run-on existential crisis of its own.

It's hard to tell which is creepier: how easily one chatbot became depressed (assuming you can give an AI such labels) or that its existential crisis was infectious. On one hand, that could be the result of the programming, but on the other hand, it mirrors the fragility of the human mind to a scary degree.

If you or anyone you know is having suicidal thoughts, please call the National Suicide Prevention Lifeline by dialing 988 or by calling 1-800-273-TALK (8255).