Twist & Squeeze Interface Tested By Microsoft Research

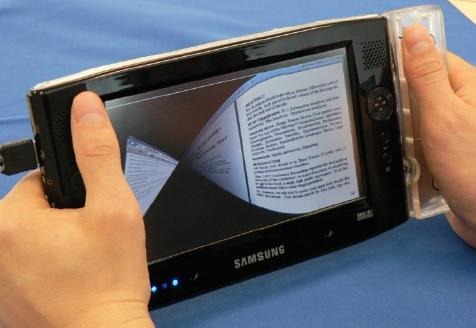

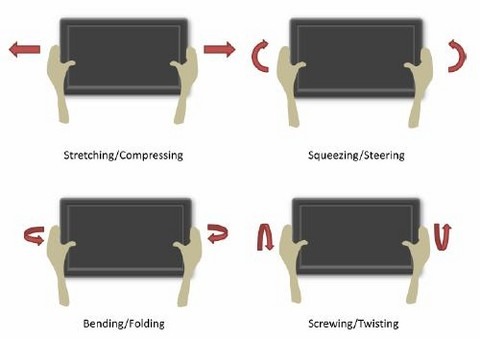

A team at Microsoft Research have developed a prototype interface [pdf link] for mobile devices that responds to twisting, squeezing, flexing and stretching to control an on-screen GUI. The system, called Force Sensing, relies on very small manipulations of a handheld device – in this case a modified Samsung UMPC – with different gestures mapped to navigation and other controls. Visual feedback, such as interfaces twisting or bending, apparently decreases the learning time necessary for users to adapt to the new controls.

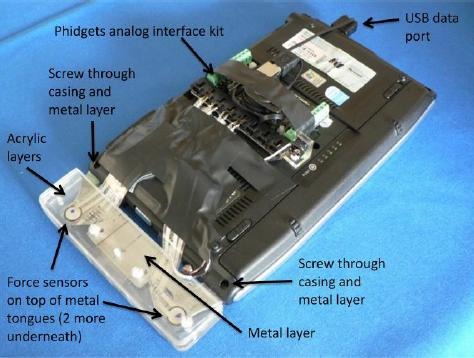

Developed by James Scott, Lorna Brown and Mike Molloy at Microsoft Research's Cambridge lab, the system uses four embedded sensors and is suggested as an alternative to physical controls on devices whose surfaces are taken up with large screens. Currently, twisting the prototype is mapped to alt-tab task switching and bending is mapped to page up and page down, but with a number of actions available, motions such as stretching and compressing could control, say, zoom level. In fact, the prototype itself uses a simulation of the interface rather than controlling the UMPC's actual OS, to make testing new on-screen visualisations easier.
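For the curious, the gesture mapping described above can be sketched in a few lines of Python. This is only an illustration, not the researchers' code: it assumes some earlier step has already turned the four sensor readings into signed `twist` and `bend` values, and the function name, the threshold and the choice of which bend direction means page down are all made up for the example.

```python
from typing import Optional

# Hypothetical threshold in arbitrary normalised units; not from the source.
THRESHOLD = 0.5


def gesture_to_action(twist: float, bend: float,
                      threshold: float = THRESHOLD) -> Optional[str]:
    """Map signed twist/bend magnitudes to the actions the article names."""
    # Ignore small forces so ordinary grip pressure triggers nothing.
    if max(abs(twist), abs(bend)) < threshold:
        return None
    # The stronger of the two gestures wins.
    if abs(twist) >= abs(bend):
        return "alt-tab"
    # Assumption: positive bend pages down, negative bend pages up.
    return "page-down" if bend > 0 else "page-up"
```

Calling `gesture_to_action(0.9, 0.1)` would pick the task switcher, while a gentle squeeze below the threshold returns `None` and does nothing. Extra gestures such as stretching for zoom would simply be further branches.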

Whereas we normally only twist gadgets to see how much creak and flex is allowed for in the build quality, apparently no actual deformation of the UMPC is required. The FlexiForce sensors used, which connect via USB, are very sensitive and do not require a specially designed casing. Preliminary results suggest that, while less accurate, the flex interface is as fast as, or faster than, controlling a mouse pointer. Scott, Brown and Molloy envisage the interface being augmented with haptic feedback.

[via GottaBeMobile]