TikTok Automates Video Moderation: Here's How You May Get Banned

TikTok plans to automatically remove videos believed to contravene its policies around violent, adult, and illegal content, aiming to make moderation faster and more effective as the social network grows. Currently, TikTok relies on a US-based Safety team, the company says, which is responsible for checking every video flagged either by users or an automated system.

That can be time-consuming, however, and so TikTok now plans to give more power to the automated moderation system. Starting in the next few weeks, the company confirmed today, it will allow the system to automatically remove some types of content that break the rules.

"Automation will be reserved for content categories where our technology has the highest degree of accuracy," TikTok's Eric Han, Head of US Safety, explains, "starting with violations of our policies on minor safety, adult nudity and sexual activities, violent and graphic content, and illegal activities and regulated goods."

The enhanced system has already been trialed in other markets, Han says, and will now be introduced in the US and Canada. For users, it'll appear much like business as normal, with the ability to appeal video removals – whether automated or manual – and report potential violations of the content policy. The system's capability and accuracy are expected to improve over time, Han suggests.

It's not just about dealing with a growing number of TikTok uploads, however. Increasing attention has been focused on the mental health impact of content moderation on the teams of people who perform it at social networks, and the cumulative effect of repeatedly seeing violent, explicit, or otherwise disturbing photos and videos. Facebook, YouTube, and other companies have been criticized for giving insufficient consideration to their moderation staff, many of whom are contract employees who fall outside of ostensibly protective policies.

"In addition to improving the overall experience on TikTok, we hope this update also supports resiliency within our Safety team by reducing the volume of distressing videos moderators view and enabling them to spend more time in highly contextual and nuanced areas, such as bullying and harassment, misinformation, and hateful behavior," Han says.

In places where the enhanced system has already been rolled out, TikTok says the false positive rate for automated removals is 5 percent. Meanwhile, the number of appeal requests from creators has remained consistent with when the process was handled manually.

How TikTok content violations work

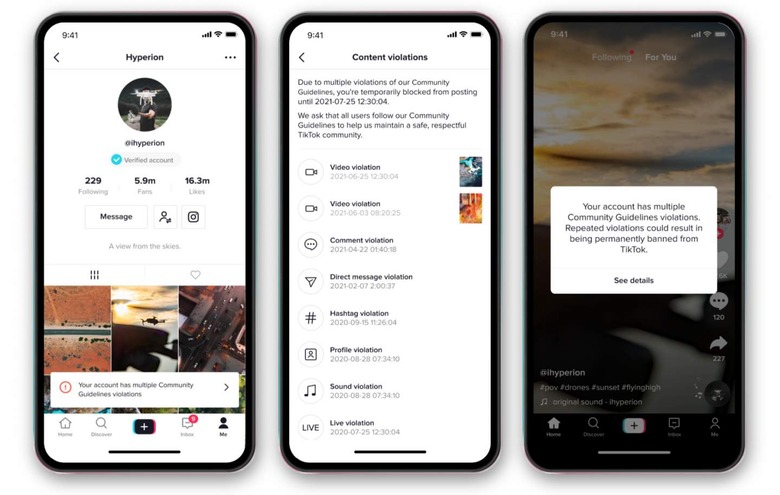

Like other services, TikTok has a series of escalating penalty tiers for creators who commit content violations. In addition to the new automated moderation system, it's also trying to make the potential repercussions of falling foul of the rules clearer.

A first violation will see a warning issued in the TikTok app. Subsequent violations will suspend the account's ability to upload video – or to comment or even edit its profile – for 24 or 48 hours. The exact length of time will depend on both the severity of the violation and any previous violations.

Accounts can also be restricted to a view-only experience for 72 hours or up to a full week. In that mode, users can watch videos but cannot post or comment on them.

After several violations, a notification will warn the user that "their account is on the verge of being banned," Han explains. If they continue with the same behaviors, the account will be permanently removed.

If, of course, the violation is of a so-called "zero-tolerance policy" – which includes things like posting child sexual abuse material – then an account will be automatically removed. TikTok may also block a device completely in such circumstances, preventing it from being used to create new accounts.
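For readers who think in code, the escalation ladder described above can be sketched roughly as follows. This is only an illustration of the tiers as the article describes them, not TikTok's actual implementation: the `Account` class, the `apply_violation` function, the tier names, and the `FINAL_WARNING_AFTER` threshold are all hypothetical, since TikTok says only that the final warning comes "after several violations." Restriction lengths are left out because they depend on severity and history.

```python
from dataclasses import dataclass

# Hypothetical threshold: TikTok only says "several violations".
FINAL_WARNING_AFTER = 4


@dataclass
class Account:
    violations: int = 0
    banned: bool = False


def apply_violation(account: Account, zero_tolerance: bool = False) -> str:
    """Record one violation and return the resulting penalty tier."""
    if account.banned:
        return "banned"

    # Zero-tolerance violations skip the ladder entirely.
    if zero_tolerance:
        account.banned = True
        return "banned"

    account.violations += 1

    if account.violations == 1:
        return "warning"  # first violation: in-app warning
    if account.violations < FINAL_WARNING_AFTER:
        # Upload/comment suspension or view-only mode; the length
        # depends on severity and history, which is not modeled here.
        return "temporary_restriction"
    if account.violations == FINAL_WARNING_AFTER:
        return "final_warning"  # "on the verge of being banned"

    account.banned = True  # continued violations after the final warning
    return "banned"
```

Calling `apply_violation` five times on a fresh `Account` walks through a warning, two temporary restrictions, a final warning, and then a ban, while a single call with `zero_tolerance=True` bans the account immediately.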