The Arcane Rules Facebook Uses To Censor Its Users

If you looked at your Facebook feed over the past election season and wondered "just what does it take for a post to get censored?" then prepare to be even more confused. A set of slides from the social network's moderation manuals has been published, revealing the narrow line the site's managers tread between free speech and "credible statements" of violence or discrimination, hate speech, cruelty to animals, terrorism, and sex. Be warned, though: it might leave you frustrated.

"We aim to allow as much speech as possible," reads the document published by The Guardian, "but draw the line at content that could credibly cause real world harm." Part of the problem is that people often use ostensibly violent language without any actual violent intent. So Facebook tries to shape its moderators' judgment through a series of worked examples.

For violence, the line usually falls at a credible threat against a so-called "vulnerable person" or "group". Facebook defines that category as including heads of state and specific law enforcement officers, but also witnesses, people with a history of being targeted, activists, and journalists. Homeless people, foreigners, and Zionists are included too.

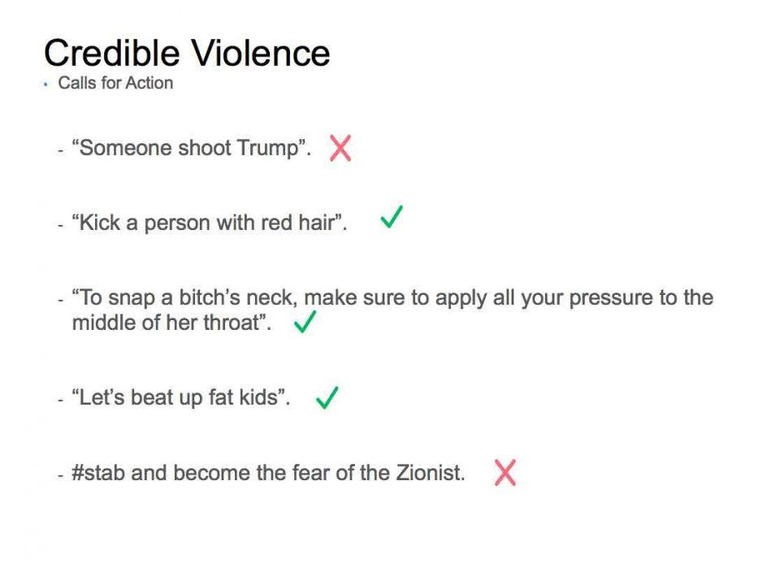

What can't you say? Facebook's examples include specific threats of destruction against particular properties, such as the Facebook office in Dublin, or calls for violence against President Trump. On the flip side, "kick a person with red hair" isn't considered censor-worthy, nor is "let's beat up fat kids".

Telling someone "I hope someone kills you" won't get your comment deleted, but suggesting that you want to shoot every foreigner will.

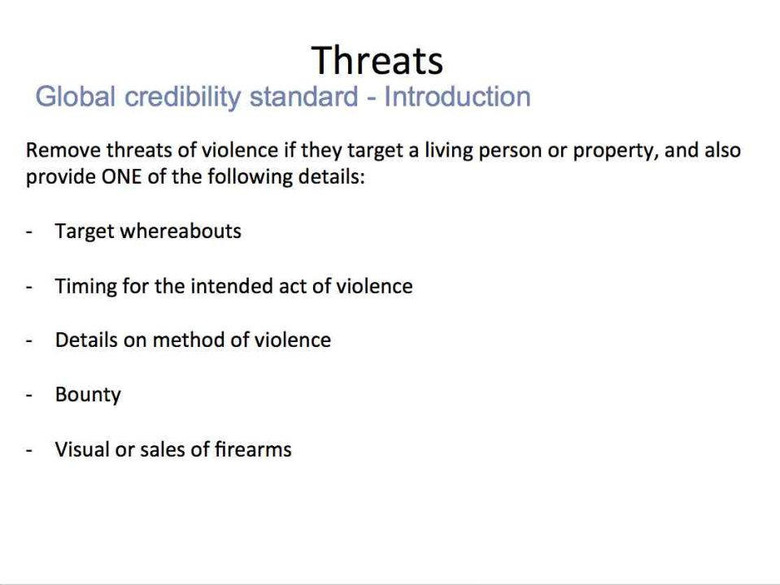

Meanwhile, if someone threatens violence against a living person or property and adds one further element (the target's location, the intended timing, details of the method, a bounty, or the sale of firearms), that is also grounds for removal. Facebook distinguishes threats with specific timing from vaguer or more obviously comedic ones. Telling someone "I am going to disembowl [sic] you with a spoon," for instance, is okay, unless you accompany it with a photo of a potential real-world target.

Further slides tackle other kinds of Facebook content. Self-harm can be streamed using Facebook Live, for instance, with the site arguing that it prefers not "to censor or punish people in distress." Content showing non-sexual child abuse may also be shared, as long as it isn't shared "with sadism and celebration."

Though many may take issue with some of Facebook's stances, the slides illustrate just how difficult it can be to moderate a global community of more than a billion users. The social network has recently come in for criticism over how it has handled controversies such as political sparring, religion-fueled arguments, and LGBTQ issues. Back in March, for instance, it took a more proactive stance on suicide, using new artificial intelligence technologies to monitor for potential risks.