Microsoft's Google Glass Rival Tech Tips AR For Live Events

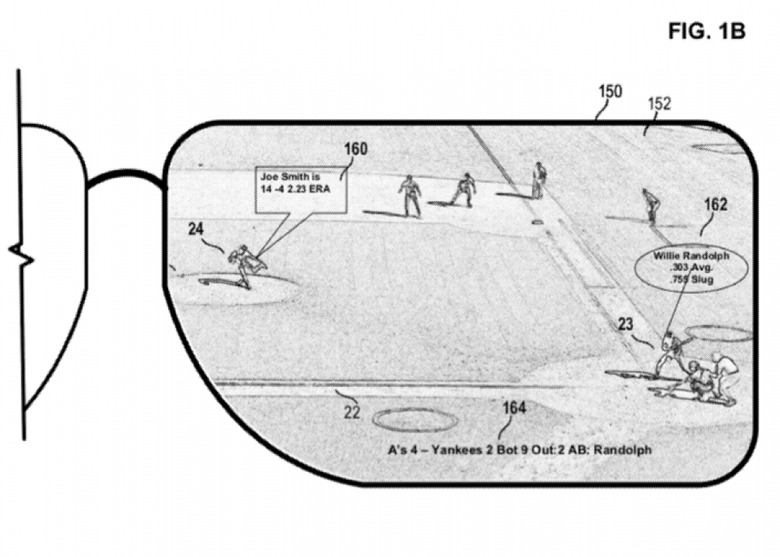

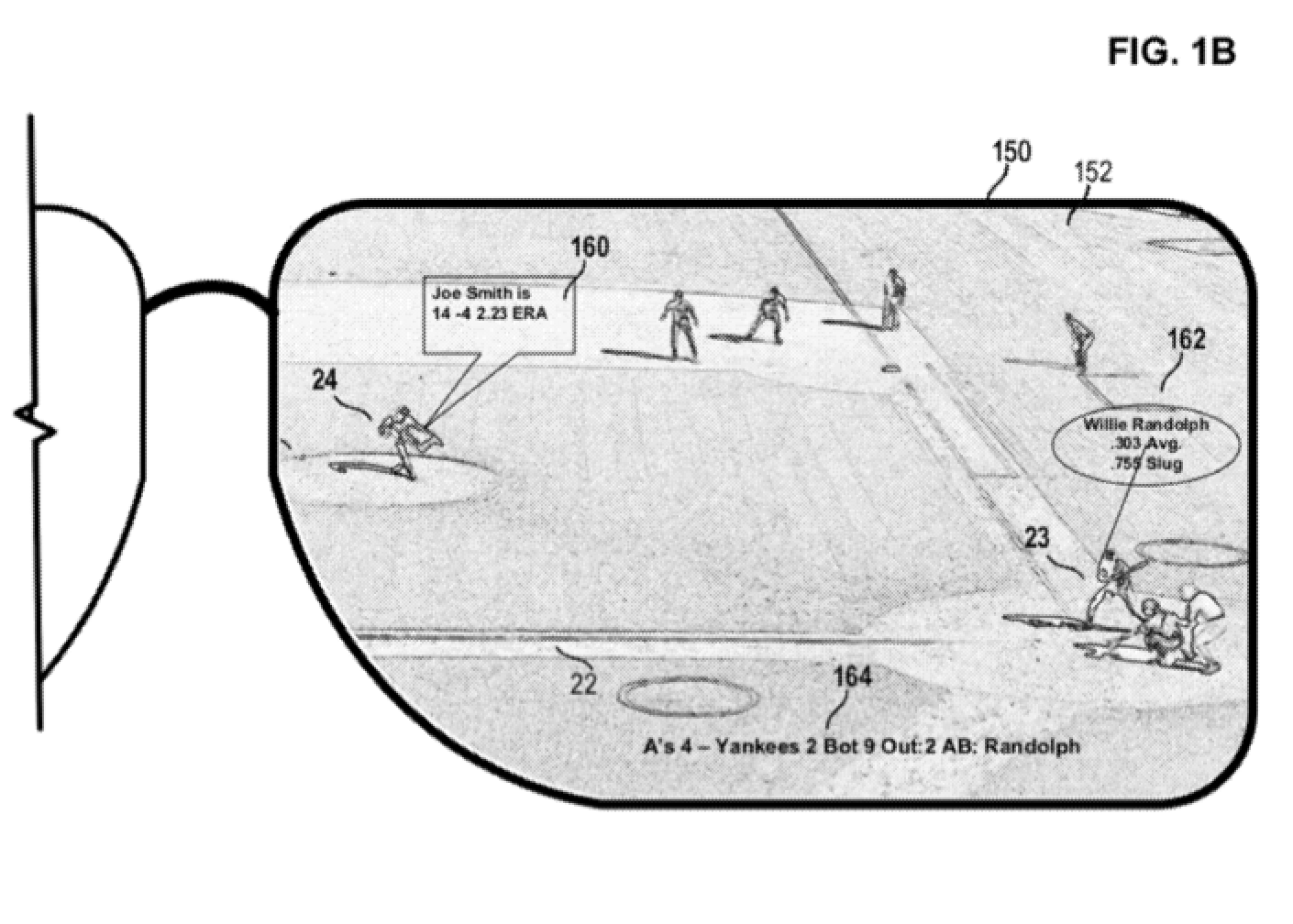

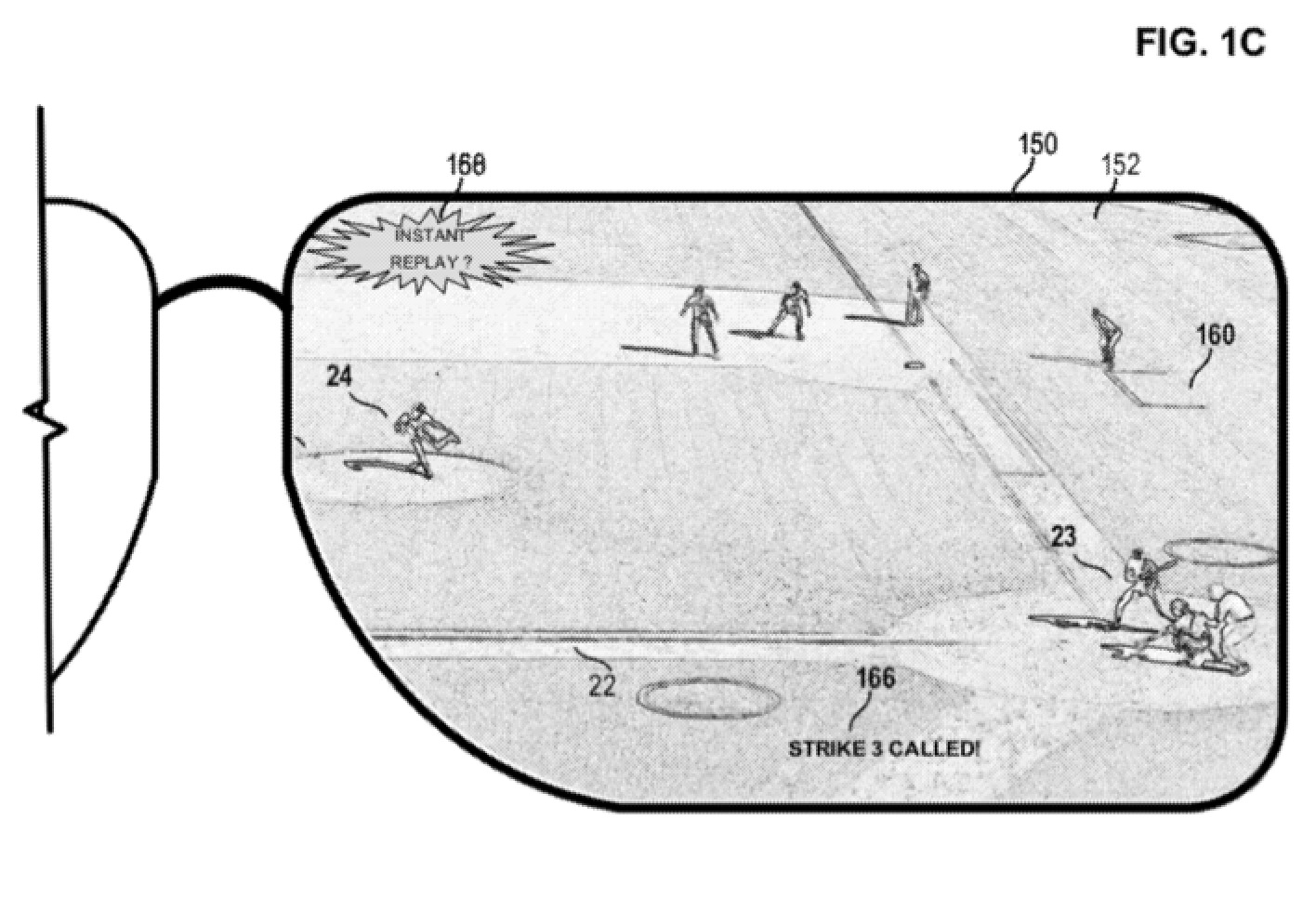

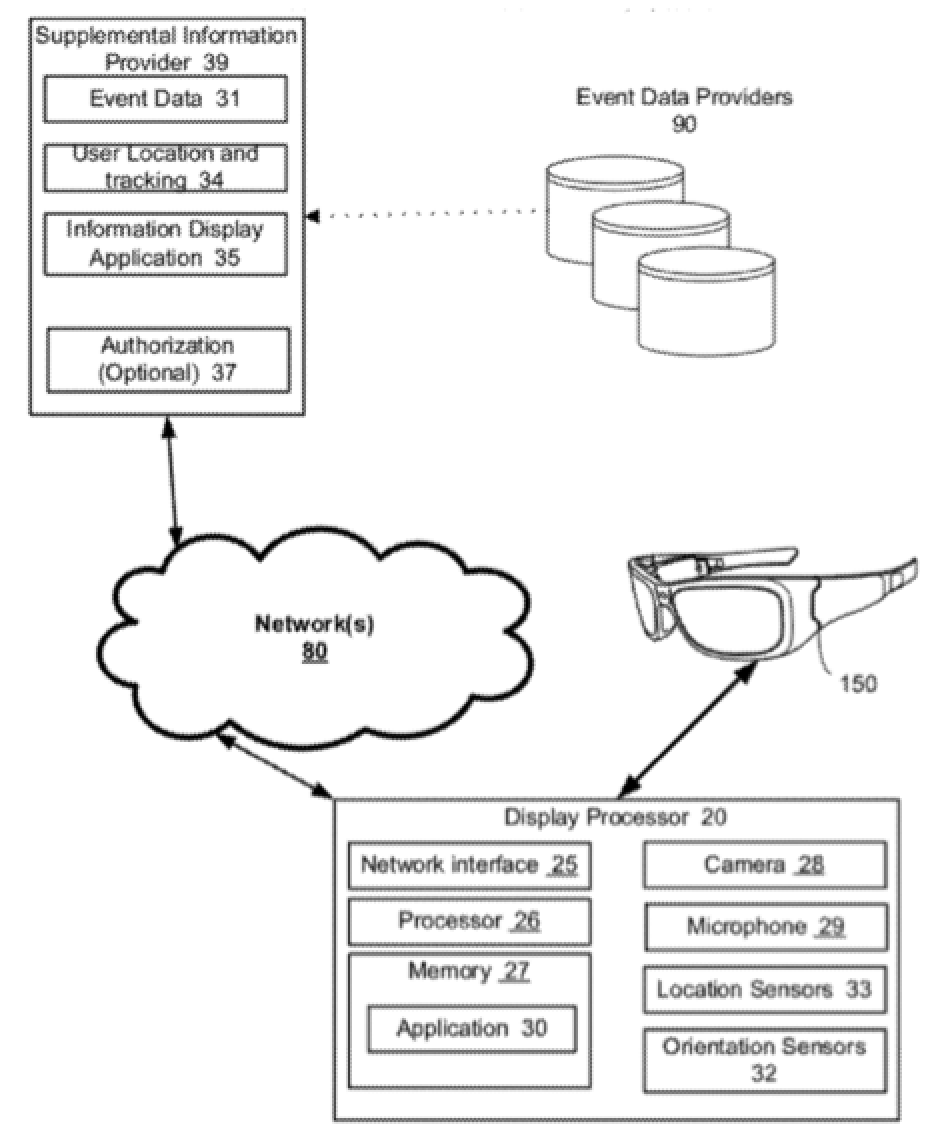

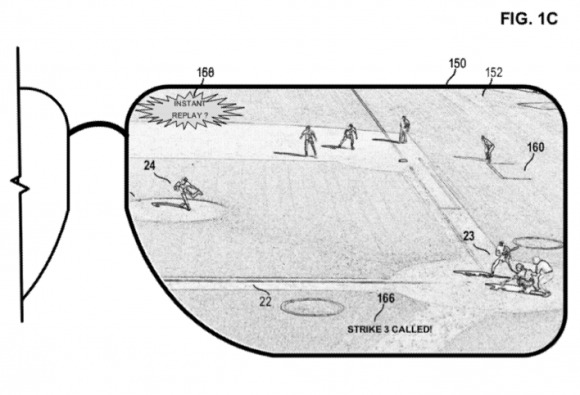

Microsoft is working on its own Google Glass alternative, a wearable computer that can overlay real-time data onto a user's view of the world around them. The research, outed in a patent application published today for "Event Augmentation with Real-Time Information" (No. 20120293548), centers on a special set of digital eyewear with one or both lenses capable of injecting computer graphics and text into the user's line of sight, such as to label players in a sports game, flag up interesting statistics, or even identify objects and offer contextually relevant information about them.

The digital glasses would track the direction in which the wearer was looking, and adjust their on-screen graphics accordingly; Microsoft also envisages a system whereby eye-tracking is used to select areas of focus within the scene. Information shown could follow a preprogrammed script – Microsoft uses the example of an opera, where background detail about the various scenes and arias could be shown in order – or on an ad-hoc basis, according to contextual cues from the surrounding environment.
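To make the direction-tracking idea concrete, here is a minimal sketch of how an overlay might decide which labels to draw and where. It is not taken from the patent application: the `Label` type, the `visible_labels` function, the 40-degree field of view and the 640-pixel display width are all hypothetical, and the sketch only handles the horizontal axis.

```python
from dataclasses import dataclass


@dataclass
class Label:
    text: str
    bearing_deg: float  # hypothetical: compass bearing from wearer to the object


def visible_labels(labels, heading_deg, fov_deg=40.0, screen_width_px=640):
    """Return (text, x_px) for each label inside the horizontal field of view.

    heading_deg is the direction the wearer is facing; fov_deg and
    screen_width_px are assumed display parameters, not figures from the patent.
    """
    placed = []
    half_fov = fov_deg / 2.0
    for label in labels:
        # Signed angle between the object and the centre of view, in [-180, 180).
        offset = (label.bearing_deg - heading_deg + 180.0) % 360.0 - 180.0
        if abs(offset) <= half_fov:
            # Linear mapping: left edge of view -> 0, right edge -> screen width.
            x_px = round((offset + half_fov) / fov_deg * screen_width_px)
            placed.append((label.text, x_px))
    return placed


scene = [
    Label("Player 10", 10.0),
    Label("Scoreboard", 350.0),
    Label("Exit", 180.0),
]
print(visible_labels(scene, heading_deg=0.0))
```

With the wearer facing a heading of 0 degrees, the two labels within 20 degrees of centre are placed on screen and the one directly behind is dropped. A real headset would also need vertical placement, depth, and sensor fusion from the inertial sensors.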

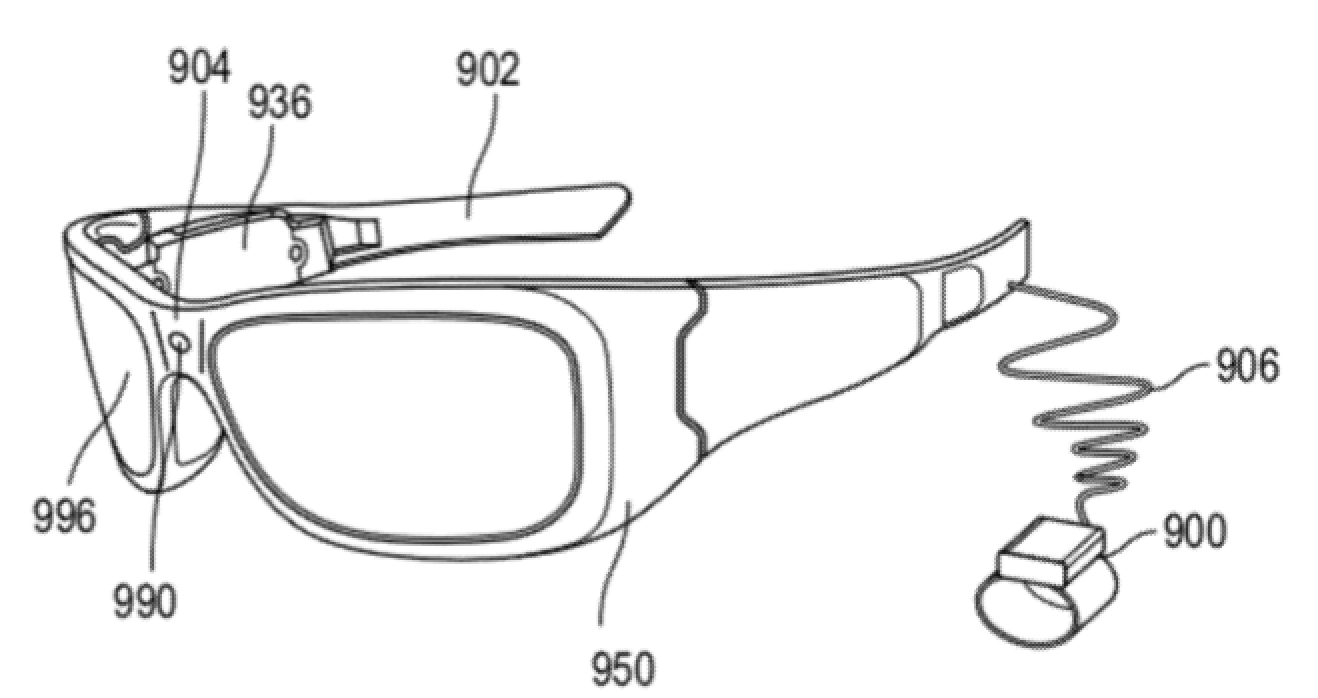

Opting into that data could be handled through social network check-ins, Microsoft suggests, or by the headset simply using GPS and other positioning sensors to track the wearer's location. The hardware itself could be entirely self-contained within the glasses, as per what we've seen of Google's Project Glass, or it could split off the display section from a separate "processing unit" in a pocket or worn on the wrist, with either a wired or wireless connection between the two.

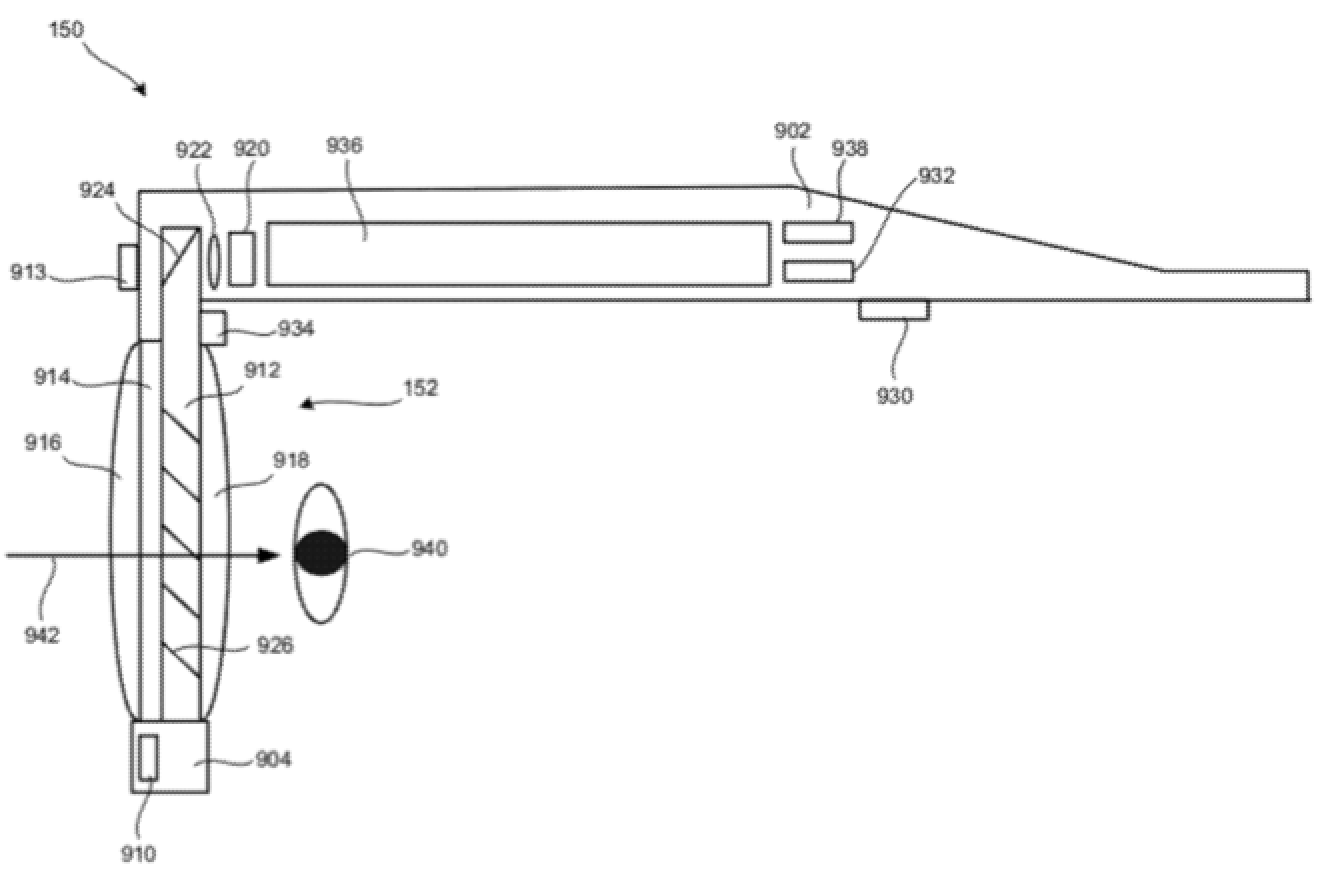

In Microsoft's cutaway diagram – a top-down perspective of one half of the AR eyewear – there's an integrated microphone (910) and a front-facing camera for video and stills (913), while video is shown to the wearer via a light guide (912). That (along with a number of lenses) works with standard eyeglass lenses (916 and 918), whether prescription or otherwise, while the opacity filter (914) helps improve light guide contrast by blocking out some of the ambient light. The picture itself is projected from a microdisplay (920) through a collimating lens (922). There are also various sensors and outputs, potentially including speakers (930), inertial sensors (932) and a temperature monitor (938).

Microsoft is keeping its options open when it comes to display types: as well as generic liquid crystal on silicon (LCOS) and LCD, there's the suggestion that the wearable could use Qualcomm's mirasol or a Microvision PicoP laser projector. An eye-tracker (934) could be used to spot pupil movement, either using IR projection, an inward-facing camera, or another method.

Whereas Google has focused on the idea of Glass as a "wearable smartphone" that saves users from pulling out their phone to check social networks, get navigation directions, and shoot photos and video, Microsoft's interpretation of augmented reality takes a slightly different approach in building around live events. One possibility we could envisage is that the glasses might be provided by an entertainment venue, such as a sports ground or theater, just as movie theaters loan 3D glasses for the duration of a film.

That would reduce the need for users to actually buy the (likely expensive) glasses themselves, and – since they'd only be required to last the duration of the show or game – the battery demands would be considerably lower than those of a device expected to run for a full day. Of course, a patent application alone doesn't mean Microsoft is intending a commercial release, but given the company's apparently increasing focus on entertainment (such as the rumored Xbox set-top box) it doesn't seem too great a stretch.

[via Unwired View]