Microsoft's Conversation Transcription Demo Wows As New Hardware Revealed

Microsoft has figured out real-time conversation transcription, revealing a new Azure-integrated conical reference design speaker along with a way to turn every phone and laptop in a meeting into an ad-hoc voice recognition array. The Build 2019 demo highlighted how edge devices and cloud processing could work better in harmony, and how the same technology could improve future smart speakers so that they understand multiple commands and do away with the wake word.

Everybody's speaking, Azure is listening

Voice to text isn't difficult, but trying to keep track of a conversation complete with overlapping speech is much harder. That's the nut which Microsoft says it has cracked, showing off a new Conversation Transcription system at Build 2019 this week. It massages the existing Azure Speech Service to support a combination of real-time, multi-person, far-field speech transcription and speaker attribution.

Microsoft previewed the system at Build 2018 last year, and is now making it publicly available, albeit through a gated preview that's taking applications. There are also partnerships with providers like Accenture, Roobo, and Avanade to commercialize the Conversation Transcription system.

In the Build 2019 demo, a meeting device was able to track multiple people talking and not only correctly transcribe them, but do so even during periods of "cross-talk." It uses both audio and video signals, with audio-visual fusion to help identify who is saying what. The edge device isn't responsible for the processing, unsurprisingly: instead, the data crunching is all done in the Azure cloud.

There's a new video and microphone array reference design

Last year, Microsoft set tongues wagging with a brief preview of new hardware. The black, cone-shaped gadget – lined with what looked like cooling fins – was topped with a fish-eye lens, and promised not only to hear and see everyone in a room, but transcribe them too. That came, you'll be unsurprised to hear, from a whole chunk of AI.

The pointy-topped speaker could automatically recognize meeting participants as they entered the room, for example, so that it would know when everybody was present. Through recognizing different voices and speech patterns, it could transcribe multi-person conversations, automatically breaking the text up according to who said what. Integration with Cortana, meanwhile, could help with finding a time on the calendar when everybody was free, and a room available for them to use.

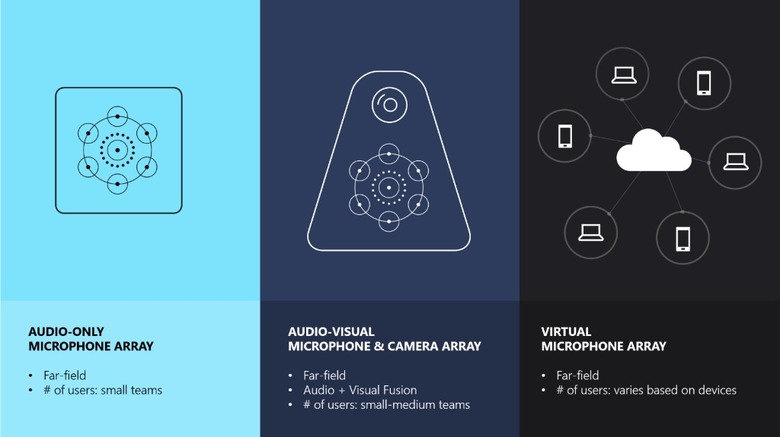

Now, Microsoft is making it available as a developer reference device, complete with a 360-degree microphone array and a 360-degree camera. The company already has a number of options for those wanting to try the Speech Devices SDK, ranging from simple multi-microphone arrays through to smart cameras like the Azure Kinect, and this conical speaker will join them. Pricing and availability are yet to be confirmed, though Microsoft tells us that it's only going to be offered to system integrators as a limited private preview. Whether those integrators opt to make a commercial product based on the same technology remains to be seen.

You might not even need a specific meeting microphone, though

Microsoft is also looking beyond specific hardware for better collaboration sessions and meetings. Dubbed Project Denmark, the effort effectively turns a group of existing devices with regular microphones – such as smartphones and laptops – into a dynamic, ad-hoc virtual microphone array.

The idea is that you wouldn't need a pro-quality far-field microphone in order to do things like conversation transcription. Instead, you'd virtually connect the phones or laptops – or both – of everybody in attendance, and Project Denmark would combine their audio to deliver better voice recognition than any single device might be capable of. Microsoft says that, with seven input audio streams, it achieves a 22.3-percent word error rate (WER) despite overlapping speech.
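For a sense of what that WER figure measures, here is a minimal sketch of the standard calculation: the number of word substitutions, deletions, and insertions needed to turn the system's transcript into the reference transcript, divided by the number of words in the reference. The function name `word_error_rate` and the sample sentences are our own illustrations, not anything Microsoft has published, and real evaluations typically normalize punctuation and casing first.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference length.

    Illustrative helper (hypothetical name), not part of any Microsoft SDK.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far (excluding the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from transcript
            insertion = curr[j - 1] + 1  # extra word in transcript
            curr[j] = min(substitution, deletion, insertion)
        prev = curr

    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One dropped word out of a six-word reference.
    print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
```

By this measure, a 22.3-percent WER means roughly one word-level error for every four or five words spoken.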

It's not just for the meeting room, however. Microsoft suggests that the Project Denmark system could also power more impromptu speech-based encounters. With the Microsoft Translator app running on multiple phones, all linked in a single virtual microphone array, for instance, better real-time translation could be enabled.

Home smart speakers could benefit next

If you're not in the habit of attending multi-person meetings, you might wonder how all this could benefit you. The good news is that the same research that allows for Microsoft's conversation transcription could also improve future smart speakers.

"While smart speakers are commercially available today," Microsoft points out, "most of them can only handle a single person's speech command one at a time and require a wake-up word before issuing such a command." With the new additions to the Azure Speech Service, however, future smart speakers could be far more attuned to when they're being spoken to, understand requests and commands even when multiple people are talking, and even deal with complex, multi-part instructions issued simultaneously by more than one person.

[Updated to reflect availability of the speaker reference design]