K-Glass AR Chip Promises All-Day Wearables Using Human Eye Tricks

A dedicated augmented reality chip that mimics human vision could open the door to wearables like Google's Glass running all day on a single charge, by analyzing only the most important details in view. The K-Glass AR processor, developed by the Korea Advanced Institute of Science and Technology (KAIST), takes a different approach from the do-it-in-software strategy that Glass and other recent wearables projects have adopted, relying on customized hardware to trim power consumption by up to 76 percent, according to researchers.

Wearables like Glass and Epson's Moverio usually rely on either location-based or image-processing systems to introduce augmented reality content into the user's vision. For instance, Field Trip on Glass uses the wearer's physical location to pull up nearby points of interest that the headset can display.

On Epson's Moverio, meanwhile, computer-vision analysis is used to spot glyphs like QR codes or markers and overlay digital graphics – whether menu UIs or animated dinosaurs – onto the scene.

The downsides of both systems are generally power consumption and accuracy, whether from running GPS or other positioning sensors permanently or from doing processor-intensive image recognition on every scene. To combat this, most wearables manufacturers have opted for relatively low-power components – such as the few-generations-old processor in Glass – and offloaded heavy-duty tasks to the cloud, which of course requires persistent wireless connectivity.

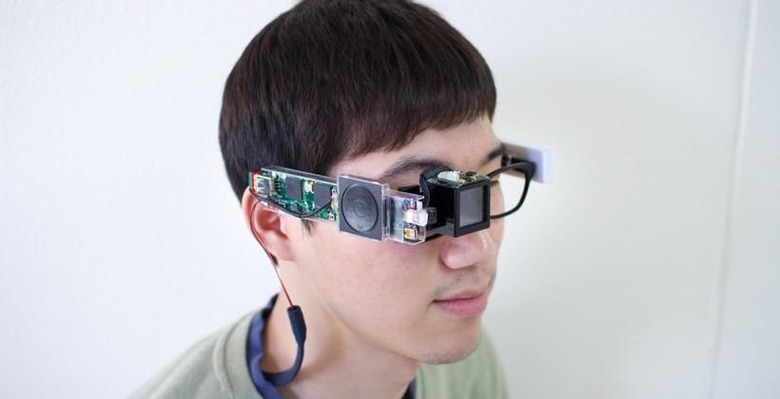

KAIST's K-Glass, in comparison, doesn't have the relatively sleek looks of Google's headset, and in prototype form uses only a monocular display, unlike the binocular display of Epson's Moverio. What it does have, however, is significantly lower power consumption than most other head-mounted displays (HMDs), because it uses targeted hardware rather than generic chips and software, as well as some clever data-discarding techniques to minimize the amount of raw data that needs processing.

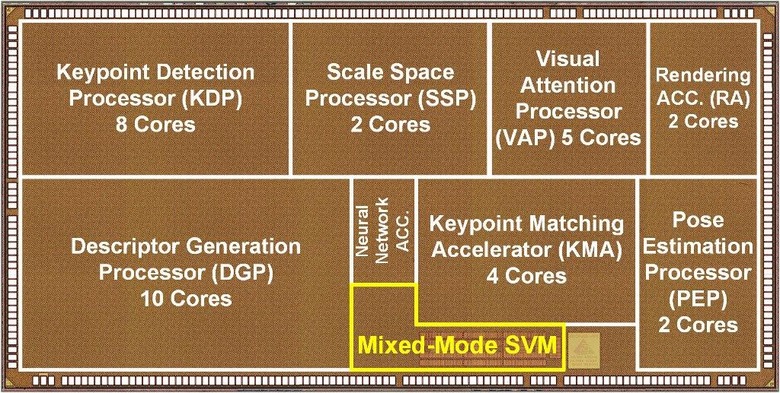

The Korean chip is based on the Visual Attention Model (VAM), which describes how the human brain identifies – either unconsciously or automatically – the most important part of the scene ahead and processes only that. K-Glass' digital version uses a similar strategy, with parallel data processing figuring out whether each segment of the scene is important and surfacing only the most vital for further analysis.
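To make the idea concrete, here is a minimal Python sketch of attention-style filtering: split a frame into tiles, score each tile, and keep only the highest-scoring ones for further processing. This is not KAIST's algorithm. The function names, the tile size, the `keep_fraction` parameter, and the use of intensity variance as a stand-in "importance" score are all assumptions made purely for illustration.

```python
from statistics import pvariance


def tile_scores(frame, tile):
    """Score each tile-by-tile block of a grayscale frame.

    Assumption: intensity variance is used as a crude proxy for
    "importance"; a real visual attention model would be far richer.
    """
    height, width = len(frame), len(frame[0])
    scores = {}
    for top in range(0, height, tile):
        for left in range(0, width, tile):
            pixels = [
                frame[y][x]
                for y in range(top, min(top + tile, height))
                for x in range(left, min(left + tile, width))
            ]
            scores[(top, left)] = pvariance(pixels)
    return scores


def select_salient_tiles(frame, tile=4, keep_fraction=0.25):
    """Return the (top, left) corners of the highest-scoring tiles."""
    scores = tile_scores(frame, tile)
    keep = max(1, round(len(scores) * keep_fraction))
    ranked = sorted(scores, key=scores.get, reverse=True)
    return sorted(ranked[:keep])


# Hypothetical 8x8 frame: flat grey except a checkerboard in the
# bottom-right 4x4 tile, which is the only region with any detail.
frame = [[10] * 8 for _ in range(8)]
for y in range(4, 8):
    for x in range(4, 8):
        frame[y][x] = 200 if (x + y) % 2 == 0 else 10

salient = select_salient_tiles(frame)
```

In this toy frame only one of the four tiles contains any detail, so only that tile would be handed to the expensive recognition stage. In a hardware design like the one described, the per-segment scoring would presumably run in parallel rather than in a loop.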

That way, the 250MHz chipset can analyze 720p HD video at 30fps with 1.57 TOPS/W efficiency, for what KAIST says is a 76 percent improvement over other devices. "Our processor can work for long hours without sacrificing K-Glass' high performance," Professor Hoi-Jun Yoo, team lead, said of the new chip, "an ideal mobile gadget or wearable computer, which users can wear for almost the whole day."

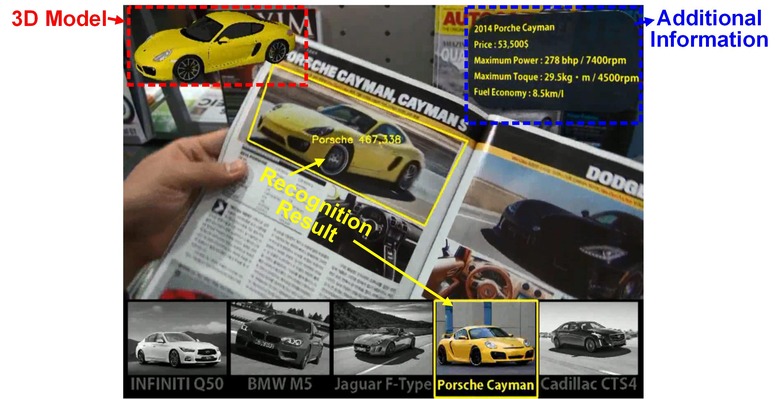

KAIST suggests that the technology could be useful in restaurants, where the most pertinent task might be deciphering and explaining menu items and introducing 3D graphics of sample dishes into the wearer's line of sight. It could also help when reading magazines, pulling out feature content and supplementing it with online context.

The Institute hasn't discussed production plans for the K-Glass chip at this stage, though it would seem likely that any market strategy would involve licensing the technology or producing the AR chip and then selling it to third-party wearable firms, rather than building augmented reality headwear itself.