Google Lens Spreads From Android To iPhone

Google Photos users on iPhone and iPad are getting a new feature: an update to the app adds Google Lens, bringing its visual search and more. Announced at Google I/O 2017 and first released on the Pixel 2 and Pixel 2 XL, Lens has until now been limited to Android devices.

The technology turns the camera into a smart scanner, of sorts. Point Lens at a landmark or scene, such as the Eiffel Tower or Statue of Liberty, and it will try to identify it and pull up background information online. Point it at a business card, meanwhile, and it will attempt to extract the pertinent contact details and then offer to create a new contact entry with them on your phone.

While not perfect, it's definitely useful at times, but until now iPhone and iPad users have been out of luck. That changes with an update to Google Photos, which bakes Lens functionality in for iOS devices. Starting with version 3.15, the app includes a preview of Lens.

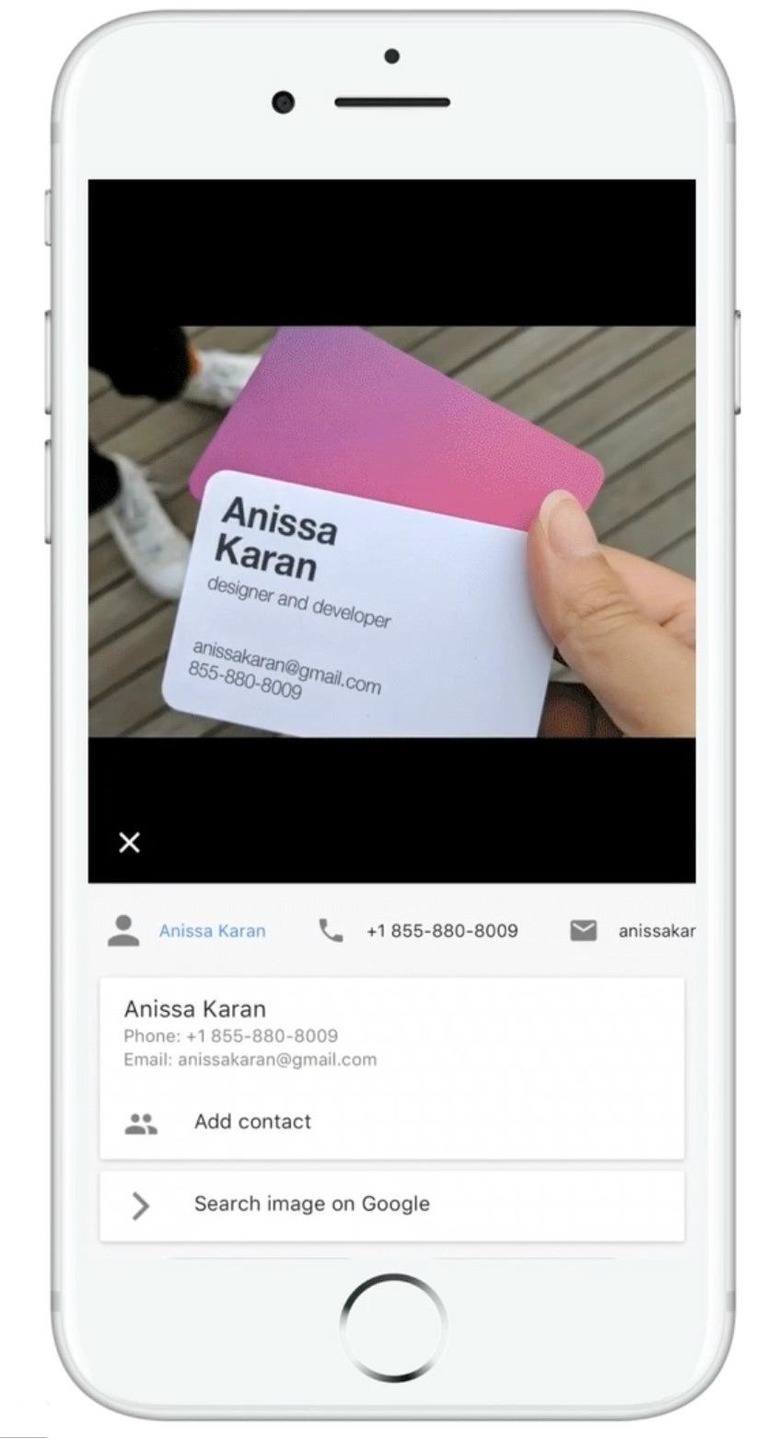

The workflow in Google Photos for iOS is straightforward. Rather than taking a new photo, Lens works from an existing shot in your library. Tap the newly added Google Lens button on a photo, and the system will automatically analyze the image and offer whichever actions it believes are most useful.

For a business card, that means pulling out contact details. Photos of books bring up reviews and other information, while for landmarks and buildings, paintings, and plants or animals, Lens searches the web for background details. Details from flyers or event billboards can be added to your calendar automatically.

If you have Web & App Activity turned on, meanwhile, your Google Account will keep track of your searches. Google Lens activity is saved in that timeline for future recall. However, you can delete individual entries, or indeed turn off activity history altogether.

Lens isn't quite at the stage where we'd consider it a must-have feature, though if you deal with a lot of business cards it could make your contacts workflow much smoother. Nonetheless, as a sign of Google pushing computational photography and paving the way for more AR-style features that bridge real-world objects and locations with data, it's certainly an interesting experiment. If you want to give it a try, Google says it's rolling out for Google Photos users over the next week or so.

Starting today and rolling out over the next week, those of you on iOS can try the preview of Google Lens to quickly take action from a photo or discover more about the world around you. Make sure you have the latest version (3.15) of the app. https://t.co/Ni6MwEh1bu pic.twitter.com/UyIkwAP3i9

— Google Photos (@googlephotos) March 15, 2018