Facebook notifies users about COVID-19 misinformation they shared

Social media has quickly become one of the main ways people get their news and information these days. In some cases, it may even be the only way people learn about what's happening around them. Unfortunately, these tools have also become seedbeds for misinformation, thanks to both the spontaneous and the coordinated spread of fake news. The likes of Twitter and Facebook have made attempts to curb this misuse of their networks, and Facebook's latest move is aimed squarely at stopping the spread of false COVID-19 information.

Facebook already took steps back in April to warn users about COVID-19 misinformation spreading on its network. Not only did it remove misleading posts, it also warned users who had shared them on their feeds. Unfortunately, that strategy proved not only ineffective but also confusing.

The notice would simply appear on users' timelines, often without context. Facebook erred too far on the side of caution by not pointing users to the offending post, since doing so could inadvertently make them more curious about the misinformation. More than half a year later, Facebook realized that approach just wasn't enough.

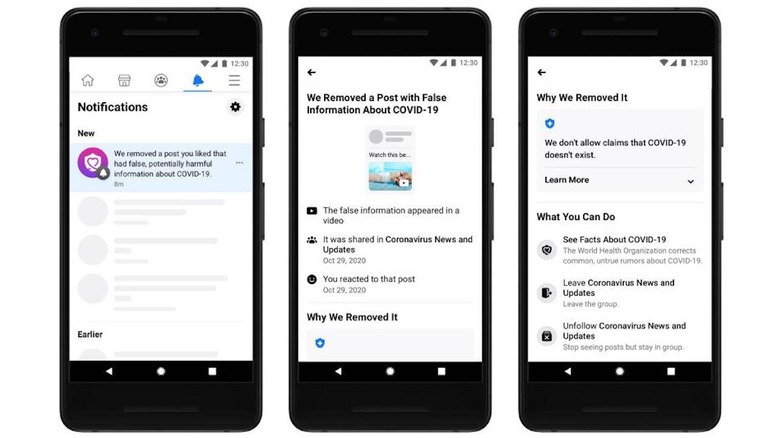

Now it will push a notification to users who shared such misinformation, telling them that a post has been removed precisely because of that violation. Rather than leave it at that, Facebook will also show a thumbnail of the offending post to give context. And to ensure that users are pointed in the right direction, the notification will link them to verified COVID-19 facts.

Unsurprisingly, a few still find Facebook's response lacking. The generic notification, for example, doesn't directly address why the particular misinformation was false or offer the facts that correct it. Some also criticize Facebook for taking too long to take such bold steps, arguing that the changes are too little, too late to make a difference.