Facebook Will Warn Those Who've Seen Coronavirus Fake News

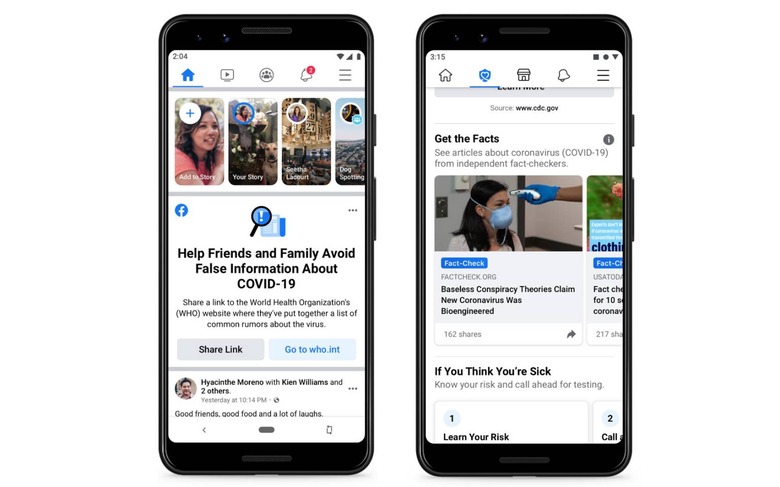

Facebook will notify anybody who interacted with COVID-19 fake news, such as debunked myths and potentially harmful guidance on untested "cures" for the coronavirus. The company said today that in March 2020 it displayed warnings on a whopping 40 million posts related to COVID-19, after independent fact-checkers spotted incorrect information.

Facebook works with more than 60 of those fact-checking organizations, operating across more than 50 languages around the world. That number expanded in March, with eight new checkers and more than a dozen extra countries.

Their role is simple but herculean: spot potentially false news being shared on Facebook, and flag it. Facebook then applies warning labels that add greater context about the topic, and according to the social network, around 95 percent of the time people who see those labels don't go on to view the original shared content. Once a false story has been highlighted in the system for the first time, it can be spotted again as reposts continue.

"To date, we've also removed hundreds of thousands of pieces of misinformation that could lead to imminent physical harm," Facebook said today. "Examples of misinformation we've removed include harmful claims like drinking bleach cures the virus and theories like physical distancing is ineffective in preventing the disease from spreading."

Facebook will now contact the misled

The problem with that system is that it requires fact-checkers to see the incorrect information before regular Facebook users do. If that doesn't happen, there are no warning messages to see.

Now, Facebook says, it plans to show a new message "to people who have liked, reacted or commented on harmful misinformation about COVID-19 that we have since removed." It'll include a link to the World Health Organization (WHO) for further information. Those messages will begin appearing "in the coming weeks," Facebook says.

Seemingly, however, not every piece of debunked news will trigger that notification. Facebook doesn't take down every piece of misinformation its fact-checkers spot, and it appears the alert will only be shown for removed content. If a post has been flagged with warnings but left up, it's unclear whether that will prompt an alert.

"If a piece of content contains harmful misinformation that could lead to imminent physical harm, then we'll take it down," Facebook CEO Mark Zuckerberg explained today. "For other misinformation, once it is rated false by fact-checkers, we reduce its distribution, apply warning labels with more context and find duplicates."

"We will also soon begin showing messages in News Feed to people who previously engaged with harmful misinformation related to Covid-19 that we've since removed, connecting them with accurate information," Zuckerberg continued.

Fake products, and false guidance on how to avoid or treat the coronavirus, have become commonplace amid the pandemic. Earlier this month, the FDA issued guidance on avoiding scam products that promised to cure the infection; the agency has also been delivering take-down demands to companies peddling such fake treatments.