Beetle Camera Research Shows Turning Tiny Head Better Than Whole Body

Researchers from the University of Washington have revealed a new "wireless steerable vision" system for live insects and teeny tiny robots. The system was built to investigate insect vision, and to study the trade-offs insects make between having small retinal regions and moving those regions independently of the body through head motion.

By building a new camera system and a tiny robot to carry it, the researchers aimed to better understand the trade-offs made by insect vision systems in nature. Through that understanding, engineers will be able to design "better vision systems for insect-scale robotics in a way that balances energy, computation, and mass."

These researchers created a vision system that was:

• Fully wireless

• Power-autonomous

• Mechanically steerable

• Able to imitate head motion

• Small enough to fit their needs

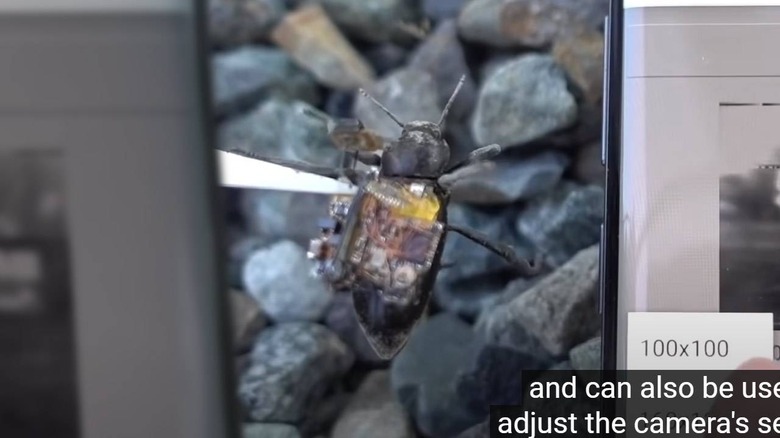

This camera system needed to be small enough to "mount on the back of a live beetle" or a "similarly sized terrestrial robot."

Camera system specs:

• 248 milligrams total

• Turns 60 degrees

• Controlled by nearby smartphone

• Bluetooth control and data transfer from up to 120 meters away

• Video: 160 x 120 pixel resolution, monochrome, 1 to 5 frames per second

• Onboard accelerometer

• 10mAh battery

• Up to 6 hours of activity
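To put the video spec in perspective, here is a minimal sketch of the raw, uncompressed data rate implied by 160 x 120 monochrome frames at 1 to 5 frames per second. The 8 bits per pixel figure is an assumption for illustration only; the article does not state the camera's bit depth or whether frames are compressed before transmission.

```python
# Rough raw data rate for the beetle camera's video stream.
# Resolution and frame rates come from the spec list above.
# BITS_PER_PIXEL is an assumption (not stated in the article).
WIDTH = 160
HEIGHT = 120
BITS_PER_PIXEL = 8  # assumed bit depth for monochrome frames


def raw_bitrate_bps(fps, bits_per_pixel=BITS_PER_PIXEL):
    """Return the uncompressed video bitrate in bits per second."""
    return WIDTH * HEIGHT * bits_per_pixel * fps


if __name__ == "__main__":
    for fps in (1, 5):
        kbps = raw_bitrate_bps(fps) / 1000
        print(f"{fps} fps: {kbps:.1f} kbit/s uncompressed")
```

Under that assumption, the stream stays well under 1 Mbit/s even at the top frame rate, which is the kind of load a low-power radio link can plausibly carry.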

With the onboard accelerometer, the beetle camera captures media only when the system detects that the beetle is in motion. Because the camera only activates when motion triggers it, the system is able to run on a 10 mAh battery for up to 6 hours.
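The battery figures imply a very small power budget. As a back-of-the-envelope sketch, dividing capacity by runtime gives the average current the system can draw. This assumes the full rated 10 mAh is usable and ignores real-world effects such as voltage sag; the function name is hypothetical and not from the paper.

```python
def average_current_ma(capacity_mah, runtime_hours):
    """Average current (mA) that drains capacity_mah in runtime_hours."""
    if runtime_hours <= 0:
        raise ValueError("runtime_hours must be positive")
    return capacity_mah / runtime_hours


if __name__ == "__main__":
    # Figures from the article: 10 mAh battery, up to 6 hours of activity.
    avg = average_current_ma(10, 6)
    print(f"Average draw over 6 hours: {avg:.2f} mA")
```

An average draw of roughly 1.7 mA leaves little room for continuous video capture and radio transmission, which helps explain why the camera is gated on motion.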

The group also created a tiny robot that's able to take the place of the beetle – more or less. This little beetle robot was used as a testing ground for the camera alongside actual living beetles.

Beetle robot (terrestrial robot)

• 1.6 centimeters x 2 centimeters in size

• Speed up to 3.5 centimeters per second

• Vision support

• Between 63 and 260 minutes of activity

The most basic finding of the research was that steerable vision can enable object tracking and wide-angle views "for 26 to 84 times lower energy than moving the whole robot." It's easier to move your head than it is to move your whole body – and here it's true to a high degree!

You can learn more by checking out the research paper associated with this news, "Wireless steerable vision for live insects and insect-scale robots," as hosted by the research publication Science Mag (with AAAS, Science Robotics). This paper was authored by Vikram Iyer, Ali Najafi, Johannes James, Sawyer Fuller, and Shyamnath Gollakota.

Vikram Iyer hails from the Paul G. Allen School of Computer Science and Engineering, University of Washington, Seattle, as well as the Department of Electrical and Computer Engineering at the same institution. Ali Najafi also hails from the Department of Electrical and Computer Engineering at the University of Washington, Seattle, USA. Iyer and Najafi are equal and primary contributors to this research.