Apple Delays Controversial Child Safety Scanning System

Apple is delaying the launch of its controversial child safety features, postponing the system which would scan iCloud Photos uploads and iMessage chats for signs of illegal sexual content or grooming. Announced last month, the system would use a third-party database of Child Sexual Abuse Material (CSAM) to look for signs of illegal photos uploaded to the cloud, but it met with instant pushback from privacy advocates.

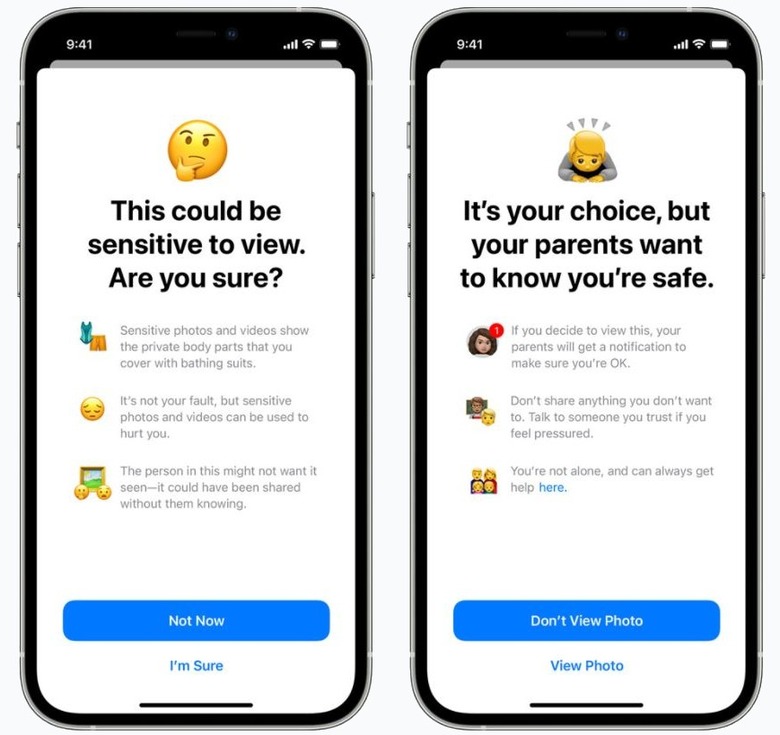

Adding to the confusion, Apple actually announced two systems at the same time, and their functionality was conflated in many places. On the one hand, iMessage would use image recognition to flag potentially explicit pictures being shared in conversations with young users. Should such a picture be shared, it would be automatically censored and, optionally for younger users, parents would be notified about the content.

At the same time, the second system would be scanning for CSAM. Only images uploaded to Apple's iCloud Photos service would be monitored, using picture fingerprints generated from a database of such illegal content compiled by expert agencies. If a number of such images were spotted, Apple would report the user to the authorities.

Anticipating privacy and safety concerns, Apple had baked in a number of provisos. The scanning would take place on-device, rather than remotely, the company pointed out, and the fingerprints against which images would be compared would contain no actual illegal content themselves. Even if uploads were flagged, there'd be a human review before any report was made.
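To make the general idea concrete, here is a minimal sketch of threshold-based fingerprint matching as described above. It is an illustration only, not Apple's implementation: the function names and the `threshold` parameter are hypothetical, and a plain SHA-256 digest stands in for the image fingerprint. A cryptographic hash only matches byte-identical files, whereas a real system would need a fingerprint that tolerates resizing and re-encoding.

```python
import hashlib


def fingerprint(image_bytes: bytes) -> str:
    # Stand-in fingerprint (assumption): SHA-256 of the raw bytes.
    # This matches only byte-identical files, unlike a perceptual fingerprint.
    return hashlib.sha256(image_bytes).hexdigest()


def count_matches(uploads: list[bytes], known_fingerprints: set[str]) -> int:
    # Compare each upload's fingerprint against the known set.
    # The set holds fingerprints only, never the images themselves.
    return sum(1 for data in uploads if fingerprint(data) in known_fingerprints)


def needs_human_review(
    uploads: list[bytes], known_fingerprints: set[str], threshold: int
) -> bool:
    # Flag the account for human review only once the number of matches
    # reaches the (hypothetical) threshold; a single match is not enough.
    return count_matches(uploads, known_fingerprints) >= threshold


# Example with placeholder byte strings standing in for image files.
known = {fingerprint(b"known-1"), fingerprint(b"known-2")}
uploads = [b"known-1", b"holiday-photo", b"known-2"]
flagged = needs_human_review(uploads, known, threshold=2)
```

The point of the threshold is that no single match triggers anything on its own, and even a flagged account goes to a human reviewer rather than straight to a report.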

Nonetheless, opponents of the plan were vocal. Apple's system was a slippery slope, they warned, and the Cupertino firm, despite its protestations otherwise, would undoubtedly face pressure from law enforcement and governments to expand the list of media that users' accounts would be monitored for. Young people could also be placed at risk, it was pointed out, and their own right to privacy compromised, if Apple inadvertently outed them as LGBTQ to parents through its iMessage scanning system.

Even Apple execs conceded that the announcement hadn't been handled with quite the deft touch that it probably required. Unofficial word from within the company had suggested the teams there were taken aback by the extent of the negative reaction, and how long it persisted. Now, Apple has confirmed that it will no longer launch the new systems alongside iOS 15, iPadOS 15, watchOS 8, and macOS Monterey later this year.

"Last month we announced plans for features intended to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material," Apple said in a statement today. "Based on feedback from customers, advocacy groups, researchers and others, we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features."

To be clear, this isn't a cancellation of the new CSAM systems altogether, only a delay. All the same, it's likely to be seen as a win for privacy advocates, who, while acknowledging the need to protect children from predatory behaviors, questioned whether widespread scanning was the best way to do that.