Apple CSAM Detection Failsafe System Explained

Today Apple released a security threat model review of its new child safety features. The document lays out the system's layers of security and the pathways through which each part of it works. The release is part of a series of clarifications Apple has offered since announcing the features, and since the inevitable controversy that followed.

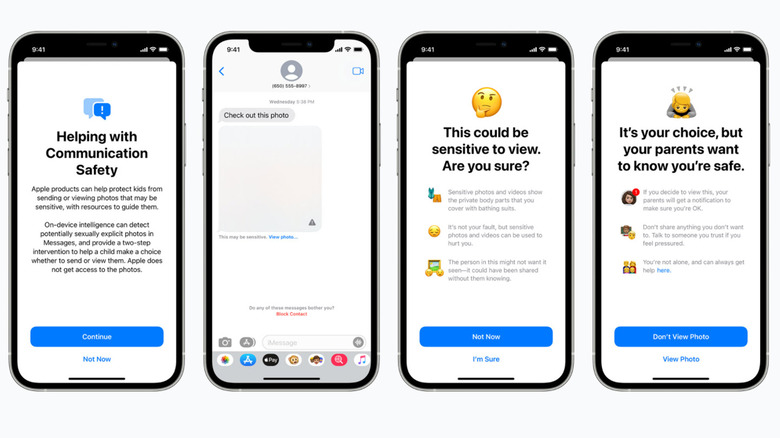

There are two parts to this system of expanded protections for children: one involving Family Sharing accounts and Messages, the other dealing with iCloud Photos. The Messages system requires that a parent or guardian account activate the feature in order for it to work. This is an opt-in system.

Family Sharing with Messages

With Family Sharing, a parent or guardian account can opt in to a feature that detects sexually explicit images. The system uses only an on-device machine learning classifier in the Messages app to check photos sent to and from a given child's device.

This feature does not share data with Apple. Specifically, "Apple gains no knowledge about the communications of any users with this feature enabled, and gains no knowledge of child actions or parental notifications."

The child's device analyzes photos sent to or from it in Apple's Messages app, and the analysis is done entirely on-device (offline). If a sexually explicit photo is detected, it will not be visible to the child. The child can still choose to view the image, and at that point the parent account will be notified.

If the child confirms they want to see the image, it will be preserved on the child's device until the parent can review its content. The photo is saved by the safety feature and cannot be deleted by the child without the parent's consent (via parental access to the physical device).
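The Messages flow above amounts to a small decision process that runs entirely on the child's device. The Python sketch below models it purely for illustration. `ImageOutcome`, `handle_image`, and both callbacks are hypothetical names. `is_explicit` stands in for Apple's on-device classifier, which is not publicly available. The model shows that every outcome is decided locally, and that the parent is notified only if the child chooses to view a flagged image.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ImageOutcome:
    """What happens to one image in a child's Messages thread (hypothetical model)."""
    image_id: str
    hidden: bool = False
    parent_notified: bool = False
    preserved_for_parent: bool = False


def handle_image(
    image_id: str,
    is_explicit: Callable[[str], bool],
    child_chooses_to_view: Callable[[str], bool],
) -> ImageOutcome:
    """Everything here runs on the child's device; nothing is sent to Apple."""
    outcome = ImageOutcome(image_id)
    if not is_explicit(image_id):
        return outcome  # ordinary image: shown as usual, no notification
    outcome.hidden = True  # flagged image is not shown by default
    if child_chooses_to_view(image_id):
        outcome.hidden = False
        outcome.parent_notified = True  # the parent account is told
        outcome.preserved_for_parent = True  # kept on the device for the parent to review
    return outcome


if __name__ == "__main__":
    # Hypothetical stand-in classifier: anything whose ID starts with "flagged".
    flagged = lambda image_id: image_id.startswith("flagged")
    print(handle_image("flagged-1", flagged, lambda _: True))
    print(handle_image("flagged-2", flagged, lambda _: False))
    print(handle_image("holiday-1", flagged, lambda _: True))
```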

CSAM detection with iCloud

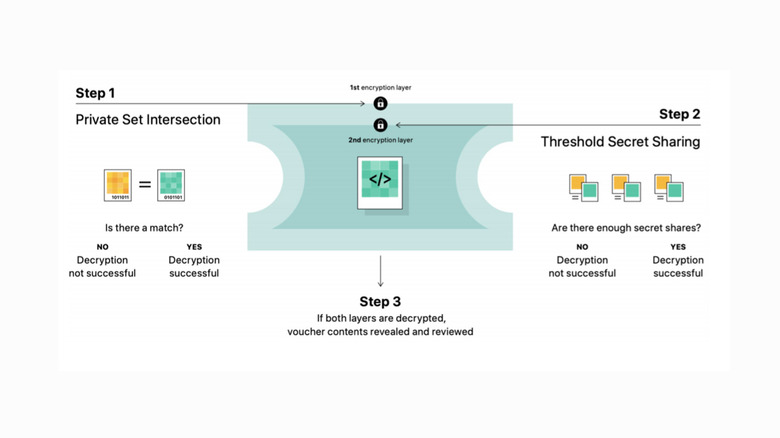

The second feature works specifically with images uploaded to iCloud Photos libraries. CSAM detection looks for known CSAM images, and if enough of them are detected, data is sent to Apple for human verification. If Apple's human reviewers confirm that CSAM is present, the offending account will be shut down and the proper legal authorities will be contacted.

In the first part of this process, Apple detects CSAM imagery by checking against databases of hashes of known CSAM. Child safety organizations build these databases by deriving hashes from known CSAM images.

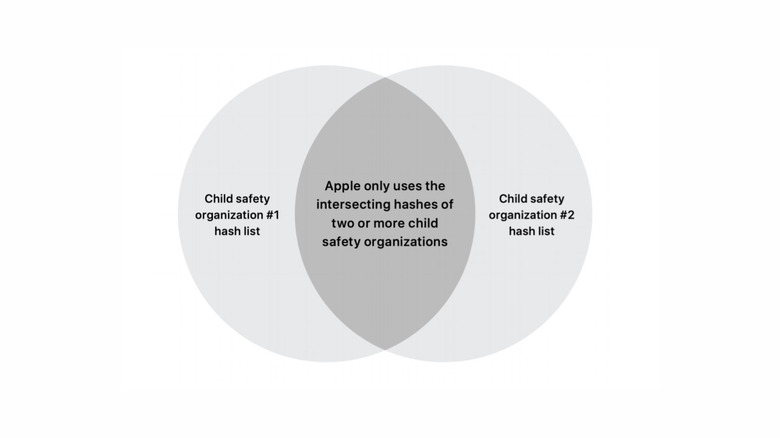

Apple explained this afternoon that the system uses only hashes that appear in the lists of two or more child safety organizations. This means no single child safety organization can add a hash on its own that might trigger the process for non-CSAM material.

Apple also discards any perceptual hashes that appear in multiple organizations' hash lists within a single sovereign jurisdiction but in no others. That way, no single country can coerce several organizations into including hashes of non-CSAM material (for example, photos of antigovernment symbols or activities).
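Taken together, the two hash-list rules work as a single filter: a hash is kept only if organizations in at least two different jurisdictions list it. The Python sketch below implements that reading. It is not Apple's code. The organization names, the jurisdictions, and the string "hashes" are hypothetical placeholders, and the real lists hold perceptual hash values rather than readable strings.

```python
from collections import defaultdict


def eligible_hashes(
    org_hashes: dict[str, set[str]],
    org_jurisdiction: dict[str, str],
) -> set[str]:
    """Keep only hashes supplied by organizations in at least two jurisdictions.

    Requiring two distinct jurisdictions implies at least two organizations,
    and it drops hashes that only organizations in one country agree on.
    """
    jurisdictions = defaultdict(set)  # hash -> jurisdictions whose organizations list it
    for org, hashes in org_hashes.items():
        for h in hashes:
            jurisdictions[h].add(org_jurisdiction[org])
    return {h for h, places in jurisdictions.items() if len(places) >= 2}


if __name__ == "__main__":
    # Hypothetical organizations and jurisdictions.
    org_hashes = {
        "org_a": {"h1", "h2", "h3"},
        "org_b": {"h1"},
        "org_c": {"h2"},
    }
    org_jurisdiction = {"org_a": "country_x", "org_b": "country_y", "org_c": "country_x"}
    print(eligible_hashes(org_hashes, org_jurisdiction))  # {'h1'}
```

Here `h2` is listed by two organizations, but both sit in the same country, so it is dropped. `h3` has only one source, so it is dropped as well.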

Apple says the system is designed so that the chance of incorrectly flagging a given account is one in one trillion.

Even at this point, Apple has no access to the data its system is analyzing. The system shares data with Apple for further review only once a single account reaches a threshold of 30 flagged images.
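A threshold like 30 pushes the account-level error rate very low. To see why, treat each photo as an independent chance of a false match and ask how likely an account is to collect 30 or more of them. The Python sketch below computes that binomial tail probability. This is not Apple's calculation. The per-image false match rate (one in a million) and the photo count (100,000 a year) are hypothetical inputs chosen for illustration, and real false matches may not be independent.

```python
import math


def prob_at_least(n: int, p: float, t: int) -> float:
    """P(X >= t) for X ~ Binomial(n, p): the chance of t or more false matches.

    Terms are computed in log space so that a large n doesn't overflow.
    """
    if not 0.0 < p < 1.0:
        raise ValueError("p must be strictly between 0 and 1")
    if t <= 0:
        return 1.0
    if t > n:
        return 0.0
    log_p, log_q = math.log(p), math.log1p(-p)
    log_n_fact = math.lgamma(n + 1)
    total = 0.0
    for k in range(t, n + 1):
        log_term = (log_n_fact - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                    + k * log_p + (n - k) * log_q)
        term = math.exp(log_term)
        total += term
        # Past the mean n*p each term is smaller than the last, so stop
        # once new terms can no longer change the sum.
        if k > n * p and term < total * 1e-17:
            break
    return total


if __name__ == "__main__":
    # Hypothetical inputs, not Apple's figures.
    photos_per_year = 100_000
    per_image_false_match = 1e-6
    for threshold in (1, 10, 30):
        print(threshold, prob_at_least(photos_per_year, per_image_false_match, threshold))
```

With these assumed inputs, a threshold of 1 would flag roughly one innocent account in ten. A threshold of 30 drives the estimate many orders of magnitude below one in a trillion.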

But before any human sees that data, a second, independent perceptual hash double-checks the 30+ flagged images. If this second check confirms the matches, the data is shared with Apple's human reviewers for final confirmation.
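The sketch below shows, in Python, the order of the two gates: the match threshold comes first, then the second perceptual hash. It is a simplified, hypothetical model. `images_for_human_review` and `secondary_check` are illustrative names, and passing reviewers only the images the second hash also matches is an assumption. In Apple's actual design the threshold is enforced cryptographically with threshold secret sharing, so Apple cannot read match data below it. A plain `if` statement only mimics that behavior.

```python
from typing import Callable, Iterable

MATCH_THRESHOLD = 30  # the threshold figure Apple cited


def images_for_human_review(
    primary_matches: Iterable[str],
    secondary_check: Callable[[str], bool],
    threshold: int = MATCH_THRESHOLD,
) -> list[str]:
    """Return the image IDs that would reach human reviewers, or [] if none would."""
    flagged = list(primary_matches)  # images that matched the first perceptual hash
    if len(flagged) < threshold:
        return []  # below the threshold, nothing is shared with Apple
    # Second, independent perceptual hash (hypothetical callback): only images
    # it also matches are passed on, which is an assumption of this sketch.
    return [image_id for image_id in flagged if secondary_check(image_id)]


if __name__ == "__main__":
    ids = [f"img{i}" for i in range(30)]
    print(len(images_for_human_review(ids[:29], lambda _: True)))  # 0
    print(len(images_for_human_review(ids, lambda _: True)))  # 30
    print(images_for_human_review(ids, lambda _: False))  # []
```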

Per documentation released by Apple today, "Apple will refuse all requests to add non-CSAM images to the perceptual CSAM hash database," AND "Apple will also refuse all requests to instruct human reviewers to file reports for anything other than CSAM materials for accounts that exceed the match threshold."

If a human reviewer at Apple confirms that an account contains CSAM, Apple will report it to the proper authorities. In the United States, that report goes to the National Center for Missing and Exploited Children (NCMEC).

Stay aware, in any case

According to Apple's documentation of the iCloud Photos part of this child safety system, perceptual checks happen only in the pipeline for images uploaded to iCloud Photos. Apple confirmed that this system "cannot act on any other image content on the device." The documentation also confirms that "on devices and accounts where iCloud Photos is disabled, absolutely no images are perceptually hashed."


The Messages check also stays local to the device. It runs on the child's own hardware, not on any server at Apple. No information from these checks is shared with anyone other than the child and the parent, and even then, the parent must physically access the child's device to see the potentially offending material.

Regardless of the level of security Apple outlines, and the promises it makes about what will be scanned and reported (and to whom), you're right to want to know everything there is to know about this situation. Any time a company uses a system that scans user-generated content, for any reason, it's worth understanding how and why.

Given what we understand about this system so far, there is good news, depending on your view. If you want to avoid having Apple check your photos at all, it seems likely you'll be able to, provided you're willing to avoid iCloud Photos and you're not using Messages to send photos to children whose parents have turned on the feature through Family Sharing.

If you're hoping this will help Apple stop predators who share CSAM, it seems possible that COULD happen. It will likely catch only offenders who, for whatever reason, don't know how to turn off iCloud Photos... but still. It could be a major step in the right direction.