Microsoft-Backed AI Learns How To Play Minecraft By Watching Online Videos

Machines capable of rapidly learning functions and interacting with interfaces humans usually use, like keyboards, are taking over the world. Luckily for us, this particular world is virtual. An AI developed by a Microsoft-backed organization has learned how to play "Minecraft" after spending some time studying videos on YouTube.

OpenAI, which developed the bot, is a non-profit research and development company with backers that include Microsoft, Reid Hoffman's charitable foundation, and Khosla Ventures. Elon Musk, a man who has publicly stated his fears about AI and how much of a threat it is to humanity, was one of the company's founders. Musk was heavily involved in the research organization early on and, along with the other founding board members, made a "substantial contribution" to its funding.

One of the non-profit's more famous recent projects is DALL-E, a machine learning-based tool that turns words into art. That particular project is not yet accessible to the general public, but DALL-E Mini, an unaffiliated open-source tool inspired by it, is. The tool isn't exactly perfect, which has only increased its popularity: the internet loves things like messed-up images based on strange concepts.

How was the AI taught to play?

During OpenAI's Video PreTraining (VPT) study, the AI was trained on thousands of hours of "Minecraft" gameplay footage. Most of this footage was unlabeled, so the program had to independently pull useful information from the videos. To make that possible, the team first trained something called an inverse dynamics model (IDM) on around 2,000 hours of video that carried labels for actions like mouse clicks and key presses. Rather than relying only on what has already happened, the IDM uses "past and future information to guess the action at each step." The trained IDM then labeled around 70,000 hours of unlabeled footage with mouse and keyboard actions.
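The labeling trick is easier to see in miniature. The sketch below is a toy illustration, not OpenAI's code: the "frame" is just a position on a number line, the action names are made up for the example, and the "model" is a simple lookup table learned by counting. What it does share with the IDM idea is that it looks at the frame after an action as well as the frame before it, learns from a small labeled set, and then fills in the missing actions on unlabeled footage.

```python
from collections import Counter, defaultdict

# Toy world: a "frame" is an integer position on a line.
# Action names are hypothetical, not the real Minecraft action space.
ACTIONS = {"left": -1, "stay": 0, "right": 1}


def rollout(start, actions):
    """Return the frames produced by applying each action in turn."""
    frames = [start]
    for action in actions:
        frames.append(frames[-1] + ACTIONS[action])
    return frames


def train_idm(labeled_clips):
    """Learn which action explains the change between two frames.

    labeled_clips is a list of (frames, actions) pairs: the small labeled set.
    """
    votes = defaultdict(Counter)
    for frames, actions in labeled_clips:
        for t, action in enumerate(actions):
            delta = frames[t + 1] - frames[t]  # looks at the *future* frame
            votes[delta][action] += 1
    return {delta: c.most_common(1)[0][0] for delta, c in votes.items()}


def pseudo_label(idm, frames, default="stay"):
    """Guess the action taken at each step of an unlabeled clip."""
    return [
        idm.get(frames[t + 1] - frames[t], default)
        for t in range(len(frames) - 1)
    ]


# Small labeled set (stand-in for the roughly 2,000 labeled hours).
demo_a = ["right", "right", "stay"]
demo_b = ["left", "stay", "left"]
labeled = [(rollout(0, demo_a), demo_a), (rollout(5, demo_b), demo_b)]
idm = train_idm(labeled)

# Unlabeled clip (stand-in for the roughly 70,000 hours of online video).
unlabeled_frames = [3, 4, 4, 3, 2, 3]
recovered_actions = pseudo_label(idm, unlabeled_frames)
print(recovered_actions)
```

In the real study the frames are video and the model is a neural network, but the payoff is the same: a small amount of labeled play unlocks a much larger pile of unlabeled video.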

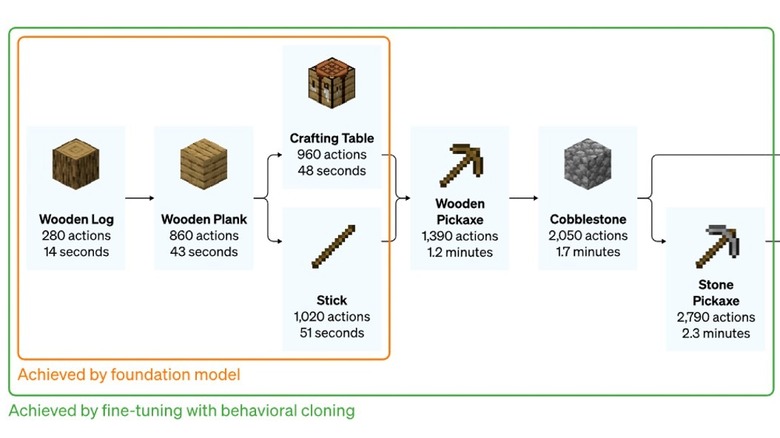

A process of refinement, fine-tuning, and "behavioral cloning" then got the AI to a point where it could independently create a stone pickaxe. However, a key element of "Minecraft" is exploration, so how do you code for randomness and spontaneity? Instead of trying to code randomness, the team set the model the task of creating a diamond pickaxe, which requires diamonds. These diamonds are randomly placed at locations deep within the game's map, so to find a key component required for the task, the bot had to go exploring by default. It was essentially replicating the behavior of players it had seen creating diamond pickaxes.
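"Behavioral cloning" sounds exotic, but at heart it means copying what demonstrators did in the same situation. Here is a minimal sketch of that idea, assuming a made-up set of observations and actions (none of these names come from OpenAI's work) and using a majority vote in place of a neural network.

```python
from collections import Counter, defaultdict


def clone_policy(demonstrations):
    """Fit a policy by majority vote over (observation, action) pairs."""
    votes = defaultdict(Counter)
    for observation, action in demonstrations:
        votes[observation][action] += 1
    return {obs: c.most_common(1)[0][0] for obs, c in votes.items()}


def act(policy, observation, default="wander"):
    """Pick the cloned action, falling back to a default for unseen cases."""
    return policy.get(observation, default)


# Hypothetical demonstrations: what the player holds -> what they did next.
demos = [
    ("nothing", "chop_tree"),
    ("nothing", "chop_tree"),
    ("nothing", "wander"),
    ("logs", "craft_planks"),
    ("planks", "craft_table"),
]
policy = clone_policy(demos)

plan = [act(policy, obs) for obs in ["nothing", "logs", "planks", "diamonds"]]
print(plan)
```

Note what happens with "diamonds": the toy policy has never seen that situation, so it can only fall back on a default. That limitation is one reason a cloned model typically needs further fine-tuning before it can handle harder goals.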

How good is an AI bot at Minecraft?

OpenAI says that its bot "accomplishes tasks in 'Minecraft' that are nearly impossible to achieve with reinforcement learning from scratch." This includes crafting a diamond pickaxe, a task that takes a good human player around 20 minutes on average and something the AI achieved in around 2.5% of the 10-minute episodes it took part in. Early on, the training and refinement created an AI that could "chop down trees to collect logs, craft those logs into planks, and then craft those planks into a crafting table," according to OpenAI. This is one of the game's more basic sets of actions and takes a player with a basic grasp of the game less than a minute.
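To put that 2.5% figure in perspective, here is a back-of-the-envelope calculation. It assumes every episode is an independent attempt with the same chance of success, which is a simplification made for this example rather than anything OpenAI has stated.

```python
# Figures quoted above; the independence assumption is ours, not OpenAI's.
success_rate = 0.025      # share of episodes that end with a diamond pickaxe
episode_minutes = 10      # length of one episode

# Average number of attempts until the first success (geometric distribution).
expected_episodes = 1 / success_rate
expected_minutes = expected_episodes * episode_minutes


def chance_of_at_least_one(episodes):
    """Probability of one or more successes across the given episodes."""
    return 1 - (1 - success_rate) ** episodes


print(f"Average episodes until first success: {expected_episodes:.0f}")
print(f"Average playing time until first success: {expected_minutes:.0f} minutes")
print(f"Chance of a pickaxe within 40 episodes: {chance_of_at_least_one(40):.0%}")
```

Under those assumptions, the bot would need about 40 episodes on average, or several hours of play, to produce one diamond pickaxe, and even after 40 tries there is only about a 64% chance it has managed one.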

The AI also learned and demonstrated other tasks that were not directly related to an objective such as crafting a diamond pickaxe. These included swimming, hunting animals for food, and eating that food. More impressively, OpenAI's bot picked up the concept of pillar jumping, a skill "Minecraft" players use often; it involves gaining elevation by jumping and placing a block underneath your character before it lands.

While this bot is not truly independent, it seems to demonstrate the ability to take actions based on an outcome it wishes to achieve. This ability to think on the fly is a major leap forward and could open up some pretty major possibilities — both good and bad.

What does this mean beyond Minecraft?

You might think unleashing an AI that is capable of identifying a task, learning how to perform that task, and then carrying out that task is essentially how the plotline of movies like "The Matrix" and "Terminator" ended up playing out ... and you would be correct. But this isn't about bringing an end to sentient life on Earth or dominating block-based video games — in fact, it could actually benefit humanity massively. "Minecraft" itself isn't a simple game. Accomplishing tasks like creating a diamond pickaxe requires players to get comfortable with a variety of different skill sets.

Machines capable of learning independently, carrying out tasks, and making connections on their own could impact every area of our lives. We could advance our knowledge further than ever before, and even rediscover things we forgot millennia ago. Artificial intelligence — and especially neural networks — are already playing a huge role in the business, research, and technology sectors, and that role is only going to get bigger as the machine learning process improves. In terms of what machine learning could contribute to research, imagine having a bunch of PhD-level scientists who never tire and are intent on working 24 hours a day.

However, many top scientists and key figures in the world of technology have warned of the dangers that AI could pose. OpenAI co-founder Elon Musk even described the concept as "potentially more dangerous than nukes." Earlier this year, the AI research lab's top scientist, Ilya Sutskever, warned that the larger neural networks being used at the moment may be developing a kind of consciousness, which might be bad news.

You could win money by helping train the 'Minecraft' AI

The whole project is open source, and a prize pool has been put together — so you could potentially win a significant amount of money and play your part in potentially helping the machines rise up at the same time. The OpenAI team needs help tuning their model to perform particular tasks and the challenge centers around designing a reward function to help encourage the AI. It is hoped the rewards competitors come up with will help make the string of ones and zeroes more eager to carry out tasks in the game. Participants can also encourage the AI to create better work by designing it to respond to and learn from human feedback.
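What might a reward function look like? The sketch below is purely illustrative: the item names and point values are invented for this example and do not come from the competition rules or any official interface. It pays the AI once for each milestone item that shows up in its inventory for the first time, with bigger payouts for harder milestones.

```python
# Hypothetical milestones and point values, chosen only for illustration.
MILESTONE_REWARDS = {
    "log": 1.0,
    "planks": 2.0,
    "crafting_table": 4.0,
    "stone_pickaxe": 16.0,
    "diamond_pickaxe": 1024.0,
}


def reward(previous_inventory, current_inventory):
    """Pay out once for each milestone item that appears for the first time."""
    total = 0.0
    for item, value in MILESTONE_REWARDS.items():
        had = previous_inventory.get(item, 0) > 0
        has = current_inventory.get(item, 0) > 0
        if has and not had:
            total += value
    return total


# First log collected: small reward.
print(reward({}, {"log": 3}))
# More logs, nothing new: no reward, so hoarding is not encouraged.
print(reward({"log": 3}, {"log": 5}))
# Planks and a crafting table appear in the same step: both rewards add up.
print(reward({"log": 1}, {"log": 1, "planks": 4, "crafting_table": 1}))
```

Paying only for first-time milestones is one design choice among many. It nudges the AI toward progress rather than repetition, but a real entry would have to decide how to value exploration and what to do when items are lost.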

During judging, a 50-contestant shortlist will be assembled, then whittled down to 10 before the final winners are announced. The guaranteed prize pool of $20,000 will be split into a number of prizes and awarded to various competitors. A conditional prize pool of $50,000 to $100,000 is also available. The conditional money will go to an individual or individuals who create a solution "that reaches a considerable milestone during this competition," according to OpenAI. The window for submissions opens in July and closes in October 2022 — with the winner(s) being announced in November. A live online ranking board will also be displayed during the contest, but organizers say that may not reflect the final score and that it is only there to provide immediate feedback.