NVIDIA Is Mapping Earth's Digital Twin And Your Car Could Help

Self-driving and semi-autonomous cars need to know where they are, and NVIDIA is taking on that problem with NVIDIA DRIVE Map, its attempt to map the world's roads. The chip-maker has announced a new mapping fleet, with which it plans to survey 500,000 kilometers (310,000 miles) of road worldwide by 2024, all in the name of creating an "Earth-scale digital twin" at centimeter-level accuracy. It'll be built using the DeepMap survey mapping technology that NVIDIA acquired last year. DRIVE Map will combine three localization layers (camera, LIDAR, and radar), each containing the details that different parts of a Level 3 semi-autonomous or Level 4 autonomous vehicle might require.

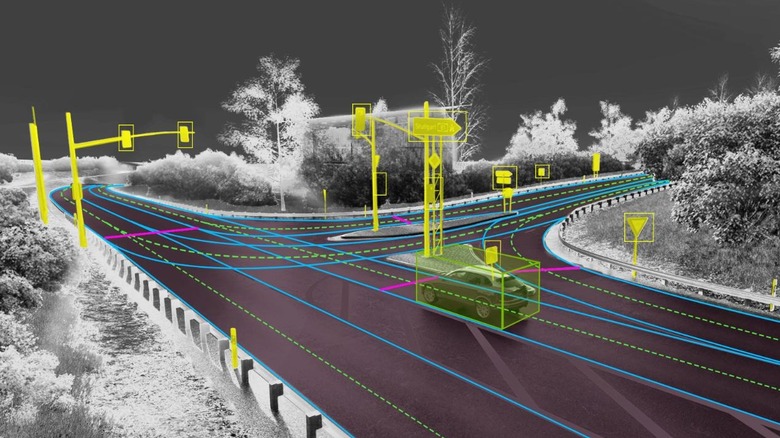

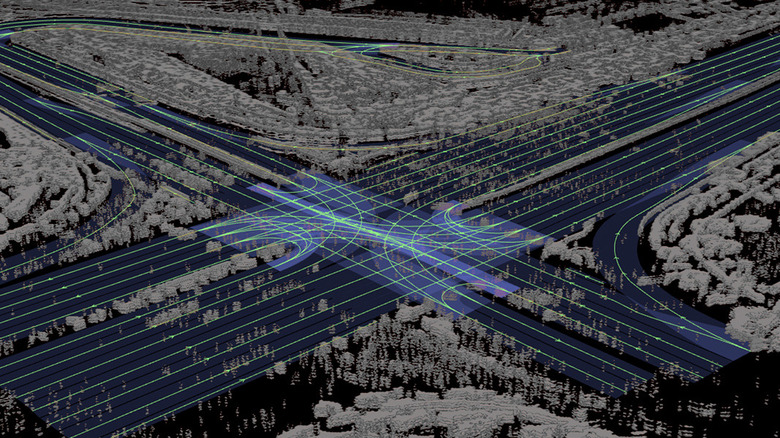

The camera layer, for example, would include details like road markings and lane dividers, together with the boundaries of the road. It would also include street furniture such as traffic signals, signs, and poles. The radar layer, meanwhile, would be an aggregate point cloud of radar returns. That has the advantage of being unaffected by low-light conditions and inclement weather. Finally, the LIDAR layer would be the most precise representation of the digital Earth twin. It will be a 3D model of the environment at 5 centimeter (2 inch) resolution, allowing autonomous car systems to position themselves more accurately within it.
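To make the three layers concrete, here is a rough sketch of how such a map tile might be modeled. NVIDIA has not published DRIVE Map's data format in this announcement, so every class and field name below is hypothetical; the only figure taken from the article is the 5 centimeter LIDAR resolution, which the sketch uses to snap points into grid cells.

```python
import math
from dataclasses import dataclass, field

# Hypothetical model of one map tile; none of these names come from NVIDIA.

@dataclass
class CameraLayer:
    # Semantic features a camera can recognize.
    road_markings: list = field(default_factory=list)
    lane_dividers: list = field(default_factory=list)
    road_boundaries: list = field(default_factory=list)
    street_furniture: list = field(default_factory=list)  # signals, signs, poles

@dataclass
class RadarLayer:
    # Aggregate point cloud of radar returns as (x, y, z) in meters.
    points: list = field(default_factory=list)

@dataclass
class LidarLayer:
    # 3D occupancy model; 0.05 m matches the 5 cm resolution in the article.
    resolution_m: float = 0.05
    voxels: set = field(default_factory=set)

    def add_point(self, x: float, y: float, z: float) -> None:
        """Snap a point (in meters) to the grid cell that contains it."""
        cell = tuple(math.floor(v / self.resolution_m) for v in (x, y, z))
        self.voxels.add(cell)

@dataclass
class MapTile:
    camera: CameraLayer = field(default_factory=CameraLayer)
    radar: RadarLayer = field(default_factory=RadarLayer)
    lidar: LidarLayer = field(default_factory=LidarLayer)
```

In this sketch, two LIDAR points that fall within the same 5 centimeter cell collapse into a single voxel, which is one simple way to read "resolution" here.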

The map is not the territory - but it helps

NVIDIA isn't the only company working on this sort of super-accurate mapping data. It's a project we've heard of many times from HERE Technologies, now owned by Audi, BMW, Mercedes, and Intel among others, as its HD Maps evolve into what the company calls the Reality Index. That's effectively a digital representation of the real world, to better educate autonomous vehicles. At the same time, automakers like Ford and General Motors are already using higher-precision maps for Level 2 driver assistance systems. Ford BlueCruise and GM Super Cruise each deliver hands-off adaptive cruise control, holding vehicles centered in the lane by combining a high-resolution GPS sensor with a more data-rich map of divided highways. Both are far from true autonomous driving, since they still rely on the human at the wheel paying attention and being ready to take over at a moment's notice, but they're seen as a precursor to the sort of data actual Level 4 and Level 5 vehicles will require.

Actually getting that mapping data, and keeping it updated, is no small challenge. Ford and GM have promised regular updates to the database used in their vehicles, though in situations where the real world doesn't match the map, the driver will have to retake control. NVIDIA's plan is to tap the power of the crowd to help keep its data up to date.

The power of crowdsourcing

DRIVE Map, then, will be the combination of two map engines. On the one hand, there'll be the so-called ground truth survey map engine: the triple-layer data set which NVIDIA's own survey vehicles will generate. That'll be combined with an AI-based crowdsourced engine, aggregating map updates from millions of cars actually out driving on the roads. That obviously requires a certain set of hardware, and connectivity, on those vehicles. NVIDIA is calling that DRIVE MapStream, effectively the requirements for gathering camera, radar, and LIDAR data. The resulting survey data is then fed into NVIDIA Omniverse, and pushed back out to the global fleet via over-the-air (OTA) updates in a matter of hours.
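As a loose illustration of how a surveyed baseline and crowdsourced reports could be reconciled, the sketch below moves a landmark only when enough vehicles agree that it has shifted. This is not NVIDIA's method, which the announcement does not detail; the function name, the three-report minimum, and the 5 centimeter tolerance are all assumptions chosen for the example.

```python
from statistics import median

def aggregate_reports(survey_pos, reports, min_reports=3, tolerance_m=0.05):
    """Hypothetical merge of a surveyed landmark with fleet observations.

    survey_pos: (x, y) in meters from the ground truth survey map.
    reports:    list of (x, y) positions observed by individual cars.
    Returns the position the map should carry after the merge.
    """
    # Too few observations: trust the survey vehicle's baseline.
    if len(reports) < min_reports:
        return survey_pos

    # The median resists a single car with a badly wrong reading.
    mx = median(x for x, _ in reports)
    my = median(y for _, y in reports)

    # Only update when the crowd's consensus differs meaningfully.
    shift = ((mx - survey_pos[0]) ** 2 + (my - survey_pos[1]) ** 2) ** 0.5
    return (mx, my) if shift > tolerance_m else survey_pos
```

Using a median rather than a mean is a design choice in this sketch: a single faulty sensor reporting a sign 40 meters away would drag a mean far off, while the median stays with the majority.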

That'll be key for autonomous and semi-autonomous vehicles wanting the most accurate view of the real world around them, but it'll also be used by any driverless AI model built using NVIDIA's Earth-scale representation in NVIDIA DRIVE Sim. In addition, it'll help with the generation of simulated environments, effectively creating more lifelike virtual worlds to challenge those AI models.