NVIDIA's Robotics Platform Got A Job At Amazon

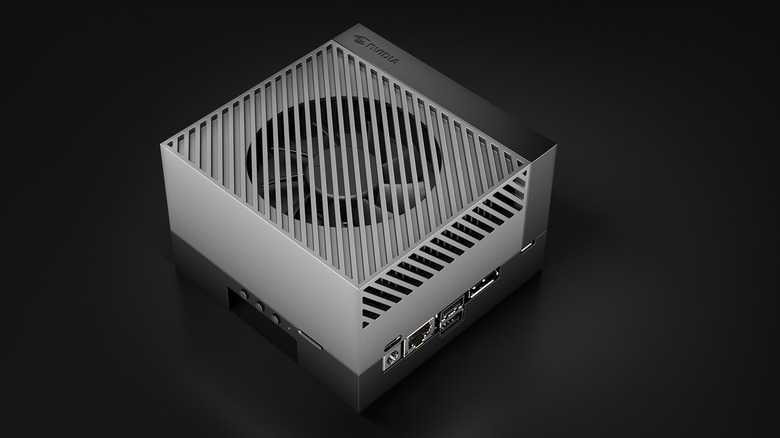

At GTC 2022, NVIDIA revealed the latest version of its robotics platform: a new Jetson module, a robot reference design to showcase it, and a high-profile partnership that will see Amazon use its technology for warehouse robots. The new NVIDIA Jetson AGX Orin Developer Kit goes on sale today, the chipmaker announced, giving partners a simple way to tap the power of the new Jetson AGX Orin modules. "A million developers and more than 6,000 companies have already turned to Jetson," said Deepu Talla, vice president of Embedded and Edge Computing at NVIDIA. "The availability of Jetson AGX Orin will supercharge the efforts of the entire industry as it builds the next generation of robotics and edge AI products."

Orin builds on NVIDIA's existing work with Xavier, its previous-generation platform. The new version delivers 275 trillion operations per second (TOPS), more than 8x the peak performance of Xavier. It combines an NVIDIA Ampere architecture GPU, Arm Cortex-A78AE CPUs, and new deep learning and computer vision accelerators. Memory bandwidth and multimodal sensor support have also been improved.

Orin delivers big performance, low-hassle upgrades

The new Developer Kit goes on sale today at $1,999. It has twelve Arm Cortex-A78AE CPU cores and 32GB of memory with 204 GB/s of bandwidth. Production Orin modules, meanwhile, are expected to arrive in Q4 2022, split into two families. The most affordable will be the Jetson Orin NX Series, starting at $399 for an 8GB version with six CPU cores and 70 TOPS of performance. A $599 version will have 16GB, eight CPU cores, and 100 TOPS.

More expensive, and more capable, will be the Jetson AGX Orin Series. It starts with the $899 AGX Orin 32GB, with eight CPU cores and 200 TOPS. Finally, the flagship AGX Orin 64GB will muster 275 TOPS from its twelve CPU cores, for $1,599. Importantly, the new Orin modules are pin- and software-compatible with Jetson Xavier, which should streamline upgrades for NVIDIA's partners. The starting price for Orin modules is also the same as that of Xavier modules. As a result, customers who have already used tools such as NVIDIA's pre-trained AI models (of which there are now a couple of dozen, the company says) and the NVIDIA Omniverse synthetic data generator should have minimal trouble switching to the more potent modules.
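For readers weighing the production lineup, the announced figures can be tabulated and compared. The short Python sketch that follows is illustrative only: it uses just the launch prices, memory sizes, CPU core counts, and peak TOPS numbers quoted in this article, while the `OrinModule` class and the `tops_per_dollar` metric are our own hypothetical constructs, not anything NVIDIA publishes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OrinModule:
    """Hypothetical record for one production module, using GTC 2022 launch figures."""
    name: str
    price_usd: int
    memory_gb: int
    cpu_cores: int
    tops: int  # peak AI performance as announced, not a measured benchmark

    @property
    def tops_per_dollar(self) -> float:
        # Crude value metric: peak TOPS bought per dollar of list price
        return self.tops / self.price_usd


# Figures exactly as quoted in the article
LINEUP = [
    OrinModule("Jetson Orin NX 8GB", 399, 8, 6, 70),
    OrinModule("Jetson Orin NX 16GB", 599, 16, 8, 100),
    OrinModule("Jetson AGX Orin 32GB", 899, 32, 8, 200),
    OrinModule("Jetson AGX Orin 64GB", 1599, 64, 12, 275),
]

if __name__ == "__main__":
    # Print the lineup ranked by peak TOPS per dollar, best first
    for m in sorted(LINEUP, key=lambda m: m.tops_per_dollar, reverse=True):
        print(f"{m.name:<22} ${m.price_usd:>5,}  {m.tops:>3} TOPS  "
              f"{m.tops_per_dollar:.3f} TOPS/$")
```

By this rough measure the AGX Orin 32GB (about 0.22 TOPS per dollar) comes out ahead of the other three modules (each roughly 0.17), though peak TOPS says nothing about memory capacity, power draw, or I/O, which may matter more for a given robot.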

A reference design for autonomous warehouse robots

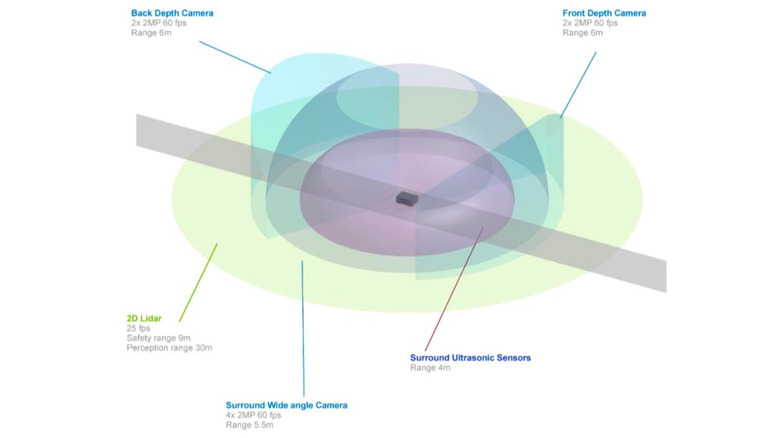

To demonstrate what's possible, NVIDIA is also unveiling a new reference design for an autonomous mobile robot (AMR). Expected to launch in Q2 2022, NVIDIA Isaac Nova Orin combines two Jetson AGX Orin modules with multiple sensors, including up to six cameras, eight ultrasonic sensors, and three lidar units. It comes with Isaac Sim on Omniverse for getting started quickly with simulation, together with tools for processing data from the surround cameras, the front and rear depth cameras, and the laser scanner, which gives the robot a 30-meter perception range.

There'll also be support for NVIDIA DeepMap, the mapping technology NVIDIA gained when it acquired DeepMap in 2021. So far it has mainly been used for 3D mapping for autonomous vehicles, but Isaac Nova Orin will also be able to use it to build more accurate maps of environments such as warehouses, where AMRs will need to roam safely. The technical challenge, NVIDIA points out, overlaps considerably between the two: while AMRs move far more slowly than the average driverless car, they do so in highly unstructured environments.

For Amazon, NVIDIA offers a shortcut to smarter warehouses

Amazon will use simulation technology like this to explore how much larger a role AMRs could play in its facilities, NVIDIA confirmed today. The upcoming April release of NVIDIA Isaac Sim, for example, will let developers build complex warehouse scenes from 3D blocks, streamlining the creation of virtual environments in which to train AMR models.

Another advantage of the new platform is NVIDIA Metropolis. Like a self-driving car, robots such as the Isaac Nova Orin reference design are capable of inside-out perception: they use their own cameras and other sensors to view and try to make sense of their surroundings. The platform also supports infrastructure cameras, sensors mounted in the warehouse or other environment where the AMR operates, which enable outside-in perception. In effect, the robot can get a bird's-eye view of itself and integrate it into its understanding of the overall workspace.

Unsurprisingly, that sort of technology has caught the attention of big retailers like Amazon. With hundreds of warehouses, each around 1 million square feet, Amazon is now using Omniverse and Isaac Sim to model those facilities and then simulate the real-life conditions AMRs need to handle. That includes packages of different sizes, shapes, and orientations, and even different taping, all of which Omniverse has apparently made significantly faster to model.