This Hidden Google Setting Can Get You Real Results In The AI Age

All the way back in 2019, an article in The Wall Street Journal warned readers that AI had learned to write fake news stories. One of the tools highlighted in the article was GPT-2, a precursor to the technology behind ChatGPT. Fast forward to 2025, and AI is now built directly into Google Search. Look something up on Google today, and you will often see an AI Overview at the top of the page. Google hopes this AI-generated summary of the answer to your query will save you time, but it has repeatedly been caught giving out blatantly wrong information. It can even fumble something as basic as knowing what the current year is. And that's not all: websites that churn out AI-generated articles like a content mill are only making the problem worse. Thankfully, Google has a solution.

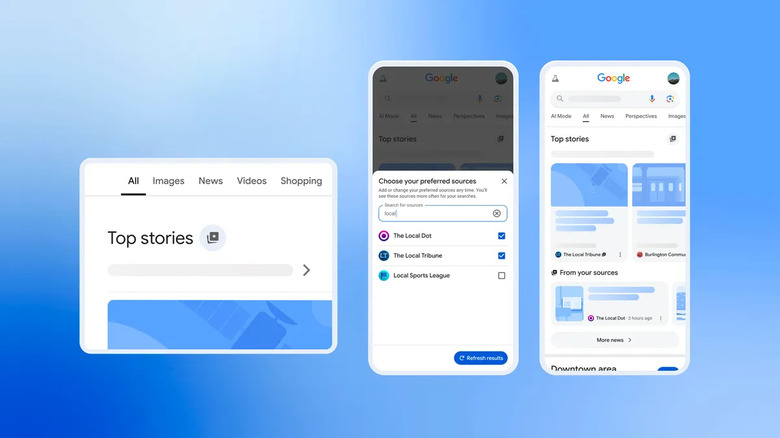

That solution is called Preferred Sources. Launched in August 2025, the feature lets you choose which outlets you want to get information from on a given topic. Here's how to set it up:

- Type a query into Google Search and run the search.

- When the search results appear, scroll down until you see the Top Stories section. Next to the section heading is an icon with a star in the center. Tap it.

- A pop-up window will appear where you can enter the name of a news outlet or website. Once you have selected your sources, tap the "Reload results" button.

- The next time you look up a topic, Google will prioritize results from your preferred sources.

Why sources matter

The rapid infusion of AI into digital tools over the past couple of years has dramatically changed both the user experience and user behavior, and not all of it for the better. Google's AI Overviews are plagued by a well-known problem called hallucination and, to some extent, by a lack of linguistic nuance and reasoning. That explains why these AI models (Gemini, in Google's case) continue to misinterpret and misreport news. Only a few weeks ago, The Verge spotted misleading, AI-generated clickbait headlines in the Discover news feed on phones. One of the most comprehensive studies of its kind, conducted jointly by the European Broadcasting Union (EBU) and the BBC, found that AI models "routinely misrepresent news content" regardless of language or geography. According to the analysis, the error rate was as high as 45%.

Readers are skeptical too. A study by the Reuters Institute for the Study of Journalism, conducted across 47 countries, found that a large share of users are uncomfortable with the idea of reading AI-generated news, especially on topics such as politics. A Poynter study likewise found that nearly half of Americans don't want AI to serve them news. And yet AI is gaining popularity as a news source in some of the world's biggest markets, such as India, and its use has doubled as of 2025, according to a joint study by the University of Oxford and the Reuters Institute. Some newsrooms are using AI ethically in their journalism, but many others don't disclose their use of it. At the end of the day, the onus is on readers to find sources they can trust. This is where Google's Preferred Sources feature offers a lifeline, letting users pick the sources they trust and avoid falling victim to AI-generated material.