What Is A Neural Engine & How Do NPUs Differ From GPUs?

Computing power has increased exponentially over the past few decades. We now have smartphone cameras with incredible computational photography, voice assistants that respond near-instantaneously, and hardware efficient enough to run open-world games on the iPhone. More recently, advancements in generative AI have shed light on just how useful the technology can be, and capabilities keep ramping up with every passing day.

With all the chatter surrounding AI, you have probably come across certain buzzwords and terminology. Apple likes to talk a great deal about its Neural Engine, and this piece of hardware is often brought up in the company's keynotes when talking about machine learning or artificial intelligence-related features. The technology is by no means new: Apple first showcased the Neural Engine in its A11 Bionic chip alongside the launch of the iPhone X in 2017, where it was said to assist with operations such as Face ID unlocks.

Apple's Neural Engine is essentially a specialized component tuned to run the math behind neural networks, the machine learning models loosely inspired by how the human brain processes information. Products other than the iPhone, iPad, and Mac use similar hardware. In fact, NPUs, or Neural Processing Units, can be found in many new computers, smartphones, and even IoT devices. As someone who's had hands-on experience working with hybrid machine learning models during my master's course in Information Technology, what fascinated me the most was the difference between an NPU and a GPU, and how they complement each other.

What is a Neural Processing Unit?

To understand what a Neural Processing Unit is, it helps to first look at what a CPU does. The CPU is a core component in all computers and is largely designed to handle sequential operations, aided by its fewer but high-performing cores. This makes CPUs great for handling logic-heavy tasks, but the lower core count makes them inefficient at repetitive calculations split across thousands of tiny tasks, which is a core aspect of how machine learning models are trained and used.
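To get a feel for how quickly those tiny tasks add up, here is a rough sketch that counts the multiply-accumulate operations in one pass through a small fully connected network. The layer sizes (784, 128, 10) and the function names are assumptions picked purely for illustration, not figures from any particular model or chip.

```python
def dense_layer_macs(inputs: int, outputs: int) -> int:
    """Multiply-accumulate operations needed by one fully connected layer."""
    # Every output neuron multiplies each input by a weight and sums the results.
    return inputs * outputs


def network_macs(layer_sizes: list[int]) -> int:
    """Total multiply-accumulates for one forward pass through the network."""
    # Pair each layer with the next one: (784, 128), (128, 10), ...
    return sum(
        dense_layer_macs(n_in, n_out)
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


# Hypothetical layer sizes, chosen only for illustration.
EXAMPLE_LAYERS = [784, 128, 10]

if __name__ == "__main__":
    print(network_macs(EXAMPLE_LAYERS))  # 784*128 + 128*10 = 101632
```

Even this toy network needs just over a hundred thousand multiply-and-add steps for a single input, and real models are far larger.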

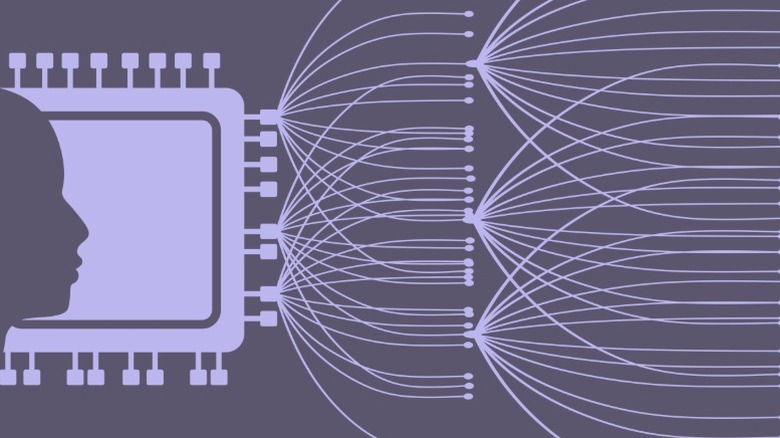

This is where the architecture of an NPU comes into play. Unlike a CPU that's meant for sequential processing, NPUs are designed with parallelism in mind, allowing them to execute the thousands of calculations mentioned earlier in a much shorter time. NPUs pack many more compute units, and these are dedicated to repetitive operations such as matrix multiplication, which plays a huge role in how neural networks work.
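The reason matrix multiplication suits parallel hardware is that each output value depends only on one row of weights and the shared input, so no result has to wait for another. The sketch below illustrates that independence in plain Python. The tiny weight matrix, the input vector, and the function names are made up for illustration, and the thread pool only stands in for an NPU's compute units: Python threads will not actually make this arithmetic faster.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vector):
    """Multiply matching elements and add them up (one output value)."""
    return sum(w * x for w, x in zip(row, vector))


def matvec_sequential(weights, vector):
    """CPU-style: compute one output value after another."""
    return [dot(row, vector) for row in weights]


def matvec_parallel(weights, vector, workers=4):
    """Each row is handed to a separate worker; map() keeps the row order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, vector), weights))


# Hypothetical 2x3 weight matrix and 3-element input.
WEIGHTS = [[1, 2, 3], [4, 5, 6]]
INPUTS = [1, 0, -1]

if __name__ == "__main__":
    print(matvec_sequential(WEIGHTS, INPUTS))  # [-2, -2]
    print(matvec_parallel(WEIGHTS, INPUTS))    # [-2, -2]
```

Both versions return the same answer. The difference on real hardware is that an NPU can work on many rows at once instead of one at a time.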

NPUs also feature on-chip memory, which dramatically reduces the latency of fetching data and directly improves both performance and efficiency. Apple uses a similar approach towards building its own silicon, which is why post-2020 Macs have excelled in performance and battery life. This is evident in our review of the MacBook Air M4, a fanless machine that benefits from a CPU, GPU, RAM, and NPU, all in a single package. In short, an NPU is used in computers as a hardware accelerator, specifically for AI tasks.

Why can't we just use GPUs for AI tasks?

A Graphics Processing Unit is far better known to consumers, since its applications in gaming and video rendering have been in demand for much longer. Like NPUs, and unlike CPUs, a GPU features thousands of comparatively weaker cores that specialize in graphics rendering. This parallel design lets GPUs churn through small, repetitive tasks much quicker than a CPU.

This also includes operations that are used in neural networks and machine learning, and yes, GPUs can be, and are, used for handling AI tasks in computers. In fact, leaders in the artificial intelligence sector, like OpenAI, rely on data centers that use massive clusters of GPUs from Nvidia and AMD. This is obviously industry-grade hardware, but the fact remains: GPUs have a compatible architecture for AI-based operations.

However, GPUs weren't originally purpose-built for handling AI, and that's the key difference between them and NPUs. When you offload just the AI-based tasks to a purpose-built accelerator like the NPU, you significantly lower the power consumption compared with running them on a GPU. Modern devices, including smartphones like the iPhone 16 and Google Pixel 10, come with an NPU that's designed to handle the many AI features we use today. Tasks like live translation, image generation, and call transcription are dynamically offloaded to an NPU.