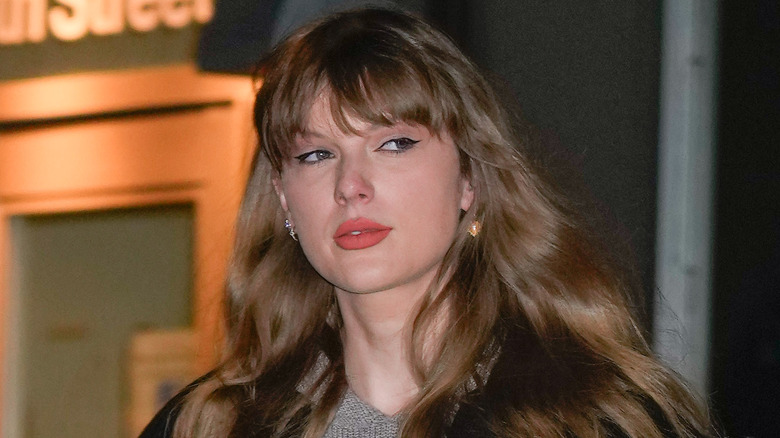

Why The Taylor Swift AI Photos Are A Bigger Deal Than Just An Angry Celeb

A series of AI-generated images seemingly depicting pop star Taylor Swift appeared on multiple social networks in recent days, renewing debate about the abuse of so-called "deepfake" technology. The first signs of this growing societal problem appeared several years ago, when multiple products hit the market that made it simple for the average person to generate deepfaked content. Soon after, a subreddit then known simply as "deepfakes" popped up, featuring a slew of explicit material that had been modified to show the likenesses of various celebrities. Reddit banned that subreddit in 2018, but such moves do little to address the wider issue, which has reached an entirely new level now that generative AI is casually available to anyone.

Whereas deepfaked content traditionally involved applying someone's face to the body of a person in an existing photo or video, generative AI can create images, including photorealistic ones, from nothing more than a text prompt. Major generative AI platforms like Bing's Image Creator have safeguards that block prompts intended to create this kind of content, but there is no shortage of lesser-known options online that lack the same restrictions. In fact, many of these tools were launched specifically to generate graphic content.

Critics say social networks need to do more

According to CNN, the AI-generated Swift images briefly appeared on a number of social networks, including X (formerly Twitter), Facebook, and Reddit. Although all three companies have removed the content, critics argue that a more proactive approach is needed going forward, particularly now that generative AI is readily available to the average person. Many websites and online communities are devoted to creating and spreading this kind of explicit content, and while some states and countries have laws that make doing so illegal, others have not kept pace with the technology.

Swift isn't the first celebrity to fall victim to the technology, but her popularity has brought the issue to the public's attention in a big way, and that may help spur legislation addressing the problem. Such legislation would be all but guaranteed if Swift, who the Daily Mail claims is considering legal action, actually files a lawsuit or two. Meanwhile, although it is effectively impossible to prevent the creation of this kind of explicit material, since AI image generators can run offline, companies may soon use AI-powered scanning technology to detect these images before they are made publicly available. Similar technology is already used to detect child sexual abuse material (CSAM).