What To Do If Someone Deepfakes Your Photo With AI

Just a few years ago, AI models needed many sample images of a person to create a realistic likeness. That is no longer true: some models have proven able to recreate a person's likeness in video from a single photo. The result is known as a "deepfake," a term derived from the deep learning technology used to create manipulated content, whether images, audio, or video. As an application of machine learning, deepfakes use training data, such as a person's existing photos or videos online, to generate content in their likeness.

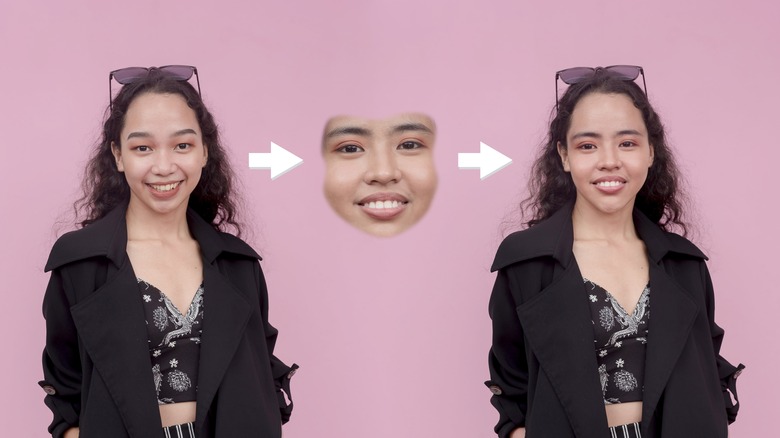

According to the U.S. Department of Homeland Security, the most common technique for creating deepfakes is face-swapping. For years, entertainment companies have used technology like this to finish films when an actor dies before production is complete. However, it is becoming easy for anyone to do because of apps like Snapchat, FaceShifter, FaceSwap, DeepFace Lab, Reface, and TikTok, which use Deep Neural Network (DNN) technology to let users generate various image and video manipulations in real time.

Sadly, people aren't just using this technological advancement to superimpose their faces on animals or their favorite celebrity's body. It's also being used for more sinister purposes, such as non-consensual intimate image (NCII) abuse, which is happening increasingly often. If you've found yourself a victim of non-consensual imagery, here are some actions you can take.

What victims of non-consensual imagery can do

While no one plans to be the victim of non-consensual imagery abuse, you'll want to know the steps to combat it.

- Document the abuse: While there are limited laws in place to protect victims of NCII, it's important to cover your bases in case legal action is required. To do this, document everything. Take screenshots of the images and of any takedown requests you file. If this experience is too painful for you to process, consider enlisting the help of a trusted friend or family member.

- File takedown requests: Many messaging and social media platforms have community guidelines that you can refer to when reporting images. For example, in 2021 Meta announced a partnership with StopNCII.org to stop the sharing of NCII online. If a nude, partially nude, or sexually explicit photo of a minor (even a deepfake) is online, the National Center for Missing & Exploited Children can assist you with filing takedown requests through its Take It Down initiative.

- Contact a lawyer: In some cases, websites may not respond to your takedown requests without legal action. Depending on the country, you may need to reach out to IP or copyright lawyers who can fight on your behalf. If you don't have the funds to hire a lawyer, several civic groups can assist you, such as DeepTrust Alliance, Cyber Civil Rights Initiative, and the Electronic Frontier Foundation.

- Enlist the help of digital reputation management firms: If you are a public figure, you can reach out to a PR agency or digital reputation management firm to help with NCII-related issues. With their professional help, you can develop a plan to mitigate the effects of any unseemly images shared online.

Protect yourself (and your loved ones) from deepfake technology

While tech giants like Google have made efforts to curb the spread of deepfakes, there are still things we can do to avoid contributing to the problem. First, we should be careful about sharing content without verifying that it comes from a trustworthy publication or creator. While some countries like China are actively trying to crack down on deepfakes in the media with legislation, many countries are still in the nascent stages of developing preventive laws for cybercrimes.

Second, when we learn that a particular image or video is a deepfake, we can report it to the platform where we saw it. Third, we can withdraw support for initiatives that use our likeness without our consent or compensation. On an individual level, this can mean avoiding apps or filters that use personal data to train the algorithms behind this technology.

If your likeness is not relevant to your career or personal brand, you may want to reconsider how often you post your face online. Although it may be too late for public figures, or even for those of us who uploaded hundreds of photos of ourselves when we were younger, it's not too late to protect those who come after us.

You can and should encourage family members with young children to explain responsible ways to share media online. While you may not be able to protect children forever, you can do your best to make sure that they understand their privacy rights. When they are older and begin to participate online, they can decide on their own terms how much of their lives they wish to make public. In addition, you can reduce the risk of their likeness being used by predators without their consent.