Why We Should Be Worried About Deepfakes And What Is Being Done To Fight Them

The year is 1969. President Richard Nixon reads a speech about the Apollo 11 astronauts being stranded on the moon. "The men who went to the moon to explore in peace will stay on the moon to rest in peace," he says. That never happened. In truth, the world watched as the crew of Apollo 11 landed successfully and returned to Earth. But a recently surfaced deepfake video of Nixon delivering a speech for this fictional scenario seemed to suggest otherwise. The computer-generated video was created by the Massachusetts Institute of Technology's Center for Advanced Virtuality.

The MIT team wanted to show how deepfakes could affect our world. Trusted individuals, journalists and leaders could be manipulated into saying things they did not say and doing things they did not do. The consequences could be disastrous. Now, lawmakers are scrambling for better solutions.

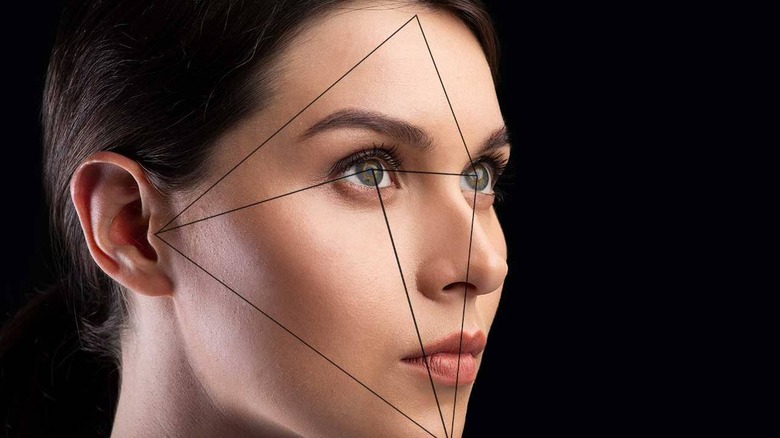

According to a study released by Samsung's AI Center, deepfakes aren't difficult to generate at all. Contrary to popular belief, a convincing talking-head model doesn't require a whole library of images: it can be created from just one.

This raises real concerns about deepfakes being weaponised in a range of scenarios, such as the 2020 election.

The role of big tech

This year, Congress requested a formal report on deepfake technology from the Director of National Intelligence. The House of Representatives Intelligence Committee also sent a letter to Twitter, Facebook and Google asking how they planned to tackle deepfakes in the 2020 election.

Since much of today's news circulates on social media, these tech firms could play a big part in how deepfakes spread. But therein lies the ongoing debate over what constitutes fake news: Facebook has been reluctant to act as the arbiter of truth and has left most user content on its site untouched.

But this week, Facebook gave in to Singapore's controversial fake news law, attaching a note to a post that the Singapore government deemed fake news. The note read: "Facebook is legally required to tell you that the Singapore government says this post has false information."

This surprising willingness to cooperate with authorities could reopen debate about the company's stance on fake news in other jurisdictions.

Beyond businesses, government agencies such as DARPA and research institutions such as MIT, Stanford University and the Max Planck Institute for Informatics are also experimenting with deepfake technology. Some of these projects involve reverse-engineering the underlying algorithms to detect deepfake images.

Combating deepfakes with law

There's also the DEEPFAKES Accountability Act (how about that for an acronym), a bill proposed by Rep. Yvette Clarke (D-NY). It would require anyone creating deepfake content to add an irremovable watermark and a text description. Failing to do so would be a crime.

The Accountability Act would be a step in the right direction, but it has gaping loopholes. Deepfake creators who would comply with such rules are probably not the criminals we are trying to stop. Watermarks are also simple to remove. The Act does little to deter malicious actors or to address how quickly deepfake content can go viral. A false message could spread like wildfire before anyone realizes it is fake.

But at the very least there would be a law that defines the crime. This could speed up court proceedings and kickstart the legal discussion on combating deepfakes.

Why not just ban it altogether?

China's approach is simply to criminalize deepfakes that are published without a disclosure. This is similar to California's deepfake bill, which prohibits circulating doctored media of political candidates within 60 days of an election.

While a ban may make clear a government's complete intolerance of doctored videos, it could be hard to enforce. Nor does it stop false messages from spreading immediately, which is the real issue here.

Some have opposed the idea of a ban, arguing that the technology behind deepfakes has real benefits. Deepfake algorithms could take virtual reality and image processing to new heights, using machine learning to enhance realism, which could be welcome in entertainment, education and medicine. Medical researchers have been experimenting with deepfake technology to produce realistic images for estimates and forecasts.

But these advances also threaten to undermine other areas of society, from trust in the press to trust in leadership. Authorities need to act fast and decide which takes priority.

What we can do now

News is in a tough place today. And as deepfake technology becomes more widespread, faith in news could become even harder to hold on to.

Yet we still receive vast amounts of information, online and off. Our best defense is not to take the news we receive at face value. Think about who posted it and where the content came from. It is also worth checking at least two credible sources to confirm that a story is likely accurate. And take a moment to consider all of this before sharing.

Individual responsibility seems to be our best bet until corporations and the government get to grips with deepfake policy, which will hopefully happen sooner rather than later.