This AlterEgo Wearable Can Hear Your Silent Speech

A wearable that can track silent speech could make secret conversations with an Alexa-style AI possible, removing one of the big hurdles voice control still faces on mobile devices. The handiwork of researchers at the MIT Fluid Interfaces group, AlterEgo isn't mind reading, but it could easily be mistaken for it, at least if you're watching someone use the headset.

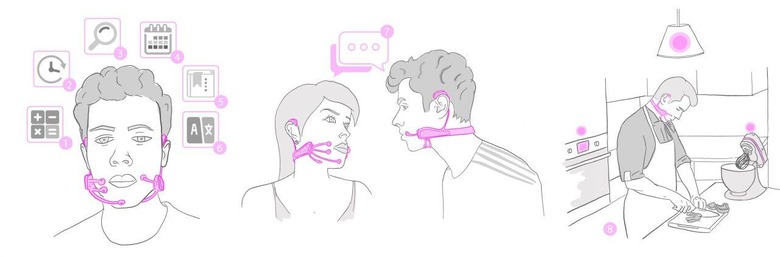

"AlterEgo seeks to augment human intelligence and make computing, the Internet, and machine intelligence a natural extension of the user's own cognition by enabling a silent, discreet, and seamless conversation between person and machine," the team behind the wearable explains. At the heart of it is a new peripheral mounted on the face and neck, which tracks the electrical signals in the facial muscles and vocal cords.

These so-called electrophysiological signals are created when the wearer intentionally, but silently, voices words. The MIT team likens the experience to the way children first learning a language mentally sound out words to understand them. AlterEgo's collection of sensors is able to track that process non-intrusively.

Importantly, the wearable isn't reading brain waves: it's not plucking sentences straight out of the user's mind. Instead, it relies on the conscious decision to speak silently. The computer that the "myoneural interface" is linked to can only receive and process speech that has been intentionally articulated in that way.

The goal is to develop a wearable computer companion that can communicate in a way that's natural to humans, but which isn't as public as traditional voice recognition systems. Smartphones, smart watches, Bluetooth headsets, and more complex headsets like Google Glass all offer the ability to speak out loud and have voice recognition process commands and other instructions. However, that can be uncomfortable to do if you're in a public place, or altogether inappropriate if you're in a museum or other quiet space.

Wearables have already got around one half of that issue, of course. Like Glass, the AlterEgo peripheral has a bone-conduction speaker that can transmit sound to the wearer without occupying their ear with an earpiece. MIT's approach, however, silences the user's inputs as well.

The result is a computer that could hold a conversation that's completely imperceptible to those around the wearer. You could run search queries to answer questions, make personal notes and send messages, issue instructions to a helper AI, and remotely control Internet of Things devices without having to do so visibly or audibly. "Users with memory problems can silently ask the system to remind them of the name of an acquaintance or an answer to a question," the AlterEgo team suggests, "without the embarrassment that comes from openly asking for this information."

With the right peripherals, meanwhile, AlterEgo could have broader implications. Hooked up to a speaker, for example, the system could read the silent speech of a user in one language, translate it, and then speak the phrase in a different language altogether.

Currently, the myoneural interface can deliver more than 90 percent accuracy in picking up silent speech, though that figure is based on an application-specific vocabulary. It's not an instant process, either, and it requires individual training, though the MIT team says it's working on versions that would require no personalization. Also on the cards is a less obvious form factor.

Any commercial discussion is still some way out, too. Right now, this is all just a research prototype: there's still work to be done in reducing the complexity of the wearable component, improving the neural networks that do the speech recognition, and making the whole thing easier to use from the outset. How long that might take is unclear. Still, if you've always shied away from voice recognition because speaking to your gadgets is a little embarrassing in public, AlterEgo could one day allow you to hold your own, private conversations with the AI of your choice.

Images: Lorrie LeJeune; Arnav Kapur; Neo Mohsenvand; MIT Media Lab, under CC